- Millions of pieces of extremist propaganda are released on the internet every year.

- Sorting radical content from non-radical content is a major challenge, even for AI that has been trained for years.

- A new supervised adaptive learning algorithm shows promise for stemming the flow of hate.

Extremist groups like ISIS have used the internet to propagate their ideologies and recruit individuals for more than a decade. Social media played an important role in the rise of ISIS [1], and increased terrorist activity online is described by the United Nations Office of Counter Terrorism as practically synonymous with modern terrorism [2]. The amount of ISIS-related content is staggering; hundreds of millions of pieces of extremist information are posted on the internet every year.

Stopping the proliferation of extremist ideologies isn’t easy. A major problem is identifying ISIS propaganda in the first place; the group evades detection in numerous ways, including mixing its material with content from legitimate news outlets, blurring ISIS branding, and hijacking Facebook accounts [3]. When you combine these evasive tactics with highly heterogeneous and dynamic online environments, traditional content analysis fails to properly characterize which online material is extremist in origin and which is not.

Since 2017, AI algorithms have been trained to detect extremist content. AI now automatically removes more than 98% of propaganda on Facebook, and in 2019 YouTube removed over 20 million videos before they were published [4]. Although these figures are impressive, they aren’t good enough. If just a few videos slip through the net, the results can be disastrous. For example, Omar “super jihadist” Omsen released just a handful of videos on his “19HH” website, named after the 19 September 11 attackers [5]. His propaganda, viewed tens of thousands of times, was responsible for recruiting at least 55 residents of Nice, France, who went to join ISIS in Syria and Iraq [6].

Sociology, Meet AI

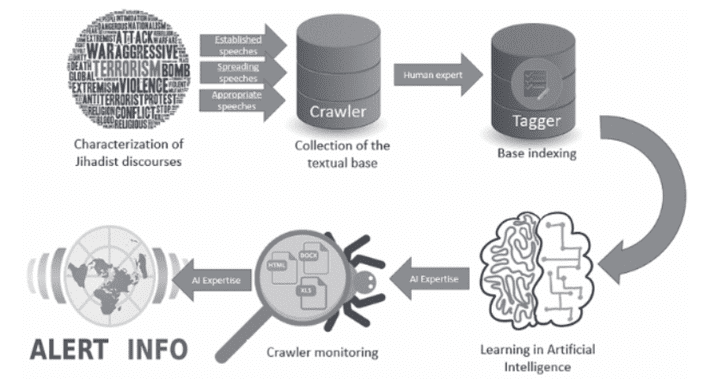

A new study, Effective Fight Against Extremist Discourse Online [1] suggests a new way to tackle the problem of online extremism: combine the expertise of sociologists, Isalmologoists and other experts with an AI algorithm that can recognize and follow the evolution of ISIS material. Where this research is unique is that, if successful, it has the potential to detect any threat in any language, wherever it lurks—adapting as terrorists modify their efforts to evade authorities. In other words, AI has the potential to always be one step ahead of the terrorists, instead of the other way around. The project uses supervised adaptive learning, where the learning algorithm adapts to acquire new descriptors so that it can recognize and follow the concepts as they evolve. The basic steps are shown in the study’s image below:  To establish baselines for AI learning, the study authors characterized three different sources of available ISIS propaganda, assembled with the help of sociologists familiar with the extremist groups:
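This section does not spell out the study's learning algorithm. As a rough illustration only, the sketch below uses a much simpler technique, an incremental multinomial naive Bayes classifier. It shows the two ideas behind "supervised adaptive learning." The model is supervised because it learns from expert-labeled examples. It is adaptive because new words join the vocabulary as descriptors without retraining from scratch. The class name, the tokenizer and the smoothing value are hypothetical. The three labels mirror the expert categories used in the study.

```python
import math
import re
from collections import Counter

# The three categories the experts assign in the study.
LABELS = ("radical", "non-radical", "out-of-context")


def tokenize(text):
    """Lowercase word tokens; a deliberately simple stand-in for real preprocessing."""
    return re.findall(r"\w+", text.lower())


class AdaptiveNaiveBayes:
    """Hypothetical incremental multinomial naive Bayes classifier.

    Each labeled example updates the counts in place, and unseen words are
    added to the vocabulary, so the model follows vocabulary drift over time.
    """

    def __init__(self, labels=LABELS, alpha=1.0):
        self.labels = labels
        self.alpha = alpha                      # Laplace smoothing constant
        self.doc_counts = Counter()             # examples seen per label
        self.word_counts = {label: Counter() for label in labels}
        self.total_words = Counter()            # tokens seen per label
        self.vocab = set()                      # grows as new descriptors appear

    def update(self, text, label):
        """Absorb one expert-labeled example without retraining from scratch."""
        tokens = tokenize(text)
        self.doc_counts[label] += 1
        self.word_counts[label].update(tokens)
        self.total_words[label] += len(tokens)
        self.vocab.update(tokens)

    def predict(self, text):
        """Return the most probable label, or None if nothing has been learned yet."""
        tokens = tokenize(text)
        n_docs = sum(self.doc_counts.values())
        v = len(self.vocab)
        best_label, best_score = None, -math.inf
        for label in self.labels:
            if self.doc_counts[label] == 0:
                continue  # no evidence for this label yet
            score = math.log(self.doc_counts[label] / n_docs)
            denom = self.total_words[label] + self.alpha * v
            for tok in tokens:
                score += math.log((self.word_counts[label][tok] + self.alpha) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label
```

For example, the model can be trained on two examples. One is `update("join the caliphate brothers", "radical")`. The other is `update("weather forecast for nice tomorrow", "non-radical")`. Afterwards, `predict("brothers join us")` returns `"radical"`. Further expert labels can be fed in at any time as new terms emerge.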

To establish baselines for AI learning, the study authors characterized three different sources of available ISIS propaganda, assembled with the help of sociologists familiar with the extremist groups:

- Established speeches: this source contained speeches from ISIS leader Abu Bakr al-Baghdadi, who rose to prominence after he announced the creation of a “caliphate” in parts of Iraq and Syria [7]. Speeches and written references from ISIS’s online journals were also characterized.

- Spreading speeches: contained all the text from seven prominent recruitment videos from the defunct 19HH website.

- Appropriated discourses: statements made by Internet users who read or viewed the propaganda.

A network monitoring client captured real-time data from explicitly monitored locations and sent it to a crawler for further analysis. The crawler retrieved a set of sample words, profiles, and hashtags. These were forwarded to human experts, including sociologists and Islamologists, who classified the data as “radical”, “non-radical”, or “out-of-context.” Next, a database tagger made it easier to add these tags to the dataset. The original posts were then split into sentences, and the sentences were clustered by similarity. The biggest clusters (i.e., the most frequent sentences) were selected and used to populate the knowledge database. The final monitoring process comprised four main stages:
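The article does not say how sentences were compared or clustered. The sketch below assumes Jaccard similarity over word sets and a greedy "leader" clustering with a hypothetical 0.5 threshold. Both are stand-ins chosen for illustration, not the study's method. The sketch shows the split, cluster and select sequence. Posts are split into sentences, similar sentences are grouped, and the largest groups are kept as candidates for the knowledge database.

```python
import re


def split_sentences(post):
    """Naive splitter on '.', '!' and '?' (an assumption; real text needs more care)."""
    return [s.strip() for s in re.split(r"[.!?]+", post) if s.strip()]


def jaccard(a, b):
    """Jaccard similarity between two sets of tokens."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def cluster_sentences(posts, threshold=0.5):
    """Greedy leader clustering.

    Each sentence joins the first cluster whose leader (first sentence) is at
    least `threshold` similar; otherwise it starts a new cluster.
    """
    clusters = []  # list of (leader_tokens, member_sentences)
    for post in posts:
        for sentence in split_sentences(post):
            tokens = set(re.findall(r"\w+", sentence.lower()))
            for leader, members in clusters:
                if jaccard(tokens, leader) >= threshold:
                    members.append(sentence)
                    break
            else:
                clusters.append((tokens, [sentence]))
    return [members for _, members in clusters]


def top_clusters(posts, k=2, threshold=0.5):
    """Return the k largest clusters, i.e. the most frequent sentence patterns."""
    clusters = cluster_sentences(posts, threshold)
    return sorted(clusters, key=len, reverse=True)[:k]
```

The leader approach is chosen here only because it needs no preset number of clusters. A production system would more likely use embeddings and a proper clustering algorithm.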

- Data is obtained from the crawler.

- A “manager” receives the data, processes it, and sends it for analysis.

- The input data is analyzed, and the results are sent back to the manager to update the stored data.

- The results are presented in the GUI.
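The four monitoring stages can be sketched as a small pipeline. Everything here is hypothetical: `crawl`, `Manager` and `run_pipeline` are illustrative names. The crawler reads from an in-memory dictionary instead of live sources, and the processing step only normalizes whitespace. The analyzer is passed in as a function, such as a trained classifier. The GUI stage is reduced to returning display rows.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List, Tuple


def crawl(sources: Dict[str, str]) -> Iterable[Tuple[str, str]]:
    """Stage 1 (hypothetical crawler): yield (item_id, raw_text) pairs.

    The monitored locations are stood in for by an in-memory dict.
    """
    yield from sources.items()


@dataclass
class Manager:
    """Hypothetical manager that processes data, dispatches it, and stores results."""
    analyze: Callable[[str], str]
    store: Dict[str, Tuple[str, str]] = field(default_factory=dict)

    def receive(self, item_id: str, raw_text: str) -> None:
        # Stage 2: receive and process the data (here: whitespace normalization only).
        processed = " ".join(raw_text.split())
        # Stage 3: analyze the input, then update the stored data with the result.
        label = self.analyze(processed)
        self.store[item_id] = (processed, label)

    def report(self) -> List[str]:
        # Stage 4: format results as rows a GUI could display, sorted by item id.
        return [f"{item_id}: {label}" for item_id, (_, label) in sorted(self.store.items())]


def run_pipeline(sources: Dict[str, str], analyze: Callable[[str], str]) -> List[str]:
    """Run all four stages over the given sources and return the display rows."""
    manager = Manager(analyze)
    for item_id, raw_text in crawl(sources):
        manager.receive(item_id, raw_text)
    return manager.report()
```

As a usage example, consider a toy analyzer that labels any text containing "caliphate" as `"radical"` and everything else as `"non-radical"`. With that analyzer, `run_pipeline({"p2": "Nice  weather today", "p1": "Join the   caliphate"}, toy_rule)` returns `["p1: radical", "p2: non-radical"]`. Keeping the analyzer pluggable means the classifier can be retrained or swapped without touching the crawler or the manager.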

The development of the tool is an ongoing project, with the ultimate hope that AI will be able to analyze extremist content, messages, and conversations—adapting as terrorist organizations find new channels of communication. “The scope of the project is large,” state the study authors, “but the stakes are high. The Internet war has started, and terrorist groups are ahead of us.”

References

[1] Effective Fight Against Extremist Discourse Online

[2] Countering Terrorism Online with Artificial Intelligence

[3] ISIS ‘still evading detection on Facebook’, report says

[4] These Tech Companies Managed to Eradicate ISIS Content. But They’re…

[5] France’s super jihadi and the teenage girl trapped in Syria

[6] Meet the Man from Nice Who Rose to Become a Major Jihadist Recruiter

[7] How IS leader Abu Bakr al-Baghdadi was cornered by the US