One of the most impressive generative AI applications I have seen is viperGPT.

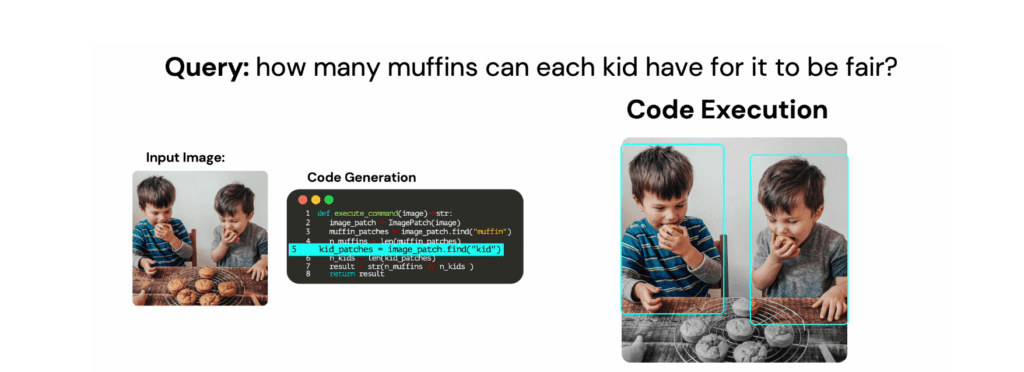

The image / site explains it best. The steps are:

- You start with an image and a prompt. Ex how would you divide the muffins between two boys

- No other information is provided

- Using computer vision, the LLM detects that there are two boys and 8 muffins in the image

- Then the LLM generates code to divide these muffins between the two boys – coming up with the answer of 4

This example, earlier this year, showed the potential of multimodal LLMs

And as of last week, that future is upon us

ChatGPT can now see, hear & speak.

What are the implications of it (as per the open AI announcements)

- You can speak with ChatGPT and have it talk back

- You can provide Image and voice input and get voice output

- ChatGPT can understand and generate text in various languages, styles, and tones

With multimodal ability, you can also work on higher level skills which involve engaging with chatGPT through multiple modalities

This includes

- Rehearsals – drama rehearsals

- Soft skills – preparing for teaching

- Scenario modelling –

- Completing artwork – ex take a picture of a painting and suggesting a story from it

- Suggesting content from images – ex show the London underground map and ask for verbal directions

But we could go higher levels of abstraction for creation

- Create an app from a sketch

- Design a game from a diagram

But what happens when the code generation ability takes on its full impact? In it;s ultimate incarnation, that implies an ability to reason. The real value is in the ability to create better code which ties the other modalities together – much as we see in ViperGPT

Generative AI Megatrends: ChatGPT can see, hear and speak – but what does it mean when chatgPT can think?

One of the most impressive generative AI applications I have seen is viperGPT.

The viperGPT image / site explains it best. The steps are:

- You start with an image and a prompt. Ex how would you divide the muffins between two boys

- No other information is provided

- Using computer vision, the LLM detects that there are two boys and 8 muffins in the image

- Then the LLM generates code to divide these muffins between the two boys – coming up with the answer of 4

This example, earlier this year, showed the potential of multimodal LLMs

And as of last week, that future is upon us

ChatGPT can now see, hear & speak.

What are the implications of it (as per the open AI announcements)

- You can speak with ChatGPT and have it talk back

- You can provide Image and voice input and get voice output

- ChatGPT can understand and generate text in various languages, styles, and tones

With multimodal ability, you can also work on higher level skills which involve engaging with chatGPT through multiple modalities

This includes

- Rehearsals – drama rehearsals

- Soft skills – preparing for teaching

- Scenario modelling –

- Completing artwork – ex take a picture of a painting and suggesting a story from it

- Suggesting content from images – ex show the London underground map and ask for verbal directions

But we could go higher levels of abstraction for creation

- Create an app from a sketch

- Design a game from a diagram

But what happens when the code generation ability takes on its full impact?

In it’s ultimate incarnation, that implies an ability to reason.

Thus, the real value is in the ability to create better code which ties the other modalities together – much as we see in ViperGPT

Image source: viperGPT