A little less than a year ago, I posted a blog entry on generating multivariate frequencies with the Python Pandas data management library, at the same time showcasing Python/R graphics interoperability. For my data science work, the ability to produce multidimensional frequency counts quickly is a sine qua non for subsequent data analysis.

Pandas provides a method, value_counts(), for computing frequencies of a single dataframe attribute. By default, value_counts excludes missing (NaN) values, though they’re included with the dropna=False option. As noted in that blog, however, the multivariate case is more problematic. “Alas, value_counts() works on single attributes only, so to handle the multi-variable case, the programmer must dig into Pandas’s powerful split-apply-combine groupby functions. There is a problem with this though: by default, these groupby functions automatically delete NA’s from consideration, even as it’s generally the case with frequencies that NA counts are desirable. What’s the Pandas developer to do?

There are several work-arounds that can be deployed. The first is to convert all groupby “dimension” vars to string, in so doing preserving NA’s. That’s a pretty ugly and inefficient band-aid, however. The second is to use the fillna() function to replace NA’s with a designated “missing” value such as 999.999, and then to replace the 999.999 later in the chain with NA after the computations are completed. I’d gone with the string conversion option when first I considered frequencies in Pandas. This time, though, I looked harder at the fillna-replace option, generally finding it the lesser of two evils.”
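The groupby problem and the fillna-replace workaround quoted above can be sketched as follows. This is a minimal illustration under stated assumptions, not the original freqsdf: the function name freqs_fillna_sketch, the toy columns, and the 999.999 sentinel default are hypothetical, and the sentinel must never occur in the real data.

```python
import numpy as np
import pandas as pd

SENTINEL = 999.999  # hypothetical "missing" stand-in; must not occur in real data

def freqs_fillna_sketch(df, cols, sentinel=SENTINEL):
    """Hypothetical multivariate frequencies that keep NaN via fillna-replace."""
    filled = df[cols].fillna(sentinel)  # NaN -> sentinel so groupby keeps the rows
    counts = filled.groupby(cols, sort=False).size().reset_index(name="frequency")
    for c in cols:
        counts[c] = counts[c].mask(counts[c] == sentinel)  # sentinel -> NaN again
    counts["percent"] = 100 * counts["frequency"] / counts["frequency"].sum()
    return counts.sort_values("frequency", ascending=False).reset_index(drop=True)

# Toy data (not the Chicago crime file): NaN in a float and an object column
df = pd.DataFrame({
    "district": [1.0, 1.0, np.nan, 2.0, np.nan],
    "arrest": ["Y", None, "N", "Y", "N"],
})

# Plain groupby: any row with a NaN key silently disappears (only 2 of 5 remain)
print(df.groupby(["district", "arrest"]).size().sum())

# Sentinel version: every row is accounted for, NaN combinations included
print(freqs_fillna_sketch(df, ["district", "arrest"]))
```

Newer Pandas releases (1.1 and later) accept groupby(..., dropna=False), which keeps NaN keys without a sentinel; the sketch sticks to the fillna-replace approach available in Pandas 0.23.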

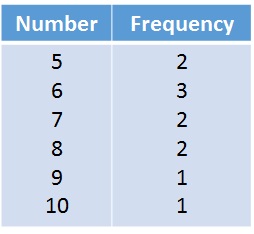

Subsequent to that posting, I detailed a freqs1 function for single-attribute dataframe frequencies and freqsdf for the multivariate case. The functions have worked pretty well for me on Pandas numeric, string, and date datatypes. Simple versions of these functions are included in the full post.
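For the single-attribute case, value_counts(dropna=False) already does most of the work. The sketch below shows one way a freqs1-style table with percentages might look; the name freqs1_sketch and its output columns are hypothetical and are not the author's freqs1.

```python
import pandas as pd

def freqs1_sketch(s):
    """Hypothetical single-attribute frequency table that keeps NaN."""
    counts = s.value_counts(dropna=False)  # NaN is counted as its own value
    out = counts.rename_axis(s.name).reset_index(name="frequency")
    out["percent"] = 100 * out["frequency"] / out["frequency"].sum()
    out["cumpercent"] = out["percent"].cumsum()  # running total of percent
    return out

# Toy data: one missing value among four rows
kind = pd.Series(["THEFT", "THEFT", None, "BATTERY"], name="kind")
print(freqs1_sketch(kind))
```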

Last summer I started experimenting with the Pandas categorical datatype. Much like factors in R, “A categorical variable takes on a limited, and usually fixed, number of possible values (categories; levels in R). Examples are gender, social class, blood type, country affiliation, observation time or rating via Likert scales.” The categorical datatype can be quite useful in many instances, both for signaling to analytic functions a specific role for the attribute and for saving memory by storing small integer codes instead of more memory-hungry representations such as strings.
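The memory saving and the integer-code representation can be seen directly. This sketch uses hypothetical random data with three crime-type labels, not the Chicago file; exact byte counts vary by platform and Pandas version, so none are shown.

```python
import numpy as np
import pandas as pd

# Hypothetical low-cardinality string attribute repeated many times
words = np.random.default_rng(0).choice(["THEFT", "BATTERY", "NARCOTICS"], size=100_000)
as_str = pd.Series(words, dtype=object)
as_cat = as_str.astype("category")

# Categorical stores one small integer code per row plus one copy of each label
print(as_str.memory_usage(deep=True), as_cat.memory_usage(deep=True))
print(as_cat.cat.categories.tolist())  # the distinct levels, sorted
print(as_cat.cat.codes.dtype)          # int8 suffices for three levels

# Missing values are not a category; they are stored as code -1
with_nan = pd.Series(["a", None, "b"], dtype="category")
print(with_nan.cat.codes.tolist())     # [0, -1, 1]
```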

Unfortunately, as I started to incorporate categorical attributes into my work, I found that my trusty freqsdf no longer worked, tripped up by the internal representation of the new datatype. So it was back to the drawing board to extend freqsdf to handle categorical data. In the remainder of this blog, I present proofs of concept for two competing functions, freqscat and freqsC, that aim to handle all the datatypes freqsdf supports plus categorical attributes. Though hopefully useful extensions, these functions should be seen as proofs of concept only.
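One plausible failure point is the fillna-replace step: a categorical column rejects a fill value that is not already one of its categories. The sketch below shows that error and one possible workaround, grouping on the integer codes (where NaN is simply -1) and mapping them back with Categorical.from_codes. It is a hypothetical illustration; freqs_cat_sketch handles categorical columns only and is not the author's freqscat or freqsC.

```python
import pandas as pd

def freqs_cat_sketch(df, cols):
    """Hypothetical multivariate frequencies for categorical columns that keeps
    NaN combinations by grouping on integer codes (NaN is coded as -1)."""
    codes = pd.DataFrame({c: df[c].cat.codes for c in cols})
    counts = codes.groupby(cols, sort=False).size().reset_index(name="frequency")
    for c in cols:
        # Map codes back to labels; -1 becomes NaN and category order is kept
        counts[c] = pd.Categorical.from_codes(
            counts[c], categories=df[c].cat.categories, ordered=df[c].cat.ordered
        )
    return counts.sort_values("frequency", ascending=False).reset_index(drop=True)

crimes = pd.DataFrame({  # toy data, not the Chicago file
    "kind": pd.Categorical(["THEFT", None, "THEFT", None, "BATTERY"]),
    "arrest": pd.Categorical(["N", "N", "N", None, "Y"]),
})

# The sentinel trick breaks: 999.999 is not a known category of "kind"
try:
    crimes["kind"].fillna(999.999)
except (TypeError, ValueError) as err:  # exception type varies by Pandas version
    print("fillna failed:", err)

print(freqs_cat_sketch(crimes, ["kind", "arrest"]))
```

Grouping on codes sidesteps the sentinel entirely and preserves the categorical dtype in the result; a full replacement for freqsdf would still need to route non-categorical columns through a path like the fillna-replace one.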

The cells that follow exercise Pandas frequency options on historical Chicago crime data with over 6.8M records. I first load feather files produced by prior data munging into Pandas dataframes, then build the freqscat and freqsC functions on the freqs1 and freqsdf foundations.

The technology used is JupyterLab 0.32.1, Anaconda Python 3.6.5, NumPy 1.14.3, and Pandas 0.23.0.

Read the entire post here.