Software is never just a set of instructions; it also depends on a context that manages, interacts with, and executes those instructions. At the start of development, an engineer configures a dev environment and may keep changing it at every stage of the work. The problem appears when we need to share the software with testers or deploy it to production. CI/CD, a widely used modern practice, is about testing and possibly deploying the code after every change the developer makes. But how can we share our code if it doesn't work without its context, or works unreliably in different contexts? And what should we do if we want to set up two or more instances of our service to increase performance or reliability?

What is Docker

Docker is an open platform for developing, delivering and operating applications. It was designed to help you deliver applications faster. With Docker, you can separate the application from the infrastructure and treat the infrastructure as a managed application. It helps you test code faster and reduces the time between writing code and running it. Docker does this with a lightweight container virtualization platform, together with processes and utilities that help you manage and deploy applications.

Docker allows you to safely run almost any application in an isolated container. The isolation allows you to run many containers on the same host at the same time because components will not interact with each other in any unwanted way. The lightweight nature of the container, which runs without the extra load of a hypervisor, allows you to get more out of hardware.

Docker allows you to deploy code anywhere, from a local machine or a data center to cloud infrastructure.

Docker architecture

Docker is a client-server application. The Docker daemon does the actual work on your containers (creating, running, stopping them, and so on), and the Docker client talks to the daemon to control it. The client and the daemon can run on the same system, or the client can connect to a remote daemon.

You need to know 3 main terms:

- Image

- Registry

- Container

An image is a read-only template that is used to create a container. For example, an image can contain Ubuntu with Apache, Nginx, or Kafka installed. You can create, update, and share your images. Images are the build component.

Each image consists of layers. Docker uses UnionFS to combine these layers into one image. UnionFS implements a union mount for other file systems. It allows files and directories of separate file systems, known as branches, to be transparently overlaid, forming a single coherent file system. Contents of directories that have the same path within the merged branches are seen together in a single merged directory within the new, virtual file system.
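To make the overlay idea concrete, here is a minimal sketch in Python. It is only a model, not how Docker is implemented: each layer is a plain dictionary from file path to contents, and `collections.ChainMap` plays the role of the union mount. The layer names, paths, and the `list_directory` helper are made up for illustration.

```python
from collections import ChainMap

# Hypothetical layers: each dict maps a file path to its contents.
base_layer = {"/etc/os-release": "ubuntu", "/bin/sh": "shell"}
app_layer = {"/app/server.js": "v1", "/etc/app.conf": "port=80"}
patch_layer = {"/app/server.js": "v2"}

# ChainMap looks keys up in the first mapping first, so the topmost layer goes first.
merged_view = ChainMap(patch_layer, app_layer, base_layer)


def list_directory(view, directory):
    """Return the sorted paths under `directory` as seen in the merged view."""
    prefix = directory.rstrip("/") + "/"
    return sorted(path for path in view if path.startswith(prefix))


# Files from different layers that share a directory appear together.
print(list_directory(merged_view, "/etc"))  # ['/etc/app.conf', '/etc/os-release']
# When several layers contain the same path, the topmost layer wins.
print(merged_view["/app/server.js"])  # v2
```

The lower layers are never modified: `app_layer` still holds `v1`, it is simply hidden by the layer above it.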

That makes Docker lightweight. Whenever you change an image, a new layer is created. Instead of replacing or rebuilding the entire image, as you probably would with a virtual machine, only that layer is added or updated. You also do not need to distribute the whole new image. Only the update is distributed, which makes distributing images easier and faster.
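The effect on distribution can be sketched in a few lines of Python. This is a simplified model with made-up layer names; real Docker identifies layers by content digests rather than readable names, and `layers_to_transfer` is a hypothetical helper, not a Docker API.

```python
def layers_to_transfer(image_layers, layers_on_host):
    """Return the layers of an image that the host does not have yet, in order."""
    present = set(layers_on_host)
    return [layer for layer in image_layers if layer not in present]


# Hypothetical layer names for two versions of the same application image.
old_image = ["ubuntu-base", "node-runtime", "app-v1"]
new_image = ["ubuntu-base", "node-runtime", "app-v2"]

# A host that already holds the old image only needs the one changed layer.
print(layers_to_transfer(new_image, old_image))  # ['app-v2']
```

A host that has never seen the image would receive all three layers, which is the only case where the full image travels over the network.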

This also reduces the disk space your app requires, because several container instances can use the same layers. All image layers are read-only, so they can be shared by multiple instances, while each container gets its own new read-write layer where it can make changes in isolation from the others.
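The sharing scheme can be modeled with a short Python sketch. It assumes the same simplification as before (a layer is a dictionary of paths) and uses `ChainMap.new_child()`, which puts a fresh, empty mapping on top of the existing ones and directs all writes to it. The `start_container` function and the file paths are hypothetical.

```python
from collections import ChainMap

# Hypothetical image made of two layers; containers treat them as read-only.
image_layers = ChainMap({"/app/server.js": "v2"}, {"/etc/os-release": "ubuntu"})


def start_container(image):
    """Return a container view: a fresh writable layer on top of the shared image."""
    return image.new_child()


container_a = start_container(image_layers)
container_b = start_container(image_layers)

# Writes land only in container A's own top layer.
container_a["/tmp/cache"] = "only in A"
container_a["/app/server.js"] = "patched in A"

print(container_a["/app/server.js"])  # patched in A
print(container_b["/app/server.js"])  # v2
print("/tmp/cache" in container_b)  # False
# Both containers reference the very same underlying layer objects.
print(container_a.maps[1] is container_b.maps[1])  # True
```

Because the lower layers are shared objects rather than copies, starting another container costs only one new empty layer.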

At the heart of each image is a base image, for example ubuntu, the base image of Ubuntu, or debian, the base image of the Debian distribution. You can also use your own images as a base for creating new images.

Registries store images. They let you save your own images or use public ones. Registries can be public or private; the official public Docker registry is called Docker Hub. Registries are the distribution component.

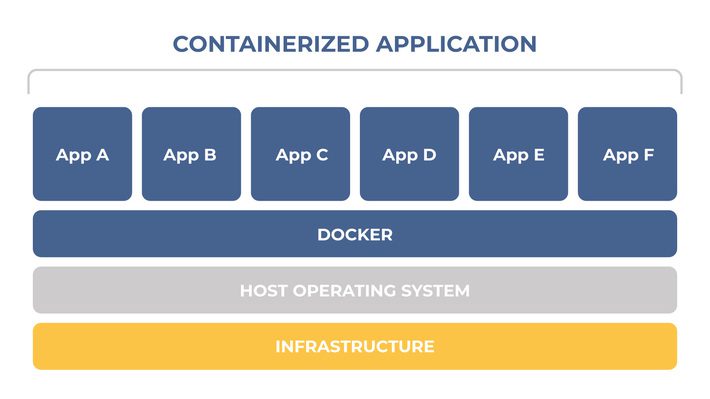

Containers are an abstraction at the app layer that packages user files and dependencies together. They can be created, started, stopped, moved, or deleted. Multiple containers can run on the same machine and share the OS kernel with other containers, each running as an isolated process in user space. Containers take up less space than VMs (container images are typically tens of MBs in size), so a host can handle more applications with fewer VMs and operating systems. Containers are the run component.

Structure of containerized applications with Docker

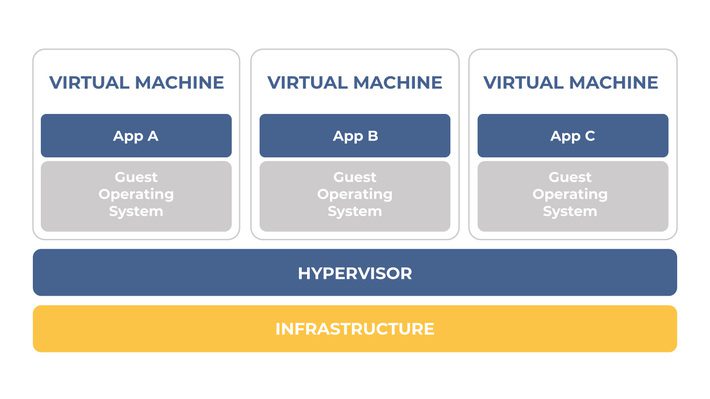

The main alternative is to use virtual machines (VMs), an abstraction of physical hardware that turns one server into many servers. The hypervisor allows multiple VMs to run on a single machine. Each VM includes a full copy of an operating system, the application, and the necessary binaries and libraries, which together take up tens of GBs. VMs can also be slow to boot.

Structure of applications running in virtual machines

How does it work?

Suppose you have a simple REST service written for Node.js and you want to deploy it. First of all, you need to create an image. To do that, you write a Dockerfile in which you specify the set of commands that build the new image. As a base image you can choose node or its smaller Alpine-based variant. Then you list the commands that copy the code into the right directory, install all dependencies, and start the server. On the host machine, Docker checks whether it already has the node image and downloads it if necessary.

When the Docker daemon starts the container, it creates a read-write layer on top of the image (using the union file system, as mentioned earlier), in which the application can be launched.

Docker uses several namespaces to isolate containers, such as the PID, NET and UTS namespaces. Several of the features listed above rely on the Linux kernel, so if you use Windows, you need a special virtual machine to run Linux containers.

Some of the common use-cases

Simplifying Configuration

Docker provides the ability to run any platform with its own config on top of any infrastructure without the overhead of a virtual machine. With a Dockerfile you can keep the configuration in code, and you can also pass settings through environment variables for each environment at deploy time. As a result, the same Docker image can be used in different environments.
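On the application side, this usually means reading settings from the environment instead of hard-coding them. The sketch below shows one way to do that in Python; the variable names (`APP_PORT`, `APP_DB_URL`, `APP_DEBUG`) and their defaults are assumptions made up for this example.

```python
import os


def load_config(env=None):
    """Build the service settings from environment variables, with defaults."""
    env = os.environ if env is None else env
    return {
        # Hypothetical variable names; choose whatever fits your service.
        "port": int(env.get("APP_PORT", "3000")),
        "db_url": env.get("APP_DB_URL", "postgres://localhost/dev"),
        "debug": env.get("APP_DEBUG", "false").lower() == "true",
    }


# The same code yields different settings depending on the environment it runs in.
print(load_config({}))
print(load_config({"APP_PORT": "8080", "APP_DB_URL": "postgres://db/prod"}))
```

The values themselves can then be supplied when the container is started, for example with the `-e` option of `docker run`, so one image serves development, staging, and production.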

Developer Productivity

With Docker, we can keep the dev environment as close to production as possible, which reduces the number of environment-related bugs. Also, once a Dockerfile is written, it can be reused many times to build and deploy the app, which reduces the time spent preparing environments.

App Isolation

There are cases when you need to isolate applications running on the same server, either to decrease cost or as part of a plan to split a monolithic application into decoupled pieces. For example, you might need to run two REST API servers that both require Node.js, but each of them uses a slightly different version of Node.js and of other dependencies. Running each server in its own container solves this issue.

Server Consolidation

The application isolation abilities of Docker allow you to consolidate multiple servers to save on cost, without the memory footprint of multiple OSes. Containers can also share unused memory across instances. Docker provides far denser server consolidation than VMs.

Rapid Deployment

Docker containers can be created and started in milliseconds. This is possible because a container does not boot an OS; it just runs the application process. Moreover, the immutable nature of Docker images gives you peace of mind that things will work exactly the way they have been working and are supposed to work.

Load balancing

When we have a high-load app, we need to spread the load across several instances of the same service. This is a common approach in a microservice architecture. Of course, we need some service that orchestrates our Docker containers, but it is Docker that gives us the ability to quickly create a new instance of our service.
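As an illustration of spreading requests across instances, here is a minimal round-robin sketch in Python. Round-robin is only one possible strategy, and the `RoundRobinBalancer` class and the instance addresses are hypothetical; in practice an orchestrator or a dedicated load balancer does this job.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand out service instances one after another, wrapping around at the end."""

    def __init__(self, instances):
        instances = list(instances)
        if not instances:
            raise ValueError("at least one instance is required")
        self._instances = cycle(instances)

    def next_instance(self):
        """Return the instance that should receive the next request."""
        return next(self._instances)


# Hypothetical addresses of three containers running the same service.
balancer = RoundRobinBalancer(["10.0.0.1:3000", "10.0.0.2:3000", "10.0.0.3:3000"])
first_four = [balancer.next_instance() for _ in range(4)]
print(first_four)
# ['10.0.0.1:3000', '10.0.0.2:3000', '10.0.0.3:3000', '10.0.0.1:3000']
```

Adding capacity then amounts to starting one more container from the same image and adding its address to the list.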

Who uses Docker?

PayPal

PayPal migrated 700+ applications to Docker Enterprise, running over 200,000 containers. This company also achieved a 50% productivity increase in building, testing and deploying applications.

Visa

After just six months in production, Visa achieved a 10x increase in scalability for two customer-facing payment processing applications. Visa processes $5.8 trillion in transactions, while maintaining the company’s robust availability and security capabilities.

Cornell University

Cornell University has achieved 13x faster application deployment by leveraging reusable architecture patterns and simplified build and deployment processes with Docker Enterprise.

Other

BCG Gamma, Desigual, Jabil, Citizen Bank, GE Appliances, BBC News, Lyft, Spotify, Yelp, ADP, eBay, Expedia, Groupon, ING, New Relic, The New York Times, Oxford University Press.