Decision Trees, Random Forests and Boosting are among the top 16 data science and machine learning tools used by data scientists. The three methods are similar, with a significant amount of overlap. In a nutshell:

- A decision tree is a simple decision-making diagram.

- Random forests are a large number of trees, combined (using averages or “majority rules”) at the end of the process.

- Gradient boosting machines also combine decision trees, but start the combining process at the beginning, instead of at the end.

Decision Trees and Their Problems

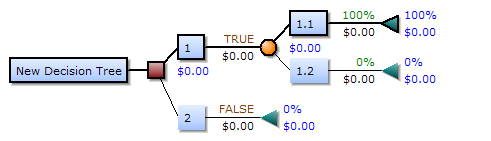

Decision trees are a series of sequential steps designed to answer a question and provide probabilities, costs, or other consequences of making a particular decision.
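
To make the "series of sequential steps" concrete, here is a minimal sketch of a hand-written tree stored as nested dictionaries. The features (`outlook`, `windy`), the outcomes, and the `decide` helper are all made up for illustration; they are not taken from the diagram above.

```python
# A hand-written decision tree stored as nested dictionaries.
# Internal nodes ask a question about one feature; leaves hold the outcome.
# The features and outcomes are hypothetical, chosen only for illustration.
tree = {
    "feature": "outlook",
    "branches": {
        "sunny": {"leaf": "play outside"},
        "rainy": {
            "feature": "windy",
            "branches": {
                True: {"leaf": "stay home"},
                False: {"leaf": "take an umbrella"},
            },
        },
    },
}


def decide(node, sample):
    """Follow one path from the root to a leaf and return the leaf's outcome."""
    while "leaf" not in node:
        value = sample[node["feature"]]
        node = node["branches"][value]
    return node["leaf"]


print(decide(tree, {"outlook": "rainy", "windy": False}))  # prints "take an umbrella"
```

Reading the tree is just following one path from the root to a leaf, which is why a single tree is so easy to interpret.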

They are simple to understand, providing a clear visual to guide the decision-making process. However, this simplicity comes with a few serious disadvantages, including overfitting, error due to bias, and error due to variance.

- Overfitting happens for many reasons, including noise in the data and a lack of representative instances. A single large (deep) tree can easily overfit.

- Bias error happens when you place too many restrictions on the target function. For example, restricting the model to a fixed functional form (e.g. a linear equation) or to a simple binary algorithm (like the true/false choices in the tree above) will often result in bias.

- Variance error refers to how much a result will change based on changes to the training set. Decision trees have high variance, which means that tiny changes in the training data have the potential to cause large changes in the final result.
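
The variance problem can be seen even on a toy example. The sketch below fits the smallest possible tree (a single split, often called a stump) to six made-up points, then changes one target value and fits again. The `fit_stump` helper and the data are assumptions for illustration, not a library API.

```python
def fit_stump(xs, ys):
    """Fit a one-split regression tree on 1-D data by minimising squared error.

    Returns (threshold, left_mean, right_mean).
    """
    pairs = sorted(zip(xs, ys))
    best = None
    for (x_lo, _), (x_hi, _) in zip(pairs, pairs[1:]):
        if x_lo == x_hi:
            continue  # no threshold fits between two equal x values
        threshold = (x_lo + x_hi) / 2
        left = [y for x, y in pairs if x <= threshold]
        right = [y for x, y in pairs if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = sum((y - left_mean) ** 2 for y in left) + sum(
            (y - right_mean) ** 2 for y in right
        )
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    return best[1:]


xs = [1, 2, 3, 4, 5, 6]
ys_original = [0, 0, 0, 1, 1, 1]
ys_one_changed = [0, 0, 1, 1, 1, 1]  # only the third target differs

print(fit_stump(xs, ys_original))     # (3.5, 0.0, 1.0)
print(fit_stump(xs, ys_one_changed))  # (2.5, 0.0, 1.0)
```

Changing a single training point moves the split from 3.5 to 2.5, so the prediction for `x = 3` flips from 0 to 1. Deeper trees have many more splits that can shift in this way.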

Random Forest vs Decision Trees

As noted above, decision trees are fraught with problems. A tree generated from 99 data points might differ significantly from a tree generated from the same data with just one point changed. If there were a way to generate a very large number of trees and average their solutions, you would likely get an answer very close to the true answer. Enter the random forest: a collection of decision trees with a single, aggregated result. Random forests are often reported to be among the most accurate general-purpose learning algorithms.

Random forests reduce the variance seen in decision trees by:

- Using different samples for training,

- Specifying random feature subsets,

- Building many trees and combining their predictions.
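
The three ideas in the list above can be sketched in a few lines of plain Python. This is a deliberately simplified illustration, not how any particular library implements random forests: each "tree" is a single split on one randomly chosen feature, whereas real forests typically grow much deeper trees and sample a feature subset at every split. The helper names (`fit_stump`, `fit_forest`, `predict_forest`) and the toy data are assumptions.

```python
import random
from collections import Counter


def fit_stump(rows, labels, feature):
    """Best single threshold on one feature, scored by training accuracy."""
    best = None
    values = sorted({row[feature] for row in rows})
    for lo, hi in zip(values, values[1:]):
        threshold = (lo + hi) / 2
        left = [lab for row, lab in zip(rows, labels) if row[feature] <= threshold]
        right = [lab for row, lab in zip(rows, labels) if row[feature] > threshold]
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0]
        correct = left.count(left_label) + right.count(right_label)
        if best is None or correct > best[0]:
            best = (correct, threshold, left_label, right_label)
    if best is None:  # every value identical: fall back to the majority label
        majority = Counter(labels).most_common(1)[0][0]
        return (feature, float("inf"), majority, majority)
    return (feature,) + best[1:]


def predict_stump(stump, row):
    feature, threshold, left_label, right_label = stump
    return left_label if row[feature] <= threshold else right_label


def fit_forest(rows, labels, n_trees=25, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        # 1. Different sample for each tree: draw rows with replacement.
        idx = [rng.randrange(len(rows)) for _ in rows]
        # 2. Random feature subset (here simplified to a single feature).
        feature = rng.randrange(len(rows[0]))
        forest.append(
            fit_stump([rows[i] for i in idx], [labels[i] for i in idx], feature)
        )
    return forest


def predict_forest(forest, row):
    # 3. Combine the trees at the end with a "majority rules" vote.
    votes = Counter(predict_stump(stump, row) for stump in forest)
    return votes.most_common(1)[0][0]


# Hypothetical toy data: two well-separated groups with two features each.
rows = [(1, 1), (2, 1), (1, 2), (2, 2), (8, 9), (9, 8), (8, 8), (9, 9)]
labels = ["a", "a", "a", "a", "b", "b", "b", "b"]

forest = fit_forest(rows, labels)
print(predict_forest(forest, (1.5, 1.5)), predict_forest(forest, (8.5, 8.5)))
```

Because every tree is trained independently, the loop in `fit_forest` could run in any order, or in parallel, without changing the result of the vote.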

A single decision tree is a weak predictor, but is relatively fast to build. More trees give you a more robust model and help reduce overfitting. However, the more trees you have, the slower the process: each tree in the forest has to be generated, processed, and analyzed. In addition, the more features you have, the slower the process (which can sometimes take hours or even days); reducing the set of features can dramatically speed it up.

Another distinct difference between a decision tree and a random forest is interpretability. A decision tree is easy to read: you just follow the path and find a result. A random forest is a tad more complicated to interpret. There are a slew of articles out there designed to help you read the results from random forests, but in comparison to decision trees, the learning curve is steep.

Random Forest vs Gradient Boosting

Like a random forest, a gradient boosting model is an ensemble of decision trees. The two main differences are:

- How trees are built: random forests build each tree independently, while gradient boosting builds one tree at a time, each depending on the trees before it. This additive model (ensemble) works in a forward stage-wise manner, introducing a new weak learner to correct the shortcomings of the existing weak learners.

- Combining results: random forests combine results at the end of the process (by averaging or “majority rules”) while gradient boosting combines results along the way.
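
The two differences above can be illustrated with a minimal sketch of gradient boosting for regression. It assumes a squared-error loss, for which the quantity each new tree is fitted to (the negative gradient) is simply the current residual. The weak learner is a one-split tree, and the helper names, toy data, number of rounds, and learning rate are all assumptions chosen for illustration.

```python
def fit_stump(xs, targets):
    """One-split regression tree on 1-D data, chosen by squared error."""
    pairs = sorted(zip(xs, targets))
    best = None
    for (x_lo, _), (x_hi, _) in zip(pairs, pairs[1:]):
        if x_lo == x_hi:
            continue
        threshold = (x_lo + x_hi) / 2
        left = [t for x, t in pairs if x <= threshold]
        right = [t for x, t in pairs if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = sum((t - left_mean) ** 2 for t in left) + sum(
            (t - right_mean) ** 2 for t in right
        )
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def fit_boosted(xs, ys, n_rounds=50, learning_rate=0.5):
    base = sum(ys) / len(ys)  # start from a constant prediction
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        # Each new tree is fitted to what the ensemble still gets wrong.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Results are combined along the way, not at the end.
        preds = [
            p + learning_rate * predict_stump(stump, x) for p, x in zip(preds, xs)
        ]
    return base, learning_rate, stumps


def predict_boosted(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


def mse(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)


# Hypothetical toy data: a noisy staircase.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 3.1, 3.0, 2.9, 5.0, 5.2]

model = fit_boosted(xs, ys)
baseline_mse = mse(ys, [sum(ys) / len(ys)] * len(ys))
one_round_mse = mse(ys, [predict_boosted(fit_boosted(xs, ys, n_rounds=1), x) for x in xs])
boosted_mse = mse(ys, [predict_boosted(model, x) for x in xs])
print(baseline_mse, one_round_mse, boosted_mse)
```

Unlike the forest, the loop in `fit_boosted` cannot be reordered: every round needs the predictions of all the rounds before it. The steadily shrinking training error is also why boosting can end up fitting noise if it runs for too many rounds.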

If you carefully tune parameters, gradient boosting can result in better performance than random forests. However, gradient boosting may not be a good choice if you have a lot of noise, as it can result in overfitting. Gradient boosting models also tend to be harder to tune than random forests.

Random forests and gradient boosting each excel in different areas. Random forests perform well for multi-class object detection and for bioinformatics, a field that tends to have a lot of statistical noise. Gradient boosting performs well when you have unbalanced data, such as in real-time risk assessment.
