Photo by Clayton Robbins on Unsplash

AI development today rests on the shoulders of Machine Learning algorithms that require huge amounts of data to be fed into training models. This data needs to be of consistently high quality to correctly represent the real world, and to achieve that, the data needs to be labeled accurately throughout. A number of data labeling methods exist today, from in-house to synthetic labeling. Crowdsourcing finds itself among the most cost- and time-effective of the labeling approaches (Wang and Zhou, 2016).

Crowdsourcing is human-handled, manual data labeling that uses the principle of aggregation to complete assignments. In this scenario, a large number of performers complete various tasksfrom transcribing audio files and classifying images to visiting on-site locations and measuring walking distanceand their best efforts are subsequently combined to achieve the desired outcome.

Research shows that crowdsourcing has become one of the most sought-after data labeling approaches to date with companies like MTurk, Hive, Clickworker, and Toloka attracting millions of performers the world over (Guittard et al., 2015). In some cases, such as with Toloka App Services, the process has been refined to become almost automatic, requiring only clear guidelines and examples from requesters to receive the labeled data shortly after.

Importance of instructions

This brings us to the main point instructions. As our lives are becoming more AI-dependent, the evolution of AI is in turn becoming increasingly more reliant on ML algorithms for training. These algorithms cannot survive without accurate data labeling. And, therefore, instructions on how to label data correctly are the gateway to success in both crowdsourcing and AI development. Ultimately, poor instructions lead to a poor AI product, nevermind the other factors.

Whereas many crowdsourcing platforms work to hone their delivery pipelines, simplifying the procedure as much as possible, instructions often remain a sore point. No matter how well-oiled the whole data-labeling mechanism is, theres no way around having clear instructions for crowd workers that can be easily understood and followed accordingly.

Since 95% of all ML labels are supervised, i.e. done by hand (Fredriksson et al., 2020), the instructions aspect of crowdsourcing should never be overlooked or underplayed. However, research also indicates that when it comes to having a systematic approach to labeling data and prepping crowd workers, most requesters often dont know what to do beyond general notions (Fredriksson et al., 2020).

Disagreements between requesters, as well as expert annotators, and crowd workers continue to pop up and can only be resolved by refining instructions (Chang et al, 2017). For instance, Konstantin Kashkarov, a crowd worker with Toloka, admits that he has disagreed with the instructions from various requesters more than a few times in his career as a labeler (VLDB Discussion, 2021) thataccording to himcontained errors and inconsistencies. Kairam and Heer (2016) stipulate that these inconsistencies indeed translate into labeling troubles unless theyre swiftly addressed by the majority voting of crowd workers.

However, how to do this efficiently still remains an open question: practice shows that greater numbers (as opposed to fewer experts) are not necessarily reflective of facticity, especially in narrow, domain-specific tasks and the narrower, the more so. In other words, just because there are many crowd performers involved in a given project doesnt mean these performers wont make labeling mistakes; in fact, most of them might make the same or similar mistakes if instructions dont resolve ambiguity. And since instructions are the stepping stone to the whole ML ecosystem, even the tiniest misinterpretation and subsequent labeling irregularity can lead to noisy data sets and imprecise AI. In some cases, this can even potentially endanger our lives, such as when AI is used to help diagnose illnesses or administer drugs.

So, how do we make instructions accurate and make sure data labeling is error-free? To answer this question, we must first look at the types of problems many labelers face.

Frequent issues and grey areas

When it comes to crowdsourcing instructions, research indicates that things are not at all cut and dried. On the one hand, its been shown that crowd performers will only go as far as they have to, and hence all responsibility to do with the comprehension of tasks ultimately falls on the requester. Both confusing interfaces and unclear instructions tend to result in improperly completed assignments. Any ambiguity is bound to lead to labeling mistakes, while the crowd workers cannot be expected to ask clarifying questions, and as a general rule, they wont (Rao and Michel, 2016).

At the same time, crowdsourcing practitioners, among them Toloka, experimented with this notion, and it turned out that crowd workers were savvier than previously expected. Ivan Stelmakh, a PhD student at Carnegie Mellon University and a panelist at the recent VLDB conference, explains that his team tried giving confusing instructions to performers on Toloka on purpose expecting a poor performance. They were surprised to discover that the results were still very robust, which implies that somehow the performers were able to understandwhether instinctively or, more likely, through experiencewhat to do and how. This implies that (a) its not just up to the instructions but those who read those instructions, and (b) the more experienced the readers are, the higher the probability of satisfactory results.

Another conclusion that follows has to do with how simple or complex the task in question is. According to Zack Lipton, who conducts ML research at Carnegie Mellon, the outcome very much depends on whether it is a standard or non-standard task. A simple task with confusing instructions can be completed by experienced performers without major problems. This isnt the case with unusual or rare tasks: even experienced crowd workers may struggle to come up with acceptable answers if the instructions arent clear, because they have no domain-specific experience to fall back on.

Importantly, Liptons experiments demonstrated that with such tasks different versions of instructions play a direct role in the ultimate outcome. Therefore, it appears that Rao and Michels argument about the role of initial guidelines tends to outweigh Ivan Stelmakhs observation of the performers self-guiding ability as the tasks difficulty rises.

Furthermore, according to Mohamed Amgad, a Fellow at Northwestern University who also made an appearance at VLDB, this rule applies to unusual tasks even when the instructions are completely clear. In other words, theres something inherent about erring during manual task completion, and this problem becomes more pronounced as the tasks become less common and more advanced. In the end, it comes down to variability that can only be eliminated with experience (not just clear instructions), so the underlying issueaccording to himis sometimes embedded in the task itself, not its explanation. To put it bluntly, even if there are very clear instructions on how to build a rocket, most of us will probably struggle with this task unless we have some background in engineering and physics.

Confusion and the bias problem

As weve seen, ambiguity in instructions seems to become more of a worry as the task becomes more sophisticated, finally reaching a stage when even clear instructions may lead to substandard results. And sometimes, it turns out, this prevailing inherence goes beyond the crowd workers experience right into the realm of personal interpretation. According to Olga Megorskaya, CEO of Toloka, imminent biases exist in datasets that are related to the actual data, guidelines, and also personality and background of the labelers.

This is known as subjective data and biased labeling in the scientific community, a ubiquitous problem thats qualitatively different from individual errors, because it reflects a common, sometimes hidden group tendency (Zhang and Sheng, 2016 via Wauthier & Jordan 2011 and Faltings et al. 2014). In the best case scenario, this tendency can reflect a particular view that another group might not share, making the labeling results only partially accurate. In the worst case scenario, the outcome can be prejudicial and offensive, such as when in a widely publicized Google case the dark-skinned individuals were mislabeled to be holding a gun, while the light-skinned individuals with the very same device were judged to be holding a harmless thermometer.

Importantly, biased labeling arises not merely from expert vs. non-expert differences or individual preferences, but rather from varying metrics and scales used in decision-making. Often this is the result of ones socio-cultural background and points of reference. Whats more, this phenomenon may not be apparent to requesters, and so the detection of these biases and their modeling can be very challenging, resulting in a negative effect on inference algorithms and training (Zhang and Sheng, 2016).

From the standpoint of statistics, such biases are essentially systematic errors that can be potentially overcome by enlarging sample sizes or collecting different datasets. This means that whats often more important is not clear instructions, but clear examples and enough of them for the crowd workers to see a particular pattern in order to steer clear of erring. At the same time, these biases can be so pronounced that theyre often entirely culture-based. A question like who has the prettiest face in this picture? or identify the most dangerous animal can result in different answers from different individuals within different socio-cultural groups where standards of beauty and local fauna can vary significantly. Often, these differences come down to geography.

A question like identify a blue object in this image can, too, yield very different results from individuals from Russia vs. Japan vs. India, where the colors green, blue, and yellow are not classified in the same way.

ANTTI T. NISSINEN, FLICKR // CC BY 2.0

Yet another example comes from a recent survey that was meant to detect hateful speech and abusive language. It turns out that most English speakers may not have the same standards and acceptance levels compared to those of other linguistic backgrounds; for this reason, to get at the bigger picture, members from other, smaller groups should be consulted.

According to Jie Yang, Assistant Professor at Delft University in the Netherlands, the bias problem should be subject to the top-down approach to labeling. This means that all potential biases have to be considered in advance, i.e. when deciding on the kinds of results that are required and thus who exactly should be completing the tasks to obtain these results.

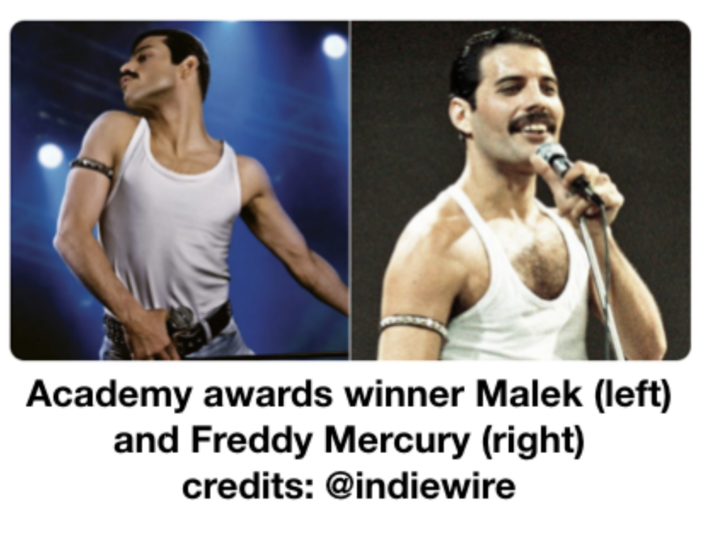

Moreover, according to Krivosheev et al (2020), this overlaps with the issue of confusion of observations, when crowd workersincluding those who try their best to do everything by the bookconfuse items of similar classes. This happens because the items interpretability is embedded within the task, but the description fails to provide enough examples and explanations to point to the desired interpretation. An instance of this phenomenon would be having to identify Freddie Mercury in an image but does actor Rami Malek playing Freddie count or not?

If this confusion issue is present, then the effect observed by Rao and Michel and corroborated by Liptons experiments can be multiplied manifold.

Suggested solutions

Despite some inherent issues related to the type of tasks and performers involved, instructions still remain a pivotal factor in the success of data-labeling projects. According to Megorskaya, even one small change in the guidelines can affect the whole data set; ergo, the question that needs to be addressed is to what extent exactly do changes in instructions have a say in the AI end-product and how to minimize any negative effect?

Jie Yang stipulates that while bias poses a serious obstacle to accurate labeling, crucially, theres no panacea available: any attempt to resolve bias would be entirely domain-dependent. At the same time, as weve seen, this is a multi-faceted problem accurate results rely on clear instructions and, beyond that, on the tasks themselves (how rare/difficult) and also performers (their experience and socio-cultural background). Consequently, and somewhat expectedly, no universal solution encompassing all of these aspects currently exists.

Nonetheless, Zhang et al (2013) proposes a strategy that attempts to control quality by having periodical checkpoints meant to discard both low quality labels and labelers amid completion. Vaughan (2018) further suggests that before proceeding with any task, projects should be piloted by creating micro pools and testing both UI and crowd workers. Its been shown that theres a negative correlation between confusion in instructions and labeling accuracy, as well as acceptance of tasks (Vaughan, 2018 via Jain et al., 2017). In other words, the more examples there are and the clearer the task, the more workers will be willing to participate and the better/quicker will be the result. Be that as it may, while this strategy can help resolve confusion, research indicates that these steps may still be insufficient in combating bias in subjective data.

Zhang and Sheng (2016) suggest a different track historical information on labelers should be evaluated to consider assigning different weight or impact factor to different workers. This weight should depend on their levels of domain expertise and other relevant socio-cultural, as well as educational background. To put it in simpler terms, for better or worse, not all crowd workers should always be treated equally in the context of their labeling output.

Among other suggestions that follow the same logic is Active Multi-label Crowd Consensus (AMCC) put forth by Tu et al. (2019). This model attempts to assess crowd workers to account for their commonality and differences and subsequently group them according to this rubric. Each group shares a particular trait in this scenario thats reflected in the labeling results that can be followed and dissected much more easily. This model is supposed to reduce the influence of any unreliable workers and those lacking the right background or expertise for successful task completion.

The bottom line

Clear instructions are instrumental in realizing data-labeling projects. Concurrently, other factors emerge to share responsibility as tasks become rarer and more challenging. At some point, problems can be expected to arise even when instructions are clear, because the crowd workers tackling the task have little experience to fall back onto.

Accordingly, inherent biases and, to a lesser extent, confusion of observations will persist, which stem not only from the clarity of instructions and examples, but also from choosing the right performers. In certain situations, the crowd workers socio-cultural background may play as much of a role as their domain expertise.

While some of these problematic factors can be addressed using widely accepted quality assurance tools, no universal solution exists apart from (a) selecting those workers that have the right experience and expertise, and (b) those who are able to address the tasks based on their specific background that has been judged to be pertinent to the assignment.

Since instructions remain at the epicenter of the accuracy problem all the same, it is recommended that the following points be considered when preparing instructions:

- Instructions must always be written, so that theyre easy to understand!

- Crowdsourcing platforms may help with preparing instructions, they can enforce these instructions, facilitate the labeling process, check for consistency, and verify results; however, it is the requester who ultimately needs to explain beyond any doubt what is being asked, and how exactly they want the data to be labeled.

- Plentiful and clear/unambiguous examples must always be supplied.

- You need to keep in mind that as a rule of thumb, theres a positive correlation between task difficulty and clarity of instructions: less clarity means less accuracy.

- Understanding instructions shouldnt require any extraordinary skills; if such skills are implicit, you need to acknowledge that only experienced workers will know what to do.

- If you have an uncommon task, instructions must be crystal clear and with contrasting examples (i.e. what is wrong) for even the most experienced workers to be able to follow.

- Confusion can be resolved with clear examples, but in some cases, even experienced crowd workers might provide noisy data sets if the bias problem is not addressed prior.

- The best countermeasure to bias is that the crowd workers ought to be selected not merely based on their expertise, but also their socio-cultural background that must always match the tasks demands.

- Managerial responsibility has to be maintained throughout the planning process: micro decisions will lead to macro results, with even the tiniest detail potentially having far-reaching implications.