I myself do epidemiologic research, which rarely calls for developing machine learning models. Instead, I spend my time developing logistic regression models that I have to be able to interpret for the broader scientific (and sometimes non-scientific) community. I have to be able to explain in some easy, risk-communication way not only the strength of association between the independent variable (e.g., making a habit of social distancing) and the dependent variable (e.g., catching COVID-19), but also, what it will do for you if you adopt the behavior. Take this headline as an example of great public health communication by some scientific researchers in Sweden:

“85% of cough droplets blocked by surgical mask, experiment shows.”

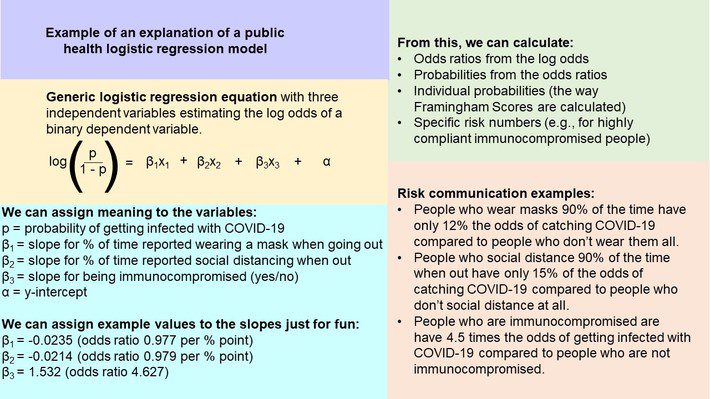

Now that is one explainable finding. Notice how – although I’m pretty sure there was at least one regression equation involved – we don’t hear anything about slopes, or variances, or adjustments. This is the way science has to be done if it is to be understood by a broad community of both scientists and non-scientists. I made this figure below as an example of how we take the results of these models and turn them into public health risk communication.

Public Health Models are Explainable, so Why Not AI?

So this is why I was particularly intrigued when I first heard about “explainable artificial intelligence”, or “explainable AI”, or – to be a little hot and racy – XAI. I heard about it on an industry panel discussion put on by SAS. The reason why I was intrigued by this is that the panelists basically established the consensus that once AI models are developed, most of the time, their developers cannot really explain why a particular output happened.

I have no children in my life right now, and no one I know is pregnant. But when one of my customers asked me during a meeting for some information about a medication for her baby, and we Googled the information and read it together, after that, I started getting ads all over my browser and my Gmail for baby products. Yet, when my other customer asked me earlier this year about hemorrhoid ointment which we investigated online together, I was not regaled with ads for posterior relief (thank goodness)!

So, the relevant question is – why? I can explain every independent variable in my logistic regression model and how it works in the model, so why can’t these modelers do the same for me? Obviously, the answer is that the model has become too complicated for them to understand in order to explain it. So it’s not explainable, right?

Our Lived Experience Suggests that Our AI is at Least Somewhat Explainable

Actually, let’s return to my anecdote. Searching for baby products produces baby ads, but a search for hemorrhoid ointment produces no ads obviously connected with hemorrhoids or related topics. Without realizing it, I performed a test on this AI algorithm that seems to be dominating my online life. This is one of the strategies used by XAI modelers, called LIME. I learned about LIME in an online course I took by instructor Aki Ohashi, who actually does machine learning work at the Palo Alto Research Center. In LIME, once the model has its inputs and outputs, you do post-hoc analysis to try to figure out why it did what it did. One of the things you do in this post-hoc analysis is perturb the inputs and see how that changes the outputs.

Looking for Explanations to AI Outputs can Troubleshoot the Algorithm by Uncovering Biases

While this seems like a desperate way to try to explain an already-deployed AI model, it also seems to me like an excellent way to test an AI model when it is under development. In fact, what would be perfect is to get a diverse crowd of people to come in and try to use that AI model and see what they get. Not only could this be a great way for modelers to take notes so they can come up with a way to explain the AI, it could be a robust way of troubleshooting bias in AI.

I can immediately think of an actionable example. Contrary to popular belief, we have long been using at least rudimentary AI to perpetuate bias. I remember seeing AI-bias-in-action when working for the Hennepin County Department of Corrections in Juvenile Probation, where, like many places, they used a “risk of recidivism score” when trying to decide who to let out of jail. The score was derived from a formula that included multiple inputs, including attributes associated with “prior offenses”. Because I was in Minneapolis, where the police are openly racist, I knew this was biasing the risk score to essentially keep Black people whose guilt was questionable in Minnesota jails. Luckily, one of these Black people had a son who is now the chief judge in Hennepin County who is actively trying to fix such injustice.

But you will see that the County Attorney at the time I was there, who is now US Senator Amy Klobuchar, who I admire greatly, did not call this out or see anything wrong with this system. It was obvious to me, because I myself am Brown and therefore was a police target, and I dealt directly with juvenile probationers and families who were Black and Brown and suffering under this unjust risk score and system. Maybe because Senator Klobuchar is White, she just didn’t see it, because it did not impact her the way it impacted me and our clients. Maybe if Black and Brown people had done a test-run of that risk score, we could have prevented the mess we have today (especially in Minnesota). We could have explained what was wrong with the AI before it was implemented.

Artificial Intelligence Should be “Explainable Enough”

So to answer my own question, we may never be able to totally explain the outputs of AI algorithms, but AI really should be “explainable enough”. Not only is that the ethical way, but as was mentioned in Ohashi’s course, AI is now being made the subject of regulation, in that there are emerging requirements for explanations, especially in healthcare. If scientists can find a way to put a simple number on the complex topic of why people in the community should wear masks to avoid transmitting COVID-19, we should be able to make our AI explainable enough for this exact same community.