Introduction

“The question is not whether intelligent machines can have any emotions, but whether machines can be intelligent without any emotions”.

Marvin Minsky

The ability of AI to recognise emotions is a fascinating subject, with wide-ranging applications from self-driving cars to healthcare.

This blog post is based on a talk given by Maria Pocovi, founder of Emotion Research Labs, at the Data Science for Internet of Things course at Oxford University, of which I am the Course Director. The course covers complex AI implementations, both on the Cloud and at the Edge.

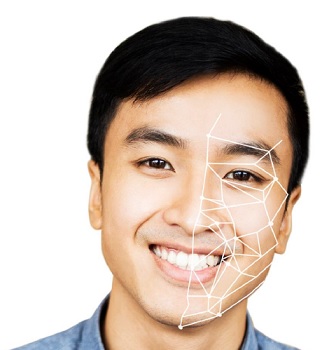

In this post, we explore how emotions can be recognised from facial expressions. I will also explain how I am applying this technology in research on Artificial Empathy, to understand how AI interacts with humans in longer or ongoing relationships (such as coaching). I am collaborating with Livia Ng from the UCL Artificial Intelligence Society on this.

If you have any thoughts/feedback or wish to participate in our research – please email me at ajit.jaokar at feynlabs.ai

Affective Computing

This topic falls under affective computing (sometimes called artificial emotional intelligence, or emotion AI).

Affective computing is the study and development of systems and devices that can recognize, interpret, process, and simulate human affects (emotions). It is an interdisciplinary field spanning computer science, psychology, and cognitive science. The more modern branch of computer science originated with Rosalind Picard’s 1995 paper on affective computing.

A motivation for the research is the ability to simulate empathy. The machine should interpret the emotional state of humans and adapt its behavior to them, giving an appropriate response to those emotions.

The difference between sentiment analysis and affective analysis is that the latter detects the different emotions instead of identifying only the polarity of the phrase.

(above adapted from Wikipedia)
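To make the difference between sentiment analysis and affective analysis concrete, here is a minimal Python sketch. It assumes a hypothetical four-word lexicon; the words, labels and the functions `sentiment` and `affect` are illustrative only and are not any real library's API. Sentiment analysis collapses a phrase to a single polarity, while affective analysis reports which distinct emotions appear.

```python
from collections import Counter

# Hypothetical toy lexicon: word -> (polarity, emotion label).
# Real systems learn such associations from data; these entries are illustrative.
LEXICON = {
    "thrilled": (+1, "joy"),
    "furious": (-1, "anger"),
    "terrified": (-1, "fear"),
    "heartbroken": (-1, "sadness"),
}


def tokens(text: str) -> list:
    """Lower-case words with simple trailing punctuation removed."""
    return [w.strip(".,!?").lower() for w in text.split()]


def sentiment(text: str) -> str:
    """Sentiment analysis: one polarity for the whole phrase."""
    score = sum(LEXICON[w][0] for w in tokens(text) if w in LEXICON)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def affect(text: str) -> Counter:
    """Affective analysis: counts of the distinct emotions in the phrase."""
    return Counter(LEXICON[w][1] for w in tokens(text) if w in LEXICON)


if __name__ == "__main__":
    for phrase in ("I am furious!", "I am terrified!"):
        print(phrase, sentiment(phrase), dict(affect(phrase)))
```

Both example phrases come out as negative sentiment, but the affective view separates anger from fear, which is exactly the extra information an empathetic system would need.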

Artificial Intelligence and Facial Emotion recognition – some background

Facial emotion recognition is an important topic in the fields of computer vision and artificial intelligence. Facial expressions are one of the main information channels in interpersonal communication.

Verbal components convey only one third of human communication, so nonverbal components such as facial expressions are important for recognising emotion.

Facial emotion recognition is based on the fact that humans display subtle but noticeable changes in skin color, caused by blood flow within the face, to communicate how they are feeling. Darwin was the first to suggest that facial expressions are universal, and other studies have shown that the same applies to communication in primates.

Microexpressions – Is AI better at facial emotion recognition than humans?

Independently of AI, there has long been work on facial emotion recognition. Plutchik’s wheel of emotions illustrates the various relationships among emotions, and Ekman and Friesen pioneered the study of emotions and their relation to facial expressions.
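As an illustration of the kind of relationships Plutchik’s wheel encodes, the Python sketch below stores the eight primary emotions in wheel order, so that opposites sit four positions apart and adjacent pairs blend into the primary dyads. It is simplified: it leaves out the intensity levels and the secondary and tertiary dyads of the full model, and the function names `opposite` and `blend` are hypothetical.

```python
from typing import Optional

# Plutchik's eight primary emotions in wheel order: neighbours are adjacent,
# and each emotion's opposite sits directly across the wheel.
WHEEL = ["joy", "trust", "fear", "surprise", "sadness", "disgust", "anger", "anticipation"]

# Primary dyads: the blend of two adjacent primary emotions.
PRIMARY_DYADS = {
    frozenset({"joy", "trust"}): "love",
    frozenset({"trust", "fear"}): "submission",
    frozenset({"fear", "surprise"}): "awe",
    frozenset({"surprise", "sadness"}): "disapproval",
    frozenset({"sadness", "disgust"}): "remorse",
    frozenset({"disgust", "anger"}): "contempt",
    frozenset({"anger", "anticipation"}): "aggressiveness",
    frozenset({"anticipation", "joy"}): "optimism",
}


def opposite(emotion: str) -> str:
    """Return the emotion directly across the wheel (half a turn away)."""
    i = WHEEL.index(emotion)
    return WHEEL[(i + len(WHEEL) // 2) % len(WHEEL)]


def blend(a: str, b: str) -> Optional[str]:
    """Return the primary dyad for two adjacent emotions, or None if not adjacent."""
    return PRIMARY_DYADS.get(frozenset({a, b}))


if __name__ == "__main__":
    print(opposite("joy"))                # sadness
    print(blend("anticipation", "joy"))   # optimism
    print(blend("joy", "fear"))           # None
```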

Is AI better than humans at facial emotion recognition?

AI’s advantage over humans in detecting emotion from facial expressions lies in its ability to detect microexpressions.

Macroexpressions last between 0.5 and 4 seconds (and hence are easy to see). In contrast, microexpressions can last as little as 1/30 of a second. AI is better than humans at detecting microexpressions.
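A quick calculation shows why these durations matter. The Python sketch below uses the durations quoted above and a few camera frame rates chosen as assumptions, and counts how many video frames an expression spans. At 30 frames per second, a 1/30 s microexpression occupies a single frame (and at 25 fps, less than one), whereas even the shortest macroexpression spans at least a dozen frames at these rates.

```python
from fractions import Fraction

# Durations quoted in the text, in seconds (exact fractions avoid float rounding).
MICRO_MIN = Fraction(1, 30)   # microexpressions: as little as 1/30 s
MACRO_MIN = Fraction(1, 2)    # macroexpressions: from 0.5 s ...
MACRO_MAX = Fraction(4)       # ... up to 4 s


def frames_spanned(duration_s: Fraction, fps: int) -> Fraction:
    """Number of video frames captured while an expression of this duration is visible."""
    return duration_s * fps


# Assumed, typical camera frame rates.
for fps in (25, 30, 60, 120):
    micro = frames_spanned(MICRO_MIN, fps)
    macro_lo = frames_spanned(MACRO_MIN, fps)
    macro_hi = frames_spanned(MACRO_MAX, fps)
    print(f"{fps:>3} fps: microexpression ~{float(micro):.2f} frames; "
          f"macroexpression {float(macro_lo):g} to {float(macro_hi):g} frames")
```

This suggests why a machine analysing video frame by frame, ideally at a higher frame rate, can catch what a human watching in real time is likely to miss.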

Haggard & Isaacs (1966) verified the existence of microexpressions while scanning films of psychotherapy sessions in slow motion.

In these sessions, microexpressions occurred when individuals attempted to conceal or misrepresent their emotions.

Emotion Research Labs uses the Facial Action Coding System (FACS), a system based on facial muscle changes that characterizes facial actions in order to identify individual human emotions, including basic emotions, compound emotions and secondary emotions (ecstasy, rejection, self-rejection).
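To illustrate how FACS output can be mapped to emotions, here is a minimal Python sketch. It assumes an upstream detector has already produced the set of active Action Units (AUs) for a frame, and it uses simplified versions of commonly cited AU prototypes for basic emotions (intensity codes dropped, contempt omitted). This is an assumption for illustration, not Emotion Research Labs’ method, and `score_emotions` and `best_match` are hypothetical names.

```python
from __future__ import annotations

# Simplified prototype AU combinations for basic emotions.
# Assumption: commonly cited EMFACS-style prototypes with intensity codes dropped.
PROTOTYPES: dict[str, frozenset[int]] = {
    "happiness": frozenset({6, 12}),              # cheek raiser, lip corner puller
    "sadness": frozenset({1, 4, 15}),             # inner brow raiser, brow lowerer, lip corner depressor
    "surprise": frozenset({1, 2, 5, 26}),         # brow raisers, upper lid raiser, jaw drop
    "fear": frozenset({1, 2, 4, 5, 7, 20, 26}),
    "anger": frozenset({4, 5, 7, 23}),
    "disgust": frozenset({9, 15, 16}),            # nose wrinkler, lip corner and lower lip depressors
}


def score_emotions(active_aus: set[int]) -> dict[str, float]:
    """Fraction of each prototype's AUs that are present in the detected set."""
    return {
        emotion: len(proto & active_aus) / len(proto)
        for emotion, proto in PROTOTYPES.items()
    }


def best_match(active_aus: set[int]) -> str | None:
    """Emotion whose prototype is most completely present, or None if no AU matches."""
    scores = score_emotions(active_aus)
    emotion = max(scores, key=scores.get)  # ties resolve to the first in dict order
    return emotion if scores[emotion] > 0 else None


if __name__ == "__main__":
    detected = {6, 12, 25}  # hypothetical detector output for one frame
    print(best_match(detected))
    print(score_emotions(detected))
```

Scoring by the fraction of each prototype present is the simplest possible choice; a practical system would also weigh AU intensities and handle compound emotions, which combine prototypes.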

A model for artificial empathy for long engagements between humans and AI

How do we apply this for AI?

One of the areas I am working on is artificial empathy.

The concept of artificial empathy has been proposed by researchers such as Asada and others.

Empathy is the capacity to understand or feel what another person is experiencing from within their frame of reference, that is, the capacity to place oneself in another’s position.

All three types of empathy require the sharing of experiences. Hence, if AI is to understand human empathy, people need to share their experiences with it. This is especially true in a longer relationship between humans and AI, as in the coaching example.

The research I am working on is based on the idea of creating an “AI coach” and a model for engagement between humans and AI in longer interactions. It relies on the propensity of humans to share experiences with the AI coach. Ultimately, artificial empathy could even be modelled as a reinforcement learni…

If you have any thoughts/feedback or wish to participate in our research, please email me at ajit.jaokar at feynlabs.ai

Image source: Emotion Research Labs