Introduction

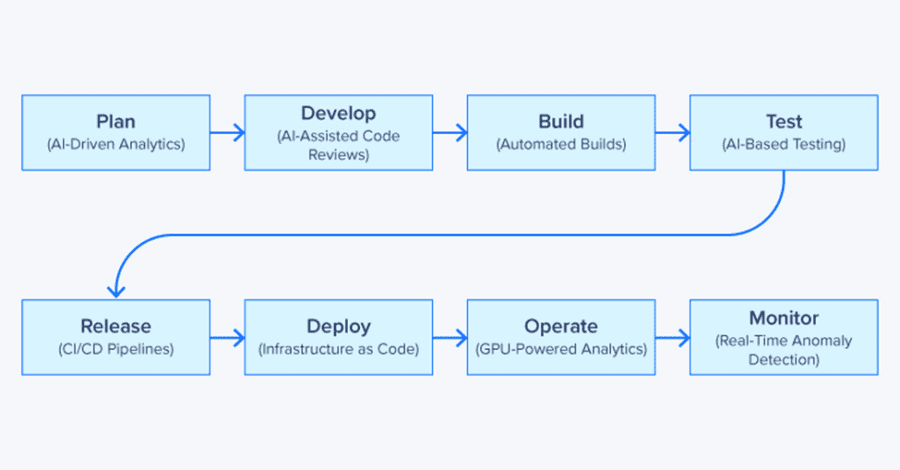

DevOps has long been the backbone of modern software development, enabling faster development cycles and greater operational efficiency. It has been further advanced by integrating Artificial Intelligence (AI), automation, and accelerated computing, reshaping how organizations approach their workflows.

We will cover the necessity of GPUs and HPC to stay competitive, how AI and automation in DevOps revolutionize the workflow, and the impact on various industries.

The role of GPUs and HPC in AI-enhanced DevOps

The transformative power of AI in reshaping business operations is no longer theoretical—it’s a reality driving modern enterprises. As AI models grow increasingly complex, they demand infrastructure robust enough to support seamless deployment within DevOps environments. This is where GPUs and high-performance computing (HPC) have emerged as indispensable tools for managing these challenges effectively.

Foundational large language models like GPT, DeepSeek, Gemini, Claude, and Llama have become accessible through APIs or have been open-sourced. Businesses and organizations can leverage these complex models without bearing the cost of training them. For additional cost savings and flexibility, organizations can deploy an LLM locally on a high-performance GPU system and use that foundational model to power DevOps workflows.

Here is how GPUs are essential to evolving and accelerating your DevOps workflows:

- Training Large-Scale ML Models: Training machine learning models for predictive maintenance requires substantial computational power. GPUs designed for deep learning tasks significantly reduce training and retraining times, so updated models can be deployed sooner.

- Real-Time Anomaly Detection: Continuous monitoring systems leverage GPU capabilities to detect anomalies in real time across various applications, from IT infrastructure monitoring to security threat detection. This proactive approach allows teams to address potential issues before they escalate into significant problems.

- Data-Intensive Tasks: Log aggregation and visualization generate vast amounts of data that need efficient processing. HPC infrastructure facilitates the rapid analysis of these datasets, using distributed computing techniques, while providing insights that drive informed decision-making.

By integrating GPUs and HPC into their workflows, organizations can handle the increasing complexity associated with AI models while improving efficiency across their development processes.
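To make the anomaly detection idea above concrete, here is a minimal sketch that flags metric values deviating sharply from a rolling window. It is an illustration only: it runs on the CPU with the Python standard library, and the function name `detect_anomalies`, the window size, the threshold, and the sample latencies are all assumptions rather than anything prescribed by a particular monitoring tool. A production system would more likely use a trained model running on GPU hardware.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(values, window=5, threshold=3.0):
    """Return indices of values that deviate strongly from the recent window."""
    recent = deque(maxlen=window)
    anomalies = []
    for i, value in enumerate(values):
        # Only score a value once a full window of history is available.
        if len(recent) == window:
            mu = mean(recent)
            sigma = pstdev(recent)
            # Skip scoring when the window is flat to avoid dividing by zero.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append(i)
        recent.append(value)
    return anomalies


# Hypothetical request latencies in milliseconds with one obvious spike.
latencies_ms = [100, 102, 98, 101, 99, 100, 250, 101, 99, 100]
print(detect_anomalies(latencies_ms))
```

Note that the spike itself enters the window after it is flagged, which temporarily inflates the standard deviation; a more careful implementation might exclude flagged values from the history.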

AI & ML in DevOps

Artificial Intelligence is revolutionizing DevOps by enabling predictive analytics, automating complex workflows, and enhancing system observability. AI-driven DevOps, often referred to as AIOps, leverages machine learning models to analyze vast amounts of operational data, identify patterns, and proactively optimize processes.

- AI-Driven CI/CD Pipelines: Traditional Continuous Integration/Continuous Delivery pipelines rely on predefined rules and scripts. AI enhances them by dynamically adjusting testing and deployment strategies based on real-time data. AI can leverage machine learning algorithms to predict failures, optimize build times, and recommend the best deployment configurations.

- Self-Healing Infrastructure: AI-powered monitoring tools detect anomalies in system performance and automatically apply corrective actions, reducing downtime and minimizing the need for manual intervention.

- AI-Augmented Monitoring & Observability: AI enables faster root cause analysis by correlating logs, metrics, and traces across distributed environments, making it easier to detect issues before they impact production.
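As a rough illustration of a pipeline that adapts to past results, the sketch below reorders tests so that those that failed most often in the past run first. This is a simple frequency heuristic standing in for a learned failure-prediction model; the function `prioritize_tests` and the test names are hypothetical.

```python
def prioritize_tests(history):
    """Order test names by observed failure rate, highest first.

    `history` maps a test name to a list of past outcomes (True = passed).
    """
    def failure_rate(outcomes):
        if not outcomes:
            return 0.0
        return outcomes.count(False) / len(outcomes)

    # Sort by failure rate descending, then by name for a deterministic order.
    return sorted(history, key=lambda name: (-failure_rate(history[name]), name))


# Hypothetical outcomes from the last four pipeline runs.
history = {
    "test_login": [True, True, False, True],
    "test_checkout": [False, False, True, True],
    "test_search": [True, True, True, True],
}
print(prioritize_tests(history))
```

Running the likeliest failures first shortens the feedback loop when a build is going to break anyway; a real system could replace `failure_rate` with a model that also considers which files changed.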

Automation in DevOps

Automation is the backbone of modern DevOps, eliminating repetitive tasks and ensuring consistency across environments. The rise of AI-powered automation further enhances efficiency, allowing teams to focus on innovation rather than manual operations. By embedding AI and intelligent automation into DevOps, organizations can achieve higher efficiency, reduce operational complexity, and improve software reliability.

- Infrastructure as Code (IaC) Evolution: IaC automates the provisioning and configuration of infrastructure, but AI-powered IaC takes it a step further by dynamically adjusting infrastructure resources based on workload demands.

- AI-Powered Workflow Orchestration: Intelligent automation tools analyze past deployment patterns to optimize build, test, and deployment processes, reducing unnecessary steps and accelerating time to market.

- Policy-Driven Automation: Security and compliance are increasingly enforced through automation, ensuring that policies are applied consistently across development and production environments without manual oversight.
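Policy-driven automation can be pictured as a set of machine-checkable rules evaluated against every resource definition before it is deployed. The following is a minimal sketch under that assumption; the policy names, the resource fields (`encrypted`, `public`, `tags`), and the `check_policies` helper are invented for illustration and do not correspond to any specific policy engine.

```python
def check_policies(resource, policies):
    """Return the names of the policies that the resource violates."""
    return [name for name, rule in policies.items() if not rule(resource)]


# Hypothetical policies: each rule returns True when the resource complies.
POLICIES = {
    "encryption_enabled": lambda r: r.get("encrypted") is True,
    "no_public_access": lambda r: not r.get("public", False),
    "has_owner_tag": lambda r: "owner" in r.get("tags", {}),
}

# Hypothetical storage bucket definition taken from an IaC template.
bucket = {"encrypted": True, "public": True, "tags": {"team": "ml"}}
violations = check_policies(bucket, POLICIES)
print(violations)
```

A pipeline step could fail the build whenever `violations` is non-empty, so the same rules apply in development and production.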

Computational efficiency with DevOps

As AI workloads demand greater computational power, optimizing infrastructure for performance and cost efficiency is critical. DevOps is shifting towards mindful resource utilization to save on cost and promote efficiency. By integrating computational efficiency strategies into DevOps, organizations can support AI-driven applications at scale while managing infrastructure costs effectively.

- Optimizing resource utilization: AI-driven resource management dynamically allocates compute power, ensuring workloads run efficiently while minimizing costs. Techniques like auto-scaling, workload scheduling, and energy-efficient computing are becoming standard.

- Serverless & edge computing trends: DevOps teams are increasingly adopting edge computing to offload processing from centralized data centers, reducing latency and improving efficiency for AI-driven applications.

- Hardware-aware DevOps: As GPUs become essential for AI workloads, DevOps teams must tailor pipelines and infrastructure to optimize their utilization, ensuring compatibility and peak performance.
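The auto-scaling technique mentioned above often reduces to a proportional rule: scale the replica count by the ratio of observed to target utilization, then clamp the result. The sketch below follows the same general formula documented for the Kubernetes Horizontal Pod Autoscaler, but the function name, parameters, and limits are assumptions chosen for illustration, and details such as tolerance bands and stabilization windows are omitted.

```python
import math


def desired_replicas(current_replicas, current_util, target_util,
                     min_replicas=1, max_replicas=10):
    """Return a replica count proportional to observed vs. target utilization."""
    if target_util <= 0:
        raise ValueError("target_util must be positive")
    # Proportional rule: more load than target means more replicas.
    raw = math.ceil(current_replicas * current_util / target_util)
    # Clamp to the configured bounds so scaling never runs away.
    return max(min_replicas, min(max_replicas, raw))


# Four replicas at 90% GPU utilization with a 60% target.
print(desired_replicas(4, 90, 60))
```

The upper bound is what keeps cost predictable: even under a sudden load spike, the scaler cannot request more than `max_replicas`.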

Industry-specific applications of AI-driven DevOps

The integration of GPUs and HPC into DevOps practices has enabled significant advancements across industries.

Life science & healthcare

In healthcare, AI-driven DevOps accelerates predictive analytics and supports the deployment of AI models for patient care systems. By utilizing GPUs to process vast amounts of medical data quickly, such as electronic health records (EHRs), imaging data, and genomic information, healthcare providers can enhance diagnostic accuracy and improve patient outcomes through timely interventions.

- Medical Imaging & Diagnostics: AI models for medical image analysis require frequent updates. Automated DevOps processes deploy improved algorithms with minimal disruption.

- Drug Discovery & Molecular Dynamics: Large-scale computational workloads in biomolecular research can benefit from AI-optimized workload scheduling and cloud resource scaling. This includes molecular dynamics, AI protein folding, and cryo-EM.

- HIPAA-Compliant DevOps: Automated security enforcement ensures that handling of patient data aligns with regulatory requirements.

Finance & Fintech

The fintech sector benefits from real-time fraud detection systems powered by GPU-optimized machine learning models that flag anomalies in account access and transactions. These systems analyze transaction patterns quickly enough for financial institutions to identify fraudulent activity as it occurs. By leveraging GPUs for parallel processing tasks, organizations can enhance their ability to detect anomalies in financial transactions while minimizing false positives.

- Real-time Fraud Detection: AI-driven DevOps pipelines help deploy and update fraud detection models faster by automating testing and model validation.

- AI-Powered Credit Scoring Models: DevOps streamlines the deployment and retraining of AI models that assess loan applications. Read about SnapFinance’s implementation utilizing Exxact hardware.

- CI/CD for Payment APIs: Ensures faster and more reliable deployment of payment integrations while maintaining security.

Engineering & MPD

The manufacturing product design (MPD) and engineering sector uses DevOps to streamline workflows for simulation and prototyping. Because the process is both digital and physical, digital twin environments can also streamline and optimize facility operations.

- Continuous Synchronization with Real-World Data: Automate the integration of real-time sensor data into digital twin simulations to predict equipment downtime and optimize operations.

- Automated Data Processing Pipelines: Use DevOps workflows to preprocess, clean, and analyze simulation data before engineers access it. Implement shared repositories where multiple engineers can work on simulation models in parallel.

- Smart Factory Automation: Continuous integration of AI-driven robotics and IoT devices improves production efficiency and quality control. Robotic systems within the factory will run on their own DevOps workflows.

E-commerce & retail

AI-driven DevOps enhances retail and e-commerce operations by automating deployments, optimizing infrastructure, and improving customer experiences. It enables real-time inventory management, personalized recommendation engines, and AI-powered chatbots to operate seamlessly. Automated CI/CD pipelines ensure faster feature rollouts, while predictive analytics optimize demand forecasting and supply chain logistics.

- Personalized Recommendation Engines: AI-driven DevOps enables rapid updates to recommendation models, ensuring real-time personalization.

- Automated Inventory Management: Predictive analytics optimize supply chain logistics, reducing waste and improving demand forecasting.

- AI-Powered Chatbots & Customer Support: Automated deployment of NLP-driven support systems enhances customer experience.

Roadmap for adoption

In an evolving landscape increasingly dominated by automation and AI, organizations can position themselves to achieve long-term success. To navigate this transition successfully, consider the following roadmap:

- Infrastructure Assessments: Evaluate current infrastructure capabilities and identify gaps in readiness for future AI-driven enhancements.

- Investments in GPU/HPC: Dedicate resources to purchasing hardware like GPUs or HPC systems for advanced tasks. Consider on-premises hardware for scaling workloads, as cloud computing costs can ramp up quickly. Contact Exxact today for more information.

- Gradual Tool Integration: Implement AI tools one step at a time, giving teams sufficient time to adapt and ensuring smooth integration without disrupting current workflows.

- Continuous Learning Initiatives: Support ongoing education and skill development in emerging AI methodologies and high-performance computing techniques.

- Monitoring Outcomes Regularly: Regularly measure the effect of new AI solutions using performance metrics. Ensure that the implementation aligns with organizational goals and adjust as needed.

There are some considerations to keep in mind during this AI-driven shift:

- The Human-Centric Shift in DevOps: As organizations integrate AI and automation into their DevOps practices, operational changes are inevitable. This shift demands a new mindset prioritizing collaboration between development and operations teams to harness the potential of AI.

- Navigating Changes: The integration of AI necessitates prioritizing team collaboration and communication. Organizations should encourage teams to embrace the changes AI can bring to innovation through experimentation, because pushback hinders seamless implementation.

- Upskilling for Advanced Tools: Organizations must invest in training programs that provide their workforce with the necessary skills to use advanced tools that depend on GPU-accelerated computing, like local LLM deployment and building machine learning algorithms.

The combination of AI, automation, and advanced computing power is transforming the way DevOps operates. By fully embracing these innovations, organizations can build smarter, faster, and more resilient workflows equipped to handle the challenges of today’s fast-paced digital world.

Disclaimer: Kevin Vu is a Marketing Specialist at Exxact Corporation, a company that builds innovative computing platforms designed to solve some of the world’s most complex problems. The views and opinions expressed in this article are his own and do not necessarily reflect those of Exxact or Data Science Central. While the article may mention or reference products, services, or solutions offered by Exxact, it is intended solely for informational and educational purposes and should not be interpreted as an endorsement or promotion of any specific commercial interests.