Summary: AI and predictive analytics are now so prevalent in our day-to-day lives that they have drawn the attention of the government, particularly over how certain groups might be adversely impacted. As data scientists we naturally want free rein to let the data speak as best it can, but as this report from the White House shows, we need to be prepared for some pushback.

The last 10 years have been glorious days for data science and predictive analytics as new algorithmic techniques and faster/cheaper computational platforms have allowed massive improvements in all elements of our lives and business. Whether you identify this as Big Data, predictive analytics, data science, or artificial intelligence, these are all part and parcel of the driving force behind huge advancements in convenience, efficiency, productivity, better use of resources, expanded access to knowledge and opportunity, and profitability.

As data scientists we have enjoyed an ever-expanding array of tools and techniques that are increasingly sensitive and can deliver previously unobtainable results, such as blending voice, text, image, and social media data into our models. And the businesses that we support have incorporated those advances into better and better products and services.

The ‘man on the street’ today would probably credit most of those things to AI (artificial intelligence), but inside the profession we know the landscape is more complex than that.

Our bedrock belief, however, is that, properly interpreted, the numbers don’t lie. ‘Properly interpreted’ is perhaps a big caveat, but all the same, our models are an objective reflection of the data, not opinion. They are ground truth. And our goal has been to find increasingly accurate signals in the data that power improvement in all areas of business, personal experience, and society at large.

If there is a better tool, we want to use it. If the result can be made more accurate, we want to find it. The accuracy and value of our work are judged by adoption, business utility, and observable outcomes.

Unfortunately, those days of unfettered objective freedom to pursue better results with better data, tools, and techniques are coming to an end.

Outrageous, you say! How can this possibly be true? Who will stop me? The answer is government regulation, which has been brewing for some time and over the next several years will increasingly restrict what businesses can and cannot do with data science.

Why is this a Problem Now?

We are about to become victims of our own success. Predictive analytics, whether embedded in company operating systems or in public-facing AI apps, has become so widely adopted that we are now big enough to be a target.

The argument is that purely objective responses guided by machine learning are sensitive only to optimizing their immediate goals of efficiency and profitability but are not sensitive to social goals. Government argues that oversight is necessary to push back against unintentional discrimination and exclusion of certain individuals.

If you are in lending or insurance you already know that certain types of data that identify us as members of protected groups based on factors like sex, age, race, religion, and others can’t be used in building decision models that impact customers. Although this may have resulted in suboptimal decisions about price and risk which equates to higher costs for all of us, our legislators said this was for our own good. For the most part no one has pushed back. As it turns out this was just the camel getting its nose into the tent.
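For readers outside lending and insurance, here is a minimal sketch of what that existing restriction looks like in practice: protected attributes are stripped from each record before it reaches the model. The field names, the `PROTECTED` set, and the `strip_protected` helper are hypothetical illustrations, not taken from any regulation or from the report.

```python
# Hypothetical list of protected attributes; real rules vary by
# jurisdiction and by industry.
PROTECTED = {"sex", "age", "race", "religion"}


def strip_protected(record, protected=PROTECTED):
    """Return a copy of `record` without any protected attributes."""
    return {key: value for key, value in record.items() if key not in protected}


# Made-up applicant record, for illustration only.
applicant = {"income": 52000, "age": 41, "sex": "F", "debt_ratio": 0.31}

# Only the remaining fields would be passed to a scoring model.
features = strip_protected(applicant)
```

In this sketch `features` keeps only `income` and `debt_ratio`, so the model never sees the excluded fields directly.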

Where is this Coming From?

Before you decide that this is some sort of hyperbolic rant, please take a look at this report “Big Data: A Report on Algorithmic Systems, Opportunity, and Civil Rights”. This was published directly from the Executive Office of the President in May and details exactly what wrongs they will seek to redress, and broadly how.

The report starts off by crediting many benefits of Big Data and algorithmic systems in “removing inappropriate human bias and to improve lives, make the economy work better, and save taxpayer dollars” (part of the original 2014 study for which I was a contributor).

By the second paragraph, the real message emerges:

“As data-driven services become increasingly ubiquitous, and as we come to depend on them more and more, we must address concerns about intentional or implicit biases that may emerge from both the data and the algorithms used as well as the impact they may have on the user and society. Questions of transparency arise when companies, institutions, and organizations use algorithmic systems and automated processes to inform decisions that affect our lives, such as whether or not we qualify for credit or employment opportunities, or which financial, employment and housing advertisements we see.”

You Can’t Attack It Unless You Name It

What exactly is it that causes data scientists to be conduits for social injustice? The report has given our sin a name:

“Data fundamentalism—the belief that numbers cannot lie and always represent objective truth—can present serious and obfuscated bias problems that negatively impact people’s lives.”

Anyone with experience in debate knows that you can’t rally the uninformed behind a cause as broad as ‘the social implications of using mathematical algorithms in decision making’. You have to have something catchy, and “Data Fundamentalism” is our new professional fatal flaw.

What Exactly Is Being Proposed

The report offers a wide variety of examples in which specific groups might hypothetically be disadvantaged by data science driven decision making. I emphasize ‘might hypothetically’ since the report offers nothing in the way of evidence that any of this is actually occurring. No wronged person or group has yet sought redress against mathematics in a court of law.

Here are a few condensed examples directly from the study of potential discrimination, their causes, and what restrictions might be necessary to address them.

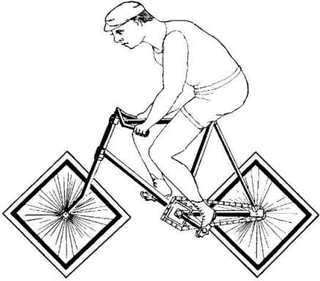

1. GPS Apps Providing Fastest Route to a Particular Destination: This disadvantages groups who do not own smart phones or who rely on public transportation or bicycles. Since routes are defined in terms of roads navigated by cars there is a selection bias discriminating against low income users. The proposed solution is to mandate inclusion of non-automotive routes with constantly updated public transportation schedules.

2. Hiring for Cultural Fit: It is an acknowledged best practice that, aside from specific skills, companies should hire for best cultural fit. This results in employees who are more likely to get along, be retained longer, and be more productive. Elements of cultural fit have been embedded in the resume screening programs that many HR departments use to decide efficiently which candidates to interview. But as the study contends: “In a workplace populated primarily with young white men, for example, an algorithmic system designed primarily to hire for culture fit (without taking into account other hiring goals, such as diversity of experience and perspective) might disproportionally recommend hiring more white men because they score best on fitting in with the culture.” This perpetuates historical bias in hiring. This type of screening might be disallowed unless certain diversity goals were achieved and reported.

3. Any Screening or Scoring System: Any system used for selecting or excluding any consumer, potential student, job candidate, defendant, or the public cannot be secret or proprietary, must be transparent (interpretable) to the affected person, and both the data used and the technique for scoring must be open to direct challenge. Clearly this would extend even to scoring systems that recommend one product or service to a specific customer instead of another. This directly challenges the data used as well as the technique and directly calls out non-interpretable but more accurate techniques like neural nets, SVMs, and ensembles.

4. Simple Recommenders and Search Engines: These may be conduits of discrimination if the advertisements or products shown to a specific individual can be found to be limiting or mis-targeted. The report specifically calls out the potential for discrimination when “algorithms used to recommend such content may inadvertently restrict the flow of information to certain groups, leaving them without the same opportunities for economic access and inclusion as others.”

5. Credit Scoring: The report contends that despite the demonstrable success of credit scoring systems in appropriate pricing and evaluating the risk of loans, they are a primary source of discrimination. This arises because “30 percent of consumers in low-income neighborhoods are “credit invisible” and the credit records of another 15 percent are unscorable.” The proposed solution is to mandate the use of alternative scoring systems not yet developed that might rely on such factors as: “phone bills, public records, previous addresses, educational background, and tax records, … location data derived from use of cellphones, information gleaned from social media platforms, purchasing preferences via online shopping histories, and even the speeds at which applicants scroll through personal finance websites.”

6. College Student Admission: Like the hiring process, many colleges use screening apps designed to identify applicants with the best cultural fit and the highest likelihood of success. These would be disallowed as restricting the access of less qualified groups who after personal review might be admitted.

These are only a half-dozen examples from the many more offered over the 29 pages of the report. In short, however, you can see that they challenge the most fundamental tenets of data science by:

- Restricting the types of data that can be used.

- Requiring the user to be responsible for any perceived inaccuracy in the data or its interpretation.

- Creating a mechanism by which any person impacted however tangentially by a data-driven algorithm must be able to challenge the result.

- Allowing only algorithms that are interpretable regardless of the lack of accuracy and business inefficiency that practice introduces.

- Requiring application developers to directly incorporate the needs of any individual who might use the app regardless of whether that person was part of the target audience.

- Making our recommenders and search engines intentionally inefficient by requiring a deliberate detuning of specificity to provide broader access to information and recommendations.

- Essentially invalidating the use of scoring algorithms in recommending a product or service to a specific customer who might challenge that decision.

Is There Any Good News Here?

Unless you happen to be completely aligned with the goals of total social inclusivity it is difficult to find any good news here. If implemented as stated this would undo years of advancements in model accuracy and the broad use of data. It would also impose very direct costs on a large number of companies whose current data-aided processes would be made intentionally less efficient.

I imagine many readers are wondering how long this will take to actually be regulated. My guess is piecemeal over four or five years. Others may be wondering if they can fly under the radar for an extended period of time. That probably depends on how deep your pockets are, since it is only a matter of time before the plaintiffs’ bar awakens to this new opportunity.

You will notice that many of the arguments made in the study are based on the principle of disparate impact (measurement by equal outcome and not equal opportunity). The new administration coming to Washington in January is probably less anxious to promote regulation and more philosophically inclined toward tests of equal opportunity.
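For readers who have not worked with disparate impact tests, here is a minimal sketch of how one is commonly computed. It assumes the "four-fifths" heuristic from U.S. employee selection guidelines as the threshold, which the report itself does not prescribe. The group labels, the decision data, and the function names are all hypothetical.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the fraction of favorable outcomes for each group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the decision was favorable to the individual.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratios(records, reference_group):
    """Return each group's selection rate divided by the reference group's."""
    rates = selection_rates(records)
    reference_rate = rates[reference_group]
    if reference_rate == 0:
        raise ValueError("reference group has a zero selection rate")
    return {group: rate / reference_rate for group, rate in rates.items()}


# Made-up screening decisions: group A is selected 60 times out of 100,
# group B 30 times out of 100.
decisions = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)

ratios = disparate_impact_ratios(decisions, reference_group="A")

# Assumed threshold: the four-fifths heuristic flags any group whose
# ratio falls below 0.8.
flagged = {group for group, ratio in ratios.items() if ratio < 0.8}
```

With these made-up numbers group B is selected at half the rate of group A, so it falls below the 0.8 threshold and is flagged. Nothing in the calculation asks whether both groups were scored by the same rules, which is the sense in which the test measures outcome rather than opportunity.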

However, now that the services built on our work have such significant impact on society, these reviews and the potential for regulation will not go away. A Tractica research report on 10 AI Trends to Watch in 2017 predicts that there will be an “AI Czar” as part of the federal government by 2020. And this isn’t only a U.S. issue. The report predicts that essentially all of the developed countries will move to centralize the review and control of AI on the same time scale. If you do business internationally you will probably face the same uncoordinated and contradictory regulatory environment that exists broadly for the internet today.

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist and commercial predictive modeler since 2001. He can be reached at:
