They say that the best ideas sometimes come to you while you are in the shower, and this idea of how to explain two important Neural Network concepts – Backpropagation and Stochastic Gradient Descent – actually did come to me as I was trying to set the perfect water temperature for my morning shower.

As I was struggling to adjust the two shower handles – one handle that controlled scalding hot and the other handle that controlled flash freezing – it occurred to me that I was a simple Neural Network (in spite of the “Snow Miser/Heat Miser” song running through my head). I was using Backpropagation to feed the error between my desired water temperature and the actual water temperature back to my two-handled Neural Network, and Stochastic Gradient Descent to determine how much to adjust the weights of those two handles.

While I don’t expect that the average person will ever write their own Neural Network program, the more that you can understand how these advanced technologies work, the better prepared you will be to determine where and how to leverage AI, Machine Learning and Deep Learning technologies to uncover new sources of economic value with respect to customer, product and operational insights.

Review of Neural Network Basic Concepts

Let’s review the basic concepts:

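- Backpropagation is a mathematically based tool for improving the accuracy of a neural network’s predictions. It works out how much each weight contributed to the error between the actual and the expected model results by passing that error backward through the network (the chain rule from calculus), so that the weights can be gradually adjusted until the two match as closely as possible. Backpropagation helps solve the problem of finding the weights that deliver the best results.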

- Stochastic Gradient Descent is a mathematically based optimization algorithm (think first derivative, or slope, in calculus) used to minimize some cost function by iteratively moving in the direction of steepest descent as defined by the negative of the gradient. The “stochastic” part means that each step estimates the gradient from a randomly chosen example or small batch rather than from the entire data set. Gradient descent decides how large an update to make to each weight of our neural network model, using the error information that Backpropagation pushes back to the weights.
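For readers who like to see the idea in code, here is a minimal sketch of the two concepts working together. It is not a real neural network library, just a one-weight, one-bias model that I made up for illustration; the function name `sgd_fit`, the toy data, the learning rate and the number of passes are all assumptions of mine.

```python
import random

def sgd_fit(data, lr=0.05, epochs=200, seed=0):
    """Fit y = w * x + b with stochastic gradient descent (illustrative only)."""
    rng = random.Random(seed)   # fixed seed so the run is repeatable
    data = list(data)
    w, b = 0.0, 0.0             # start with "handles" in an arbitrary position
    for _ in range(epochs):
        rng.shuffle(data)       # the "stochastic" part: visit examples in random order
        for x, y in data:
            prediction = w * x + b
            error = prediction - y   # size and direction of the miss
            # "Backpropagation" for this tiny model: with loss = 0.5 * error**2,
            # the chain rule gives the gradient for each parameter.
            grad_w = error * x
            grad_b = error
            # Gradient descent: step against the gradient, scaled by the learning rate.
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

# Toy data generated from y = 2x + 1 (an assumption made for this example).
data = [(x, 2.0 * x + 1.0) for x in [-1.0, -0.5, 0.0, 0.5, 1.0]]
w, b = sgd_fit(data)
print(f"learned weight={w:.3f}, bias={b:.3f}")
```

In a real network the same chain-rule step is repeated layer by layer, which is where the "back" in Backpropagation comes from.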

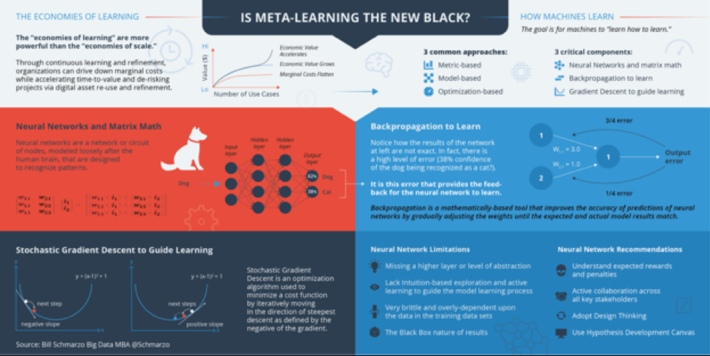

Figure 1: “Neural Networks: Is Meta-learning the New Black?”

My Faucet Lesson of Neural Networks in Greater Detail

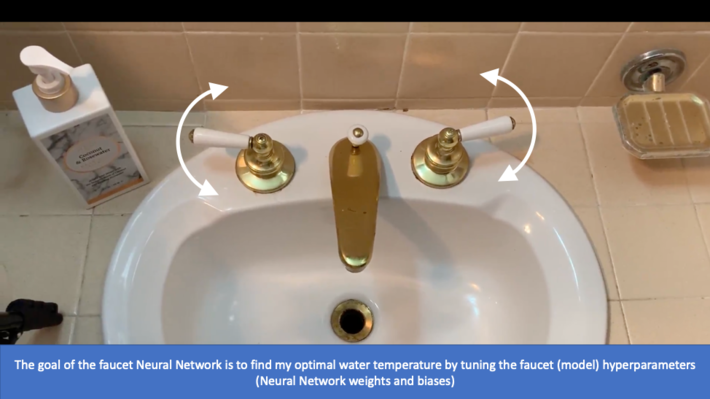

The goal of the faucet Neural Network is to find my optimal water temperature by tuning the faucet (model) parameters (weights and biases). I’ll use my hands to measure the error between actual and desired results and then backpropagate the error (error size and direction) to the faucet neural network to tweak the “weights and biases” of the hot and cold handles. I will use the Neural Network concepts of Backpropagation to feed the error between expected and actual model results back to the model, and Stochastic Gradient Descent to determine how much to tweak the model parameters (faucets) in order to eliminate the error (see Figure 2).

Figure 2: Bathroom Faucet Neural Network and Tweaking Hot and Cold Faucet Weights and Biases

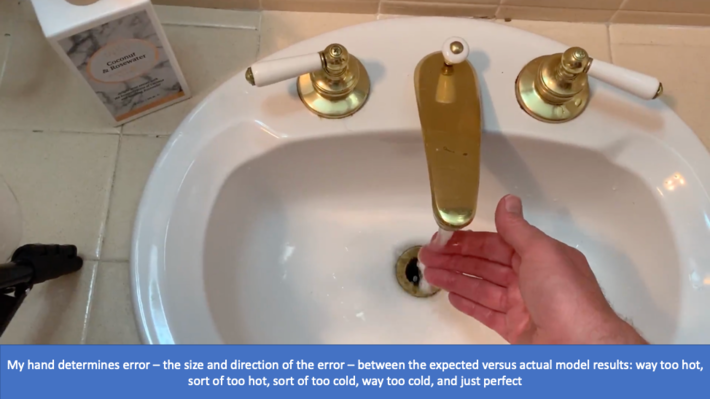

My hand in Figure 3 measures the error – its size and direction – between the expected and actual model results: blazing hot (Heat Miser), mildly hot, slightly hot, slightly cold, mildly cold, chillingly cold (Snow Miser).

Figure 3: Measuring / Determining the Error Between Actual versus Optimal Results

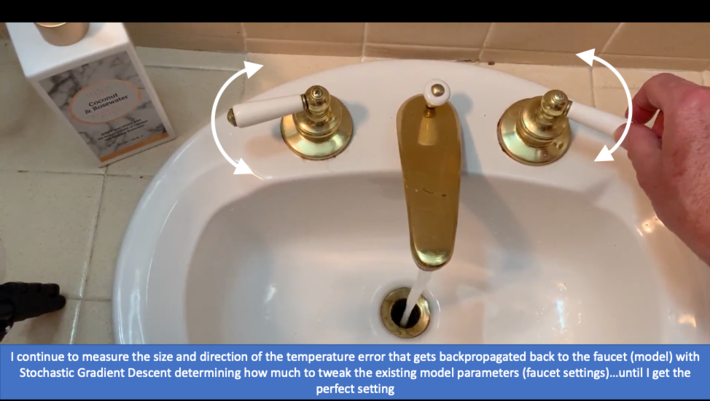

I continue to measure the size and direction of the temperature error, which gets backpropagated to the faucet (model), and use Stochastic Gradient Descent to determine how much to tweak the model parameters (faucet settings) until I get the perfect outcome/result (see Figure 4).

Figure 4: Backpropagating the Error Back to the Neural Network Model in Order to Tweak the Model Weights
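The faucet loop in Figures 2 through 4 can be sketched in Python as well. Treat this as a rough sketch under my own assumptions: the supply temperatures, the target temperature, the starting handle positions and the learning rate are invented numbers, and the model treats the water temperature as a simple weighted sum of the two supplies, which real plumbing does not do. Because there is only one "training example" (my hand under the water), this is plain gradient descent rather than the stochastic variety.

```python
# All constants below are illustrative assumptions, not measurements.
HOT_SUPPLY_C = 60.0    # temperature of the hot line
COLD_SUPPLY_C = 10.0   # temperature of the cold line
TARGET_C = 38.0        # my "perfect shower" temperature

def water_temperature(w_hot, w_cold):
    """Simplified faucet model: a weighted sum of the two supply temperatures."""
    return w_hot * HOT_SUPPLY_C + w_cold * COLD_SUPPLY_C

def tune_faucet(w_hot=0.2, w_cold=0.8, lr=0.0002, max_steps=50, tolerance=0.01):
    """Nudge both handles until the water is within `tolerance` of the target."""
    readings = []
    for _ in range(max_steps):
        temperature = water_temperature(w_hot, w_cold)
        readings.append(temperature)
        error = temperature - TARGET_C   # my hand: too hot (+) or too cold (-)
        if abs(error) < tolerance:
            break
        # Feed the error back to each handle (chain rule on loss = 0.5 * error**2).
        grad_hot = error * HOT_SUPPLY_C
        grad_cold = error * COLD_SUPPLY_C
        # Gradient descent decides how far to turn each handle.
        w_hot -= lr * grad_hot
        w_cold -= lr * grad_cold
    return w_hot, w_cold, readings

w_hot, w_cold, readings = tune_faucet()
for step, temperature in enumerate(readings):
    print(f"reading {step}: {temperature:.2f} C")
```

The learning rate plays the role of how boldly I turn the handles: too large and I swing between Heat Miser and Snow Miser, too small and I stand there shivering while the temperature creeps toward the target.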

Understanding Neural Network Basic Concepts

While the actual mechanics of how a neural network works are much more complicated (lots of math and calculus), the basic concepts are really not that hard to understand. And the more that you can understand how these advanced technologies work, the better prepared you will be to determine where and how to leverage AI, Machine Learning and Deep Learning technologies to uncover new sources of economic value with respect to customer, product and operational insights. And maybe, just maybe, as you are uncovering those new sources of economic value, you can join me in a rousing chorus of “I’m Mr. Heat Miser…”

Source: “30 Famous Christmas Songs Lyrics”

For those interested, here is my video explaining the water faucet neural network (and yes, I know that I need to clean the grout).