Summary: If you want to capitalize on all the amazing advancements in data science, take a look at these two hot growth areas for IoT. It’s likely that a lot of venture capital will be invested here over the next year or two.

A lot of well-deserved attention is being directed at speech, image, and text processing. The tools in this area are the CNNs and RNNs we’ve reviewed in recent articles. We’ll probably continue to exploit and refine these capabilities for several more years, but if you want to get out in front you really need to be looking for the next wave. We think we’ve spotted two areas of emerging opportunity where there’s not yet a lot of competition, but soon will be.

Velocity = Stream Processing + IoT

Of the three original Big Data characteristics (volume, variety, and velocity), today’s frontier action is all about velocity. That doesn’t necessarily mean absolute speed, though there is some of that. It means the volume of data in motion from sensors, and our ability to make use of it through streaming platforms like Spark and through specialty analytics, particularly those with a renewed focus on time series (see Recurrent Neural Nets).

Three Characteristics of Emerging IoT

Three emerging technologies are enabling cutting-edge IoT. We think opportunities that combine all three are the best place to dig right now.

- Bi-directional Streaming Platforms. So far the field has focused mostly on one-directional platforms that receive and process the data and then raise alerts through data visualizations or messages. Focus instead on bi-directional platforms that send commands directly back to the sensor source in real time, with no human required to filter or interpret the action.

- Very Smart Sensors. This is where our cutting-edge developments in CNNs and RNNs come into play. Although these NNs are difficult to train, once trained they can be migrated directly onto chip-enabled sensors so that the processing, and potentially the response, can take place without even sending the data back to the platform. These actions can be extremely complex, and the platform can be relegated to reporting what the sensor has already done and to refreshing and developing future models. This will be especially true as we move forward with Spiking Neural Nets, with their very low power consumption and extraordinarily high processing capability in tiny form factor chips.

- Smart Feedback Mechanisms. By smart we mean making decisions that are faster or more consistently correct than a human could make. Some of this will be mechanical responses, like detecting a machine problem and either shutting the machine down or modifying its behavior. It’s the human side of this, however, where your focus should be.
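To make the bi-directional idea above concrete, here is a minimal sketch of a loop that reads sensor data and sends a command straight back to the source with no human in between. Everything in it is an assumption made for illustration: the `Reading` and `Command` types, the temperature thresholds, and the `send` callback are hypothetical and do not come from any particular streaming platform.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass
class Reading:
    """One hypothetical sensor measurement arriving on the stream."""
    sensor_id: str
    temperature_c: float


@dataclass
class Command:
    """A hypothetical instruction sent back to the sensor source."""
    sensor_id: str
    action: str


def decide(reading: Reading,
           throttle_above_c: float = 75.0,
           shutdown_above_c: float = 90.0) -> Optional[Command]:
    """Map a reading to a command, or None when no action is needed.

    The thresholds are illustrative assumptions, not recommended values.
    """
    if reading.temperature_c >= shutdown_above_c:
        return Command(reading.sensor_id, "shutdown")
    if reading.temperature_c >= throttle_above_c:
        return Command(reading.sensor_id, "throttle")
    return None


def run_loop(readings: Iterable[Reading],
             send: Callable[[Command], None]) -> int:
    """Process readings in order and send commands back immediately.

    Returns the number of commands sent.
    """
    sent = 0
    for reading in readings:
        command = decide(reading)
        if command is not None:
            send(command)  # the "return path" of a bi-directional platform
            sent += 1
    return sent
```

In a real deployment `readings` would be a live stream and `send` would write to whatever return channel the platform offers. A very smart sensor could run something like `decide` on the chip itself and only report the result back to the platform.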

The IoT Ecosystem

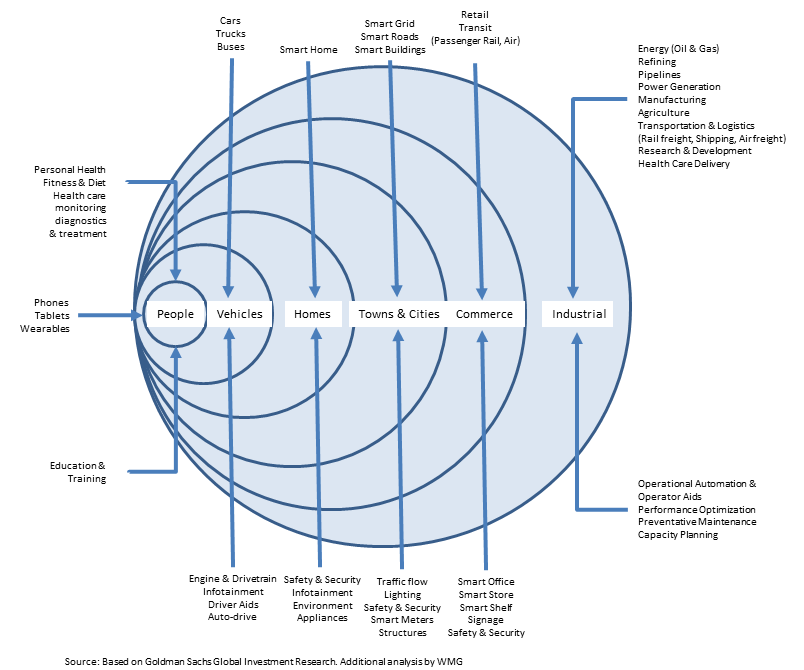

Enough folks in data science, business, and the investment community have explained the universe of IoT that we now have pretty good agreement on what it looks like. Specifically, the one graphic you’ll see over and over is this one from Goldman Sachs.

It’s not clear why they represented these as off-center concentric circles, or why ‘people’ is the smallest circle, but it will serve as a starting point.

When I look at these opportunities, however, I see a bigger and much more fundamental split between IoT applied to things and IoT applied to people. That brings us back to my third point above about focusing on human feedback mechanisms.

Sensors on Things

Five of the six rings in the Goldman Sachs diagram relate to sensors on things, be they airplanes, streets, machinery, or cars. IoT came into being on machinery long before Hadoop.

Through the 80s and 90s the biggest industrials, utilities, and oil and gas companies implemented SCADA systems (supervisory control and data acquisition) that connected machines and produced data on which early optimization and preventive maintenance models were built.

It should come as no surprise then that the large industrial enterprises (GE, Exxon, Caterpillar, and the automotive manufacturers, among others) were locked and loaded when Hadoop and stream processing took the blinders off these early systems.

My personal observation is that it will be very difficult for venture-funded companies to compete in the ‘sensors on things’ world. First, companies like GE have reframed themselves as data and analytics companies specifically to attack these opportunities with massive resources.

Second, even where venture-sized companies have achieved some success (I’m thinking of Nest and some of the home security and video surveillance startups), they have quickly become acquisitions for the majors.

Perhaps being quickly acquired isn’t too bad a deal for entrepreneurs, but it’s likely those founders are now on long-term earn-out contracts as full-time employees of large corporations. That may not be exactly what those entrepreneurs had envisioned.

The Goldmine in Human Wearables

The greatest area of opportunity, diversity, investment, and technological advance is in the application of sensors on humans and the manner in which we provide our IoT feedback to the human user. After all, it’s the resulting action, the feedback, that is the actual value in IoT.

Where human wearables are concerned, our current feedback mechanisms are pretty archaic. For the most part they are visual displays of numbers or diagrams, or simple text messages. Here are two specific directions where big opportunities lie in building vastly smarter and better feedback devices.

Enterprise Augmented Reality

Although human wearables immediately bring to mind the consumer market, there is substantial opportunity in enterprise applications. The first of many ‘smart glasses’ are already in use. Some will look like Google Glass; some will look more like this DAQRI Smart Helmet. In this scenario the heads-up display is helping the worker guide a robotic welder. A remote expert, using cameras and the heads-up display, coaches the operator on exactly where and how to make the weld.

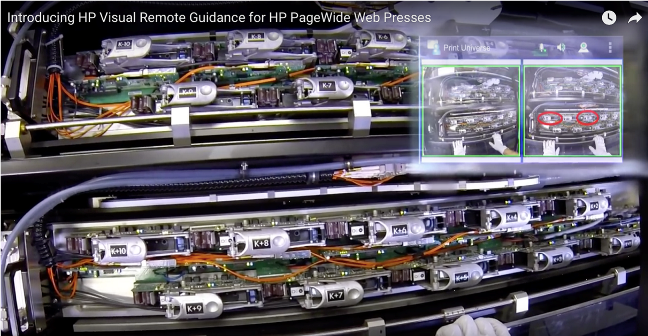

Another current application that is truly augmented reality is the overlay of schematics or instructions, via the heads-up display, onto the actual piece of tech equipment being serviced. This is the HP MyRoom Virtual Assistant used with Google Glass.

What should jump out at you is that neither of these human feedback mechanisms uses real artificial intelligence. The first uses a remote human expert and the second uses a static database of visual overlays and instructions.

It should be a small step for some smart company to wed the image/video and text/speech processing capabilities of CNNs and RNNs (transferred onto a chip within the heads-up display) with a decision tree of diagnostic and corrective actions. Such a system could guide the technician autonomously in real time: the technician takes an action, the IoT system evaluates it, and the system then points to the next most appropriate action based on the result.
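As a rough sketch of the decision-tree half of that idea, the snippet below walks a technician through a tiny diagnostic tree, choosing each next instruction from the outcome of the previous one. The tree contents, the node names, and the `"pass"`/`"fail"` outcome labels are all hypothetical. In the system imagined above the outcomes would come from on-chip image or speech models, whereas here they are simply passed in as a list.

```python
from typing import Dict, List, Optional

# Hypothetical diagnostic tree: each node holds an instruction and the
# name of the next node for a "pass" or "fail" outcome (None = finished).
Node = Dict[str, Optional[str]]

TREE: Dict[str, Node] = {
    "check_power": {
        "instruction": "Confirm the unit has power.",
        "pass": "check_fuse",
        "fail": "reconnect_power",
    },
    "reconnect_power": {
        "instruction": "Reconnect the power supply.",
        "pass": "check_fuse",
        "fail": None,
    },
    "check_fuse": {
        "instruction": "Inspect the main fuse.",
        "pass": None,
        "fail": "replace_fuse",
    },
    "replace_fuse": {
        "instruction": "Replace the main fuse.",
        "pass": None,
        "fail": None,
    },
}


def guide(tree: Dict[str, Node], start: str, outcomes: List[str]) -> List[str]:
    """Return the instructions shown, given the evaluated outcome of each step."""
    shown: List[str] = []
    node: Optional[str] = start
    for outcome in outcomes:
        if node is None:
            break  # the tree finished before the outcomes ran out
        shown.append(str(tree[node]["instruction"]))
        node = tree[node][outcome]  # next step depends on the evaluation
    if node is not None:
        # Show the step the technician should perform next.
        shown.append(str(tree[node]["instruction"]))
    return shown
```

For example, `guide(TREE, "check_power", ["fail", "pass", "fail"])` walks from the power check through reconnecting power and inspecting the fuse, and ends by showing the fuse replacement step.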

Human Wearables that Change Behavior

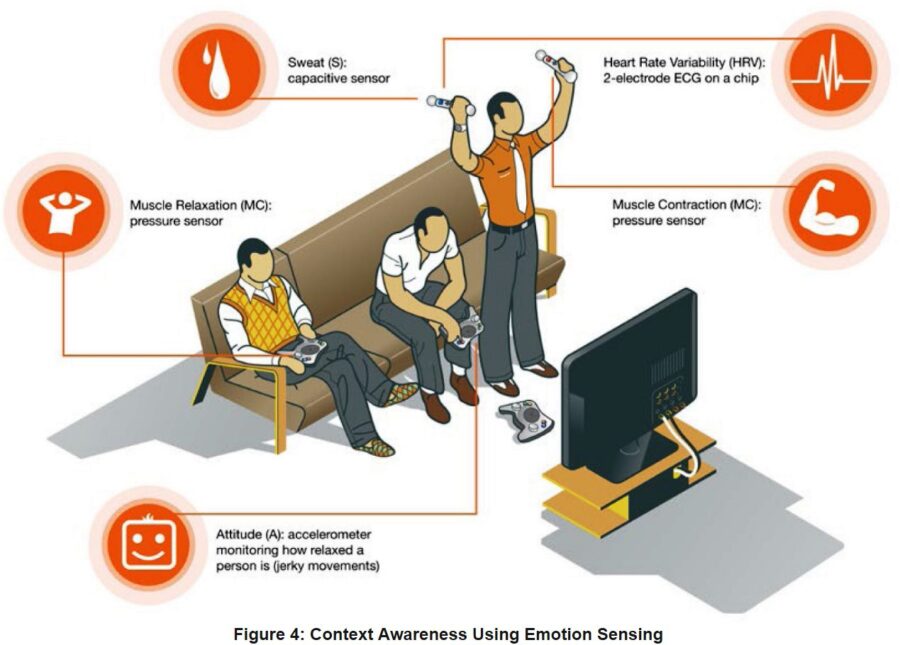

On the consumer side, IoT systems shouldn’t just report facts for us to interpret. They should actually be acting to change our behavior. In August we wrote in ‘These IoT Sensors Want to Know How You Feel – And Maybe Even Change Your Mood’ about a driving game that uses sensors in the controller plus your performance in the game to decide if you are comfortable with the degree of difficulty the game is presenting. The goal is to keep us playing the game. If it’s too easy or too hard we’re likely to quit. So if the algorithms decide we’re having a hard time it will adjust the game settings to make it a little easier, and conversely a little harder if it seems too easy.
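The adjustment rule in that game can be sketched in a few lines. This is only an illustration under stated assumptions: the `strain` score (a number from 0 to 1 that stands in for whatever the controller sensors and game performance indicate), the thresholds, and the step size are all hypothetical, and the actual game's algorithm is not described in enough detail here to reproduce.

```python
def adjust_difficulty(difficulty: float,
                      strain: float,
                      low: float = 0.3,
                      high: float = 0.7,
                      step: float = 0.1) -> float:
    """Nudge game difficulty toward the player's comfort zone.

    difficulty: current setting in [0, 1].
    strain: hypothetical 0-1 score, where high means the player is struggling.
    low, high, step: illustrative assumptions, not tuned values.
    """
    if strain > high:
        difficulty -= step  # player is having a hard time: ease off
    elif strain < low:
        difficulty += step  # player is coasting: make it a little harder
    # Keep the setting inside its valid range.
    return min(1.0, max(0.0, difficulty))
```

Calling this once per lap or per level gives a simple closed loop: the sensors estimate how the player is doing, and the system acts on that estimate instead of just reporting it.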

Another example: it’s possible to use the rate, steadiness, and frequency of keystrokes on your keyboard to determine something about your mood, and it’s a small step to use that feedback to present you with information or an environment that would calm or excite you.
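As a sketch of the first half of that pipeline, the function below turns a list of keystroke timestamps into two simple features: typing rate and steadiness (how much the gaps between keystrokes vary). The feature names and the choice of standard deviation as the steadiness measure are assumptions made for illustration. Mapping these numbers to a mood would need a trained model, which is deliberately left out.

```python
from statistics import mean, pstdev
from typing import Dict, List


def keystroke_features(timestamps_s: List[float]) -> Dict[str, float]:
    """Summarize typing rhythm from keystroke times given in seconds.

    Expects timestamps in increasing order. Returns hypothetical features
    that a downstream mood model could consume.
    """
    if len(timestamps_s) < 2:
        raise ValueError("need at least two keystrokes")
    duration = timestamps_s[-1] - timestamps_s[0]
    if duration <= 0:
        raise ValueError("timestamps must span a positive duration")
    # Gaps between consecutive keystrokes.
    gaps = [b - a for a, b in zip(timestamps_s, timestamps_s[1:])]
    return {
        "keys_per_second": len(gaps) / duration,  # rate
        "mean_gap_s": mean(gaps),
        "gap_stdev_s": pstdev(gaps),              # lower means steadier
    }
```

Perfectly even typing gives a `gap_stdev_s` of zero, while bursts and pauses push it up.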

And still another. My car already has a function where it tries to determine if I am getting drowsy from some combination of steering inputs and other factors. If it decides I am too drowsy it will alert me and may not let me re-engage the cruise control unless I take specific actions.

In retail and ecommerce it’s widely known that mood influences buying behavior and our openness to new experiences or products. Sensors that detect mood could be worn by the person, or they could be non-contact sensors like cameras and microphones.

IoT systems combined with wearable sensors and the natural contact objects in our environment, like keyboards and automobile controls, can take direct action to change or at least directly influence our behavior.

If you’re looking for your next venture idea, try one of these two.

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist and commercial predictive modeler since 2001. He can be reached at: