Summary: Just where are we in the Age of AI, where are we going, and what happens when we get there?

When things are changing fast, sometimes it’s necessary to take a step back and see where you are. It’s very easy to get caught up in the excitement over the details. The individual data science technologies that underlie AI are all moving forward on different paths at different speeds, but all of those speeds are fast. So before you change careers or decide that your business ‘needs some of that AI’, let’s fly up and see if we can make out a larger pattern that will help us understand where we are and where we’re going.

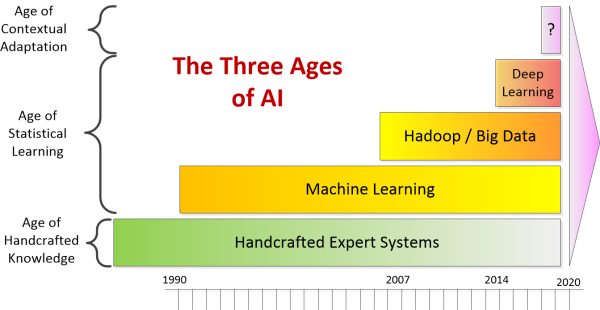

The Three Ages of AI

We tend to think of AI as something new, particularly the new technologies and techniques linked to deep learning. However, we’ve been pursuing AI for decades, and it seems illogical to exclude our past successes just because the technology has evolved.

As I have struggled to explain AI to others, I have continued to look for some dividing line between the predictive analytics that we’ve been practicing for quite a while and what the press characterizes as AI today. Recently I came across an explanation by John Launchbury, the Director of DARPA’s Information Innovation Office, who has a broader and longer-term view. He divides the history and the future of AI into three ages:

1. The Age of Handcrafted Knowledge

2. The Age of Statistical Learning

3. The Age of Contextual Adaptation

Launchbury’s explanation helped me greatly, but while the metaphor of ‘ages’ is useful, it creates the false impression that one age ends and the next begins as a kind of replacement. Instead I see this as a pyramid, where what went before continues on and becomes a foundation for the next. This also explicitly means that even the oldest AI technologies can still be useful and are in fact still in use.

I’ve also subdivided Launchbury’s ‘Age of Statistical Learning’, our current age, into phases that I’m sure you will recognize as major leaps forward and therefore worthy of separate explanation.

1. The Age of Handcrafted Knowledge

The first age is characterized by what today we would call ‘expert systems’. These systems augment human intelligence by distilling large bodies of knowledge into elaborate decision trees built by teams of subject matter experts (SMEs). Good examples are TurboTax, or logistics programs that do scheduling. These existed at least as far back as the 1980s and probably before.
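To make the idea of a handcrafted decision tree concrete, here is a minimal sketch of the kind of logic an expert system encodes. The shipping scenario, the field names, and every threshold are hypothetical, standing in for rules a team of SMEs would write by hand; no real product's logic is implied.

```python
from dataclasses import dataclass


@dataclass
class Shipment:
    weight_kg: float
    distance_km: float
    perishable: bool


def choose_mode(s: Shipment) -> str:
    """Hand-written decision tree (hypothetical rules and thresholds).

    Every branch was chosen by a person; nothing here is learned from data.
    """
    if s.perishable:
        # Rule: long-haul perishables fly, short-haul ones go refrigerated.
        return "air" if s.distance_km > 500 else "refrigerated truck"
    if s.weight_kg > 1000:
        # Rule: heavy freight goes by rail only over longer distances.
        return "rail" if s.distance_km > 300 else "truck"
    # Fallback rule for everything the experts did not single out.
    return "parcel"


print(choose_mode(Shipment(200, 800, True)))  # air
```

Changing this system's behavior means a person edits the rules, and any case the authors did not anticipate simply falls through to whichever branch happens to match. That brittleness is the weakness the article attributes to early rule-based autonomous vehicles.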

While our ability to use machine learning statistical algorithms like regression, SVMs, random forests, and neural nets expanded rapidly starting roughly in the 1990s, the application of these handcrafted systems didn’t entirely go away. Launchbury recently described the successful application of one such system in defending against cyberattacks. And prior to about 2004, similar systems were actually at the heart of early autonomous vehicles (which largely failed because the rules didn’t account for all the variations in the real world).

Launchbury rates the systems of this era as reasonably good on reasoning but only for very narrowly defined problems, with no learning capability and poor handling of uncertainty.

2. The Age of Statistical Learning

This second age is where we find ourselves today. While Launchbury tends to focus on the advances in deep learning, in fact this age has been with us for as long as we have been using computers to find signals in the data. It began decades ago, gained traction in the 1990s, and continued to expand with its ability to handle new data types, volumes, and even streaming data.

The Age of Statistical Learning began with explosive growth in our ability to find signal in the data thanks to our growing tool chest of ML techniques like regression, neural nets, random forests, SVMs, and GBMs.

This is a foundational data science practice that’s not going away and is used to answer all of those behavioral questions about consumers (why they come, why they stay, why they go), transactions (is it fraudulent), devices (is it about to fail), and data streams (what will that value be 30 days from now). This augmentation of human intelligence is part of the AI journey that continues today.
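Unlike handcrafted rules, a statistical learner estimates its decision rule from labeled examples. As a minimal sketch, assuming a single made-up feature (the fraction of the past year since a customer's last purchase) and a hypothetical churn label, the code below fits a logistic regression by plain batch gradient descent. The data and the learning settings are illustrative only.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, lr=1.0, epochs=5000):
    """Fit p(y=1 | x) = sigmoid(w*x + b) by batch gradient descent on mean log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors for the current parameters (gradient of log loss w.r.t. z).
        errs = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        # Simultaneous update of slope and intercept.
        w -= lr * sum(e * x for e, x in zip(errs, xs)) / n
        b -= lr * sum(errs) / n
    return w, b


# Hypothetical data: x = fraction of the year since last purchase, y = 1 if the customer churned.
xs = [0.1, 0.2, 0.3, 0.7, 0.8, 0.9]
ys = [0, 0, 0, 1, 1, 1]
w, b = fit_logistic(xs, ys)
print(f"P(churn | x=0.85) = {sigmoid(w * 0.85 + b):.2f}")
```

Nobody wrote a rule like "churn if more than half a year has passed"; the boundary comes out of the data. Pointed at richer features, the same workflow answers the fraud, failure, and forecasting questions listed above.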

There have been at least two additional major revolutions within this age that dramatically enhanced our capability. The first was Hadoop and Big Data. Now we had massive parallel processing and ways of storing and querying big, unstructured, and fast-moving data sets. This revolution began in 2007, when Hadoop first became open source, and of course it hasn’t ended yet.
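The programming model that Hadoop popularized, MapReduce, splits a job into two steps. A map step runs independently on each chunk of data, and a reduce step combines the intermediate results for each key. The sketch below simulates the classic word-count example on one machine, with a thread pool standing in for a cluster. The function names are illustrative, and this is not Hadoop's own API.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from itertools import chain


def map_phase(split: str) -> list[tuple[str, int]]:
    # Emit a (word, 1) pair for every word in one input split.
    return [(word.lower(), 1) for word in split.split()]


def shuffle(pairs) -> dict[str, list[int]]:
    # Group values by key, as the framework does between map and reduce.
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    # Combine all values for one key.
    return key, sum(values)


def word_count(splits: list[str]) -> dict[str, int]:
    # Map tasks run in parallel; on a real cluster each would run near its data.
    with ThreadPoolExecutor() as pool:
        mapped = pool.map(map_phase, splits)
        groups = shuffle(chain.from_iterable(mapped))
    return dict(reduce_phase(k, v) for k, v in groups.items())


print(word_count(["big data big", "data fast"]))
```

Because each map call depends only on its own split, adding machines lets the framework process more data in the same time. That property is what made massive, unstructured data sets practical.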

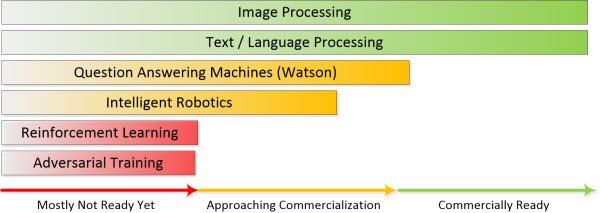

The second mini-revolution was the rise of the Modern AI Tool Set, made up of these six technologies:

- Natural Language Processing

- Image Recognition

- Reinforcement Learning

- Question Answering Machines

- Adversarial Training

- Robotics

With minor exceptions, these can be grouped into a single category that depends on deep learning. But if you look at the specifics of how that deep learning works and how those deep neural nets operate, you quickly realize that these are a mix of cats and dogs.

There is very little in common among convolutional neural nets, recurrent neural nets, generative adversarial neural nets, evolutionary neural nets used in reinforcement learning, and all of their variants. And these in turn have even less in common with Question Answering Machines (Watson), robotics, or variations on reinforcement learning that don’t use deep neural nets.

Perhaps we should call this the Era of Featureless Modeling, since what these techniques do have in common is that they generate their own features. You still have to train against known, labeled examples, but you don’t have to fill in the columns with predefined variables and attributes. They also all need massive parallel processing across extremely large computational arrays, often requiring specialized chips like GPUs and FPGAs to get anything done on a human time scale.

So the important distinction is that this second age of AI extends back many decades and has had three major irruptions of new technologies so far (Machine Learning, Big Data/Hadoop, and Featureless Modeling), yet it remains the Age of Statistical Learning, which continues to march on.

Launchbury says that where we have ended up so far is with systems that have very advanced and nuanced classification and prediction capabilities but no contextual understanding and minimal reasoning ability. As our techniques have advanced, the volume of training data they require has become a hindrance (this is not true of our still valuable and valid predictive analytics techniques). But problems that we could not solve early in this phase, like self-driving cars, winning at increasingly sophisticated games, and image, text, and language processing, are now within reach.

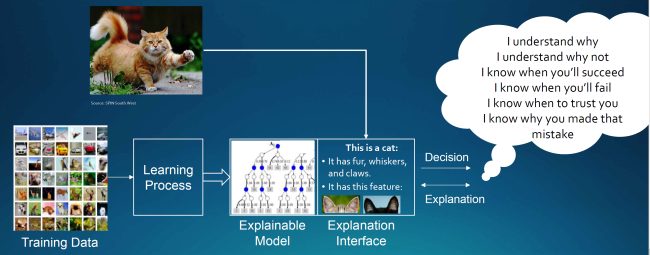

3. The Age of Contextual Adaptation

What comes next? Launchbury says we need to solve two problems that have arisen in our current Age of Statistical Learning.

Models That Explain Their Reasoning: Although our deep neural nets are good at classifying things like images, they are completely inscrutable in how they do so. What is needed are systems that can both classify and explain. By understanding the reasoning, we can validate the correctness of the process.
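An inherently transparent model shows what "classify and explain" can look like, because each input's contribution to the decision can be read off directly. The sketch below assumes a simple linear scoring model with hypothetical feature names and weights. It is not a technique for explaining deep neural nets, which is the much harder problem described here. It only illustrates the kind of output such an explanation would provide.

```python
def explain(weights: dict[str, float], bias: float, features: dict[str, float]):
    """Score with a linear model and report each feature's contribution.

    Returns (score, contributions ranked by absolute size).
    """
    # In a linear model, each feature's contribution is simply weight * value.
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical fraud-scoring weights and one transaction's feature values.
weights = {"amount_zscore": 1.5, "new_device": 2.0, "account_age_years": -0.5}
score, reasons = explain(weights, -1.0, {"amount_zscore": 2.0, "new_device": 1.0, "account_age_years": 4.0})
print(score, reasons)
```

Because the score is just the sum of the listed contributions, a reviewer can check whether the model is flagging a transaction for sensible reasons. That is the validation step the paragraph calls for.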

Generative Models: These are models that learn from underlying context, for example a learned model of the strokes needed to form each letter of the alphabet, instead of brute-force classification based on lots of examples of bad handwriting. Generative models, which we’re experimenting with today, promise to dramatically reduce the amount of training data required.

Given these characteristics, AI systems in this third age would be able to use contextual models to perceive, learn, reason, and abstract. They could take learning from one system and apply it to a different context.

The Complete View

The beginning of a new age does not mean the abrupt end of the previous one. Although some techniques and capabilities may diminish in usefulness, it is unlikely they will completely go away. For example, the massive computational power required for the newest techniques, as well as the complexity of development and training, will constrain them to high-value problems for some time to come.

In other cases, like the Age of Contextual Adaptation, it’s likely that we’ll have to wait for a new generation of chips that more closely mimic the human brain. These third-generation neural nets, called Neuromorphic or Spiking Neural Nets, will use chips that are in the earliest stages of development today.
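Spiking neural nets differ from the nets discussed above because their neurons communicate through discrete spikes over time rather than through continuous activations. As a rough illustration, the sketch below simulates a single leaky integrate-and-fire neuron, a common simplified spiking-neuron model. It does not model any particular neuromorphic chip, and all parameter values are arbitrary assumptions.

```python
def lif_spike_times(current: float, steps: int = 200, dt: float = 1.0, tau: float = 10.0,
                    v_rest: float = 0.0, v_threshold: float = 1.0, v_reset: float = 0.0) -> list[int]:
    """Simulate a leaky integrate-and-fire neuron driven by a constant input current.

    Returns the time steps at which the neuron spikes (all parameters are illustrative).
    """
    v = v_rest
    spikes = []
    for t in range(steps):
        # Euler step of tau * dv/dt = -(v - v_rest) + current: leak toward rest, integrate input.
        v += dt * (-(v - v_rest) + current) / tau
        if v >= v_threshold:
            # Fire a spike and reset the membrane potential.
            spikes.append(t)
            v = v_reset
    return spikes


print(len(lif_spike_times(0.5)), len(lif_spike_times(2.0)), len(lif_spike_times(3.0)))
```

A weak input leaks away without the neuron ever firing, while stronger inputs produce more frequent spikes, so information is carried in the timing and rate of events. Running this kind of event-driven computation efficiently is what neuromorphic chips are meant to do.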

Where We Are in the Second Age of Statistical Learning Today

Of the three chapters in our current age, most attention is being directed to what’s new: deep learning, reinforcement learning, and the rest of the six technologies we discussed above that make up this phase.

This is an evolutionary struggle that is starting to bear fruit, but most of these new developments are not yet ready for prime time. While we can see directionally where all this is headed, only two of these six (maybe three) are at a point where they can be reliably put into commercial operation: image processing and text/speech processing, and maybe some limited versions of Watson-like QAMs.

When you try to bolt these together, they hang together only loosely, and integrating these different technologies is still one of the biggest challenges. We’ll work it out; they’re just not there yet.

We’ll get there, and even to the Third Age. Before we graduate from this one, however, there may be other evolutions or revolutions that we haven’t anticipated.

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist and commercial predictive modeler since 2001. He can be reached at: