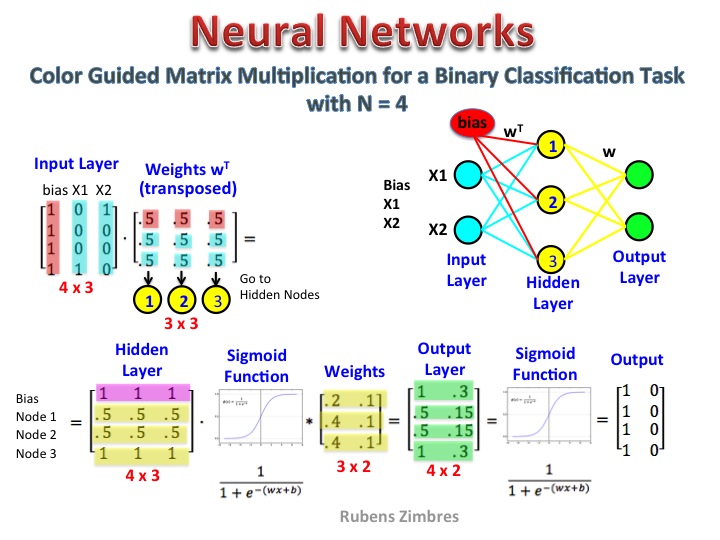

This post is the outcome of my studies in neural networks and sketches an application of the backpropagation algorithm. The example is a binary classification task with N = 4 cases, using a neural network with a single hidden layer. Sigmoid activation functions follow both the hidden layer and the output layer. To make the figure easier to follow, the matrices use the same colors as the corresponding parts of the network structure (bias, input, hidden, output).
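For readers who prefer code to matrices, here is a minimal sketch of the same kind of setup in plain Python. It is not the exact calculation from the figure: the two inputs, the three hidden units, the XOR-style targets, the cross-entropy loss, the learning rate, and all function names (`forward`, `loss`, `train`) are my assumptions for illustration.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, W1, b1, W2, b2):
    # Hidden layer: weighted sum plus bias, then sigmoid, one value per hidden unit.
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    # Output layer: a single sigmoid unit giving the predicted probability.
    p = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
    return h, p

def loss(X, y, W1, b1, W2, b2):
    # Mean binary cross-entropy over all cases (assumed loss, not from the post).
    total = 0.0
    for x, t in zip(X, y):
        _, p = forward(x, W1, b1, W2, b2)
        total -= t * math.log(p) + (1 - t) * math.log(1 - p)
    return total / len(X)

def train(X, y, n_hidden=3, lr=0.5, epochs=2000, seed=0):
    rng = random.Random(seed)
    n, n_in = len(X), len(X[0])
    W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    W2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b2 = 0.0
    for _ in range(epochs):
        gW1 = [[0.0] * n_in for _ in range(n_hidden)]
        gb1 = [0.0] * n_hidden
        gW2 = [0.0] * n_hidden
        gb2 = 0.0
        for x, t in zip(X, y):
            h, p = forward(x, W1, b1, W2, b2)
            # Output error: for sigmoid + cross-entropy this is simply p - t.
            d_out = p - t
            gb2 += d_out / n
            for j in range(n_hidden):
                # Propagate the error back through W2 and the hidden sigmoid.
                d_hid = d_out * W2[j] * h[j] * (1 - h[j])
                gW2[j] += d_out * h[j] / n
                gb1[j] += d_hid / n
                for i in range(n_in):
                    gW1[j][i] += d_hid * x[i] / n
        # Gradient descent step on every weight and bias.
        for j in range(n_hidden):
            W2[j] -= lr * gW2[j]
            b1[j] -= lr * gb1[j]
            for i in range(n_in):
                W1[j][i] -= lr * gW1[j][i]
        b2 -= lr * gb2
    return W1, b1, W2, b2

# Hypothetical data: N = 4 cases, two inputs each, XOR-style binary targets.
X = [[0, 0], [0, 1], [1, 0], [1, 1]]
y = [0, 1, 1, 0]

params = train(X, y)
print("mean loss after training:", loss(X, y, *params))
```

The nested loops play the role of the matrix products in the figure: `d_out` is the error at the output unit, and `d_hid` is that error pushed back through the output weights and the derivative of the hidden sigmoid, `h * (1 - h)`.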
