Once beyond imagination, autonomous vehicles (AVs) have become a reality. Auto and technology giants such as Tesla, BMW, Google, Volkswagen and Volvo have been the front runners in introducing autonomous transportation to take the pressure off human drivers. Meanwhile, the AV market is estimated to grow at a compound annual growth rate (CAGR) of about 40% over the next six years.

Before going further, it is worth knowing how vehicles can function autonomously. An AV is equipped with a vision system, LIDAR- or radar-based sensing, and multiple neural networks trained on data with machine learning algorithms. The multi-layer neural networks enable the car to detect objects and recognize them. The vehicle then uses this processed data to work out its next move based on the environment. It may sound simple; however, from object detection and recognition to deciding the next move, AVs rely on neural network outputs, a 360-degree view of the environment built through 3D point cloud segmentation, and object detection using computer vision.

AV levels and ADAS or Advanced Driver Assist System

In some countries, such as the US, self-driving vehicles are regulated according to the technical capabilities they are equipped with. An Advanced Driver Assist System (ADAS) is a driving support mechanism and is the key indicator of the level of autonomy an AV offers. The following autonomy levels are delineated under ADAS:

- Level 1 (DA or driver assistance) includes adaptive cruise control, emergency brake assist, automatic emergency brake assist, lane-keeping, and lane centering.

- Level 2 (POA or partial operation automation) includes highway assist, autonomous obstacle avoidance, and autonomous parking.

- Level 3 (CA or conditional automation) includes highway driving, driver initiated lane change, and automated valet parking.

- Level 4 (AOA or advanced operation automation) means the vehicle sustains the dynamic driving task (DDT) on its own, but only within a limited operational design domain.

- Level 5 is full autonomy: the vehicle drives itself with no human intervention.
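As a rough illustration, the levels listed above can be held in a small lookup table so that software can ask which level first offers a given feature. This is only a sketch: the names `LEVEL_FEATURES` and `min_level_for` are made up for this example, and the feature strings are simply copied from the list.

```python
# Hypothetical lookup table built from the list above: level -> (name, example features).
LEVEL_FEATURES = {
    1: ("driver assistance", [
        "adaptive cruise control", "emergency brake assist",
        "automatic emergency brake assist", "lane-keeping", "lane centering",
    ]),
    2: ("partial operation automation", [
        "highway assist", "autonomous obstacle avoidance", "autonomous parking",
    ]),
    3: ("conditional automation", [
        "highway driving", "driver initiated lane change", "automated valet parking",
    ]),
    4: ("advanced operation automation", []),  # no example features listed above
    5: ("full autonomy", []),                  # no example features listed above
}


def min_level_for(feature):
    """Return the lowest level whose feature list contains `feature`, or None."""
    for level in sorted(LEVEL_FEATURES):
        if feature in LEVEL_FEATURES[level][1]:
            return level
    return None
```

For instance, `min_level_for("autonomous parking")` looks the feature up in the Level 2 entry, while an unknown feature yields `None`.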

Deep learning: Algorithms, neural network mesh with ADAS inclusion

Object detection, recognition, image localization and prediction of the next movement form the core of autonomous driving. Localization, which lets the vehicle understand its own position on the ground, remains a challenging task. This challenge is addressed with satellite-based navigation systems and inertial navigation systems. ADAS handles long-range detection, while CNNs play a critical role in lane detection, pedestrian detection and redundant object detection as well.
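To give a feel for how satellite and inertial navigation can complement each other, here is a minimal one-dimensional Kalman filter sketch. It assumes the inertial system supplies a velocity for dead reckoning and the satellite receiver supplies occasional position fixes; the class `Kalman1D`, the function `fuse` and all noise values are illustrative assumptions, not a description of any production localization stack.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    x: float  # estimated position along the road (m)
    p: float  # variance of that estimate (m^2)

    def predict(self, velocity, dt, process_var):
        """Dead-reckon forward using an inertial velocity estimate."""
        self.x += velocity * dt
        self.p += process_var  # uncertainty grows while we only integrate

    def update(self, gnss_pos, gnss_var):
        """Correct the prediction with a satellite position fix."""
        k = self.p / (self.p + gnss_var)  # Kalman gain, between 0 and 1
        self.x += k * (gnss_pos - self.x)
        self.p *= 1.0 - k                 # uncertainty shrinks after a fix
        return k


def fuse(velocities, gnss_fixes, dt=0.1, process_var=0.05, gnss_var=4.0):
    """Run the filter over paired samples; a fix of None means GNSS dropout.

    Assumes the first fix is available, since it seeds the filter.
    """
    kf = Kalman1D(x=gnss_fixes[0], p=gnss_var)
    track = []
    for v, z in zip(velocities, gnss_fixes):
        kf.predict(v, dt, process_var)
        if z is not None:
            kf.update(z, gnss_var)
        track.append(kf.x)
    return kf, track
```

When a fix is missing, the estimate keeps moving on inertial data alone and its variance grows; each new fix pulls the estimate back and shrinks the variance again.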

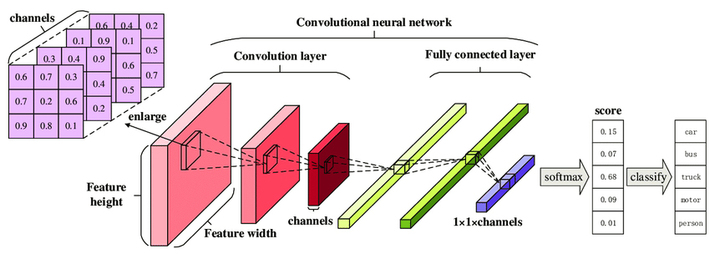

The CNNs or convolutional neural network are powered by machine learning algorithms that work and process the sensor data in real-time to produce actionable steps of the vehicle. This is an extremely crucial and data-intense operation, and requires fast execution. Therefore, most autonomous vehicles are built with specific hardware requirements based on the multi-format data interaction and simultaneous processing. The hardware requirements include GPU, TPU, FPGA for training and deployment to make the vehicle functional

Image credit: researchgate
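To make the CNN idea a little more concrete, the sketch below runs a single convolutional layer over a tiny grayscale frame and maps the result to a steering angle. It is a toy forward pass in plain Python: the kernels and weights are hand-picked rather than trained, and the function names, the `max_angle` limit and the sign convention are assumptions made for illustration.

```python
import math


def conv2d(image, kernel):
    """Valid 2D cross-correlation of a grayscale image with one kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(fmap):
    """Keep positive activations, zero out the rest."""
    return [[max(0.0, v) for v in row] for row in fmap]


def global_avg(fmap):
    """Collapse a feature map to one number (global average pooling)."""
    return sum(sum(row) for row in fmap) / (len(fmap) * len(fmap[0]))


def steering_angle(image, kernels, weights, bias=0.0, max_angle=0.5):
    """Map a camera frame to a steering angle in radians."""
    features = [global_avg(relu(conv2d(image, k))) for k in kernels]
    raw = sum(w * f for w, f in zip(weights, features)) + bias
    return max_angle * math.tanh(raw)  # squash into [-max_angle, max_angle]


# Hand-picked (untrained) edge detectors: dark-to-bright and bright-to-dark.
KERNELS = [[[-1.0, 1.0]], [[1.0, -1.0]]]
WEIGHTS = [1.0, -1.0]
```

With these hand-picked values, a frame that is brighter on the right yields a positive angle and its mirror image yields the opposite angle. A real network would learn many kernels and several layers from recorded driving data.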

Autonomous vehicles employ deep learning for a concrete reason: AVs rely on end-to-end learning and everything takes place in real time, so data must be processed extremely fast. In image processing, for example, camera images are fed directly into a CNN, which produces a steering angle as output. Deep learning runs within a larger technology stack that enables the vehicle to perceive, plan, coordinate and control. The overall movement of the vehicle encompasses the following:

- For perception: Localization or environmental perception

- For plan: Mission, behavior and motion planning

- For control: Path or trajectory tracking
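The three stages above can be sketched as a simple loop. The example below assumes a car that starts off-centre in its lane and must steer back to the centre line; perception reads a simulated state directly, planning always targets the lane centre, and control is a proportional steering law on a kinematic bicycle model. All names, gains and vehicle parameters are illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class VehicleState:
    y: float        # lateral offset from the lane centre (m)
    heading: float  # heading relative to the lane direction (rad)


def perceive(state):
    """Perception: report the car's pose relative to the lane.

    The simulated state is read directly here; a real car would have to
    estimate it from camera, LIDAR or radar data.
    """
    return state.y, state.heading


def plan(lateral_offset):
    """Planning: choose the lateral offset to aim for (0.0 is the lane centre)."""
    return 0.0


def control(lateral_offset, heading, target,
            k_y=0.3, k_heading=1.0, max_steer=0.5):
    """Control: proportional steering that tracks the planned offset."""
    steer = -(k_y * (lateral_offset - target) + k_heading * heading)
    return max(-max_steer, min(max_steer, steer))


def step(state, steer, speed=5.0, wheelbase=2.5, dt=0.05):
    """Advance a kinematic bicycle model by one Euler step."""
    y = state.y + speed * math.sin(state.heading) * dt
    heading = state.heading + speed / wheelbase * math.tan(steer) * dt
    return VehicleState(y, heading)


def drive(state, n_steps=200):
    """Run the perceive, plan, control loop for a fixed number of steps."""
    for _ in range(n_steps):
        offset, heading = perceive(state)
        target = plan(offset)
        state = step(state, control(offset, heading, target))
    return state
```

Calling `drive(VehicleState(y=1.0, heading=0.0))` simulates ten seconds of driving, over which the lateral offset decays towards zero. Real planners also handle mission and behavior decisions, which this sketch leaves out.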

Combined with machine learning algorithms, the data processed via deep learning also passes through pattern recognition methods such as SVM (support vector machine) with histogram of oriented gradients (HOG) features and PCA (principal component analysis). Clustering algorithms are also used to find the most relevant and appropriate imagery for understanding the environment, while a decision matrix algorithm, AdaBoost, is used for the overall prediction and decision about the car's movement.
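As an example of the kind of feature an SVM might consume, the sketch below computes a histogram of oriented gradients for a single image cell. It is deliberately simplified: it uses central differences, assigns each pixel to one orientation bin without interpolation, and skips the block normalization used in full HOG pipelines. The function name and the 9-bin default are assumptions for illustration.

```python
import math


def hog_cell_histogram(cell, n_bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations."""
    h, w = len(cell), len(cell[0])
    bin_width = 180.0 / n_bins
    hist = [0.0] * n_bins
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = cell[r][c + 1] - cell[r][c - 1]  # horizontal central difference
            gy = cell[r + 1][c] - cell[r - 1][c]  # vertical central difference
            magnitude = math.hypot(gx, gy)
            if magnitude == 0.0:
                continue  # flat region, no orientation to record
            # Unsigned orientation in [0, 180) degrees.
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            b = min(int(angle // bin_width), n_bins - 1)
            hist[b] += magnitude
    norm = math.sqrt(sum(v * v for v in hist))  # L2 normalisation
    return [v / norm for v in hist] if norm > 0 else hist
```

A cell containing a vertical edge puts its weight in the bin around 0 degrees, while a horizontal edge lands in the bin around 90 degrees. Histograms from many cells are concatenated into the feature vector that a classifier such as an SVM is trained on.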

End note

Autonomous vehicles have been under trial for a few years, with an increased focus on making their movement safe and efficient in cities across the world. Localization has been the most crucial challenge to work on, and recent advances in SLAM (simultaneous localization and mapping) have addressed some of the localization challenges. The way an autonomous vehicle perceives its environment has changed considerably in the past couple of years, and it continues to leverage sensor data and semantic data about the topology in order to perform consistently as the environment shifts.

With millions of accidents happening due to human error, AVs are a revolutionary concept for mobility in smart cities, the cities of the future. They can contribute significantly to reducing human dependency and averting human errors on the road, and can also reduce stress and congestion. Electric AVs will help reduce carbon emissions as well. The introduction of autonomous vehicles will also help in moving to the next level and achieving vehicle-to-vehicle communication. Google's self-driving car program, Waymo, has recorded the most successful run in the autonomous vehicles category so far. More is expected in the AV domain in the coming years, something to wait and watch out for.