Key topics of this blog:

- Economies of scale have historically given large enterprises insurmountable market advantages through the exploitation of mass production, distribution and marketing.

- In Digital Transformation, “Economies of Learning” are more powerful than “Economies of Scale” because of the ability to learn and deploy those learnings within digital assets faster.

- The classic ERP “Big Bang” approach to deploying technology is giving way to a use-case-by-use-case approach that enables learnings from one use case to be reapplied to future use cases.

- “Curated” data in the Data Lake becomes the foundation for collaborating with business stakeholders to create new sources of customer, product and operational value.

- The “Schmarzo Economic Digital Asset Valuation Theorem” articulates why organizations need to invest in the creation, operationalization and reuse of their digital assets.

- Data Silos and Orphaned Analytics are the great destroyers of the economic value of digital assets.

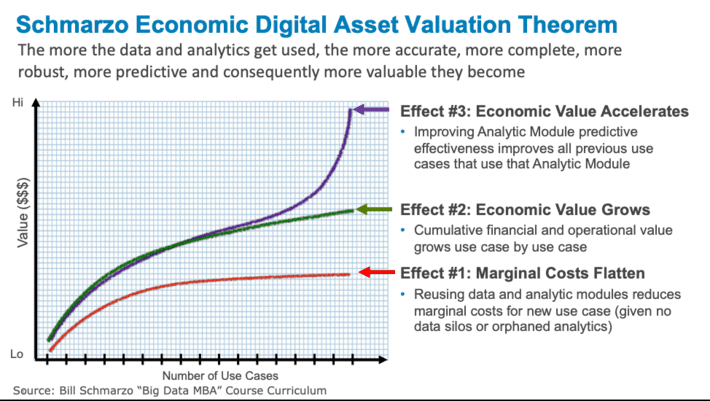

My blog “Why Tomorrow’s Leaders MUST Embrace the Economics of Digital Transf…” introduced the concept of the Schmarzo Economic Digital Asset Valuation Theorem (my attempt at a Nobel Prize in Economics). Since that blog, I’ve been overwhelmed with messages and customer requests to explain the concept in more detail (see Figure 1).

Figure 1: Schmarzo Economic Digital Asset Valuation Theorem

But before I dive into the details behind the Schmarzo Economic Digital Asset Valuation Theorem, let’s first have a little economics lesson.

History Lesson on Economies of Scale

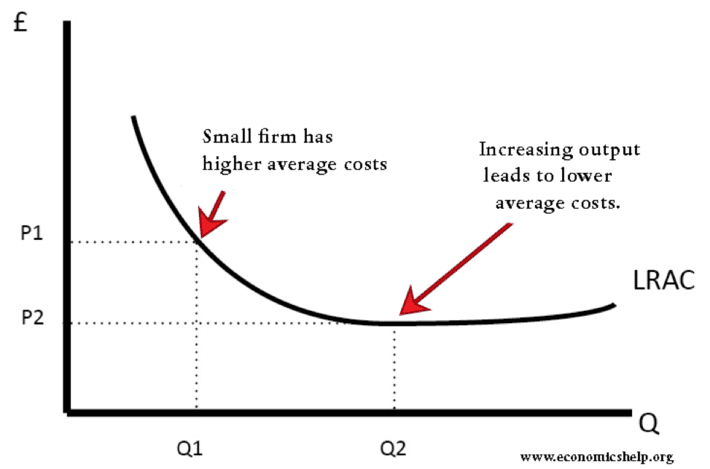

Historically, organizations sought competitive advantage through business models that exploited the economies of scale. Economies of scale manifest themselves in cost advantages that enterprises can enjoy due to their scale of operation (typically measured by volume of output), with cost per unit of output decreasing with increasing scale. Organizations leveraged the world of “mass” (mass procurement, production, distribution, marketing and sales) to erect insurmountable barriers to entry for competitors (see Figure 2).

Figure 2: Source: Definition of Economies of Scale

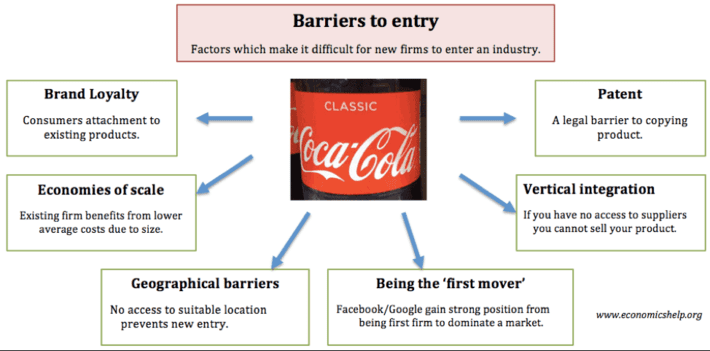

Volume affords an enormous competitive advantage. It not only spreads large fixed costs over high volumes of output to drive down costs per unit, but it also creates a forbidding barrier to entry that hinders new competitors from easily entering markets. See Figure 3 for an example of how Coca-Cola has leveraged economies of scale to acquire and defend its dominant market position.

Figure 3: Coca-Cola Barriers to Entry
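The fixed-cost spreading described above can be sketched with a few lines of arithmetic. This is only an illustration: the function name and the cost figures are hypothetical and are not taken from Coca-Cola or any real company.

```python
def cost_per_unit(fixed_cost: float, variable_cost_per_unit: float, volume: int) -> float:
    """Average cost of one unit: fixed costs spread over volume, plus the per-unit variable cost."""
    if volume <= 0:
        raise ValueError("volume must be positive")
    return fixed_cost / volume + variable_cost_per_unit


# Hypothetical figures, chosen only to make the shape of the curve visible.
FIXED_COST = 1_000_000.0   # plant, distribution network, brand marketing
VARIABLE_COST = 0.50       # materials and labor for one more unit

unit_costs = {
    volume: cost_per_unit(FIXED_COST, VARIABLE_COST, volume)
    for volume in (10_000, 100_000, 1_000_000, 10_000_000)
}

for volume, cost in unit_costs.items():
    print(f"{volume:>12,} units -> {cost:8.2f} per unit")
```

Under these assumed numbers, the cost per unit keeps falling toward the variable cost as volume grows, which is the advantage a low-volume entrant cannot match.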

Unfortunately, with economies of scale, the large fixed investments impede an organization’s agility and nimbleness, and ultimately preclude the organization from exploiting the Economics of Learning.

The Economics of Learning

In my blog “The Death of “Big Bang” IT Projects”, I discussed the concept of “Economies of Learning.” The book “The Lean Startup” by Eric Ries highlights the power of the “Economics of Learning” concept with a story about stuffing 100 envelopes. Instead of the traditional Economies of Scale (and the associated Division of Labor) approach of folding all 100 newsletters first, then stuffing all 100 newsletters into the envelopes, then sealing all 100 envelopes and finally stamping all 100 envelopes, we discover that the optimal way to stuff newsletters into envelopes turns out to be one at a time. The “one at a time” approach is more effective because:

- From a process perspective, you learn what’s most efficient by stuffing one envelope at a time. You can then immediately reapply those learnings in stuffing the next envelopes.

- You don’t have to wait until the end to realize that you have made a costly or fatal error (like folding all of the newsletters the wrong way before you realize that they won’t fit into the envelopes).

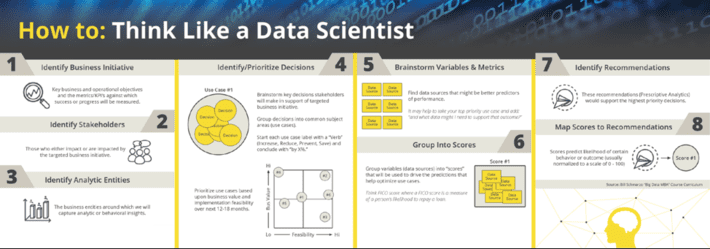

At Hitachi Vantara, we exploit the Economies of Learning in how we manage our data science (machine learning, deep learning, artificial intelligence) projects: we deploy data science on a use-case-by-use-case basis so that we can share and reapply data and analytic learnings to the next use case. The entirety of our “Thinking Like A Data Scientist” methodology revolves around driving organizational alignment and adoption around a process that seeks to identify, validate, value and prioritize the use cases necessary to support the organization’s key business or operational initiatives (see Figure 4).

Figure 4: Thinking Like A Data Scientist Methodology

So now that we understand that the sharing and reapplication of learnings is a powerful concept (the economies of learning versus the economies of scale), let’s review the 3 economic “effects” that power the Schmarzo Economic Digital Asset Valuation Theorem.

Digital Economics Effect #1: Marginal Costs Flatten

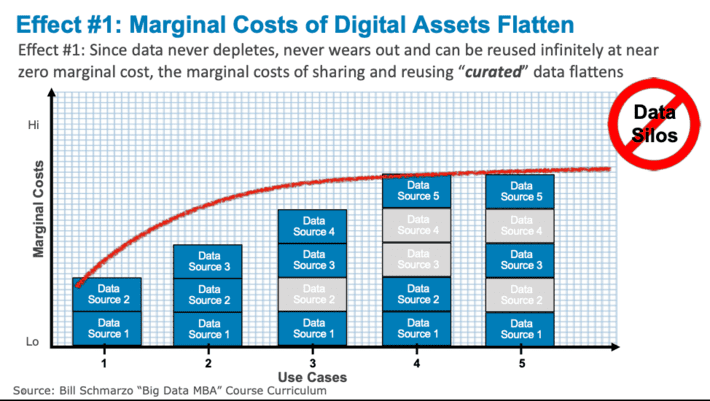

Effect #1: Since data never depletes, never wears out and can be reused infinitely at near zero marginal cost, the marginal costs of sharing and reusing “curated” data flatten (see Figure 5).

Figure 5: Effect #1: Marginal Costs of Digital Assets Flatten

It is the sharing and reuse of the “curated” data, not the raw data, that enables the organization to exploit the data lake to flatten the marginal costs of data. “Curated” data is a data set in which investment has been made to improve its cleanliness, completeness, accuracy, granularity and latency, to enhance its metadata, and to govern it to ensure compliance in its use and continued enrichment, so that it can be easily accessed, understood and re-used with minimal to no incremental investment.

If organizations are seeking to drive down their data lake costs via the sharing and reuse of data across multiple use cases, then putting curated data into the data lake is critical. Loading raw data just turns your data lake into a data swamp by forcing each application to pay the full cost of preparing the data for use.
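To make the difference between a curated data lake and a data swamp concrete, here is a minimal sketch of cumulative data cost across use cases. The function name, the cost figures and the assumption that curation is paid once up front are all illustrative, not measurements from a real data lake.

```python
def cumulative_data_cost(
    use_cases: int, prep_cost: float, access_cost: float, curated: bool
) -> list[float]:
    """Running total of data cost after each successive use case."""
    totals = []
    total = 0.0
    for n in range(1, use_cases + 1):
        if curated:
            # Curated data: preparation is paid once, later use cases only pay to access it.
            total += (prep_cost if n == 1 else 0.0) + access_cost
        else:
            # Raw data: every use case pays the full preparation cost again.
            total += prep_cost + access_cost
        totals.append(total)
    return totals


# Hypothetical cost units, for illustration only.
PREP_COST = 100.0
ACCESS_COST = 1.0

raw_totals = cumulative_data_cost(5, PREP_COST, ACCESS_COST, curated=False)
curated_totals = cumulative_data_cost(5, PREP_COST, ACCESS_COST, curated=True)

print("raw:    ", raw_totals)
print("curated:", curated_totals)
```

With these assumed numbers the raw totals climb by the full preparation cost at every use case, while the curated totals rise only by the small access cost after the first use case, which is the flattening that Effect #1 describes.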

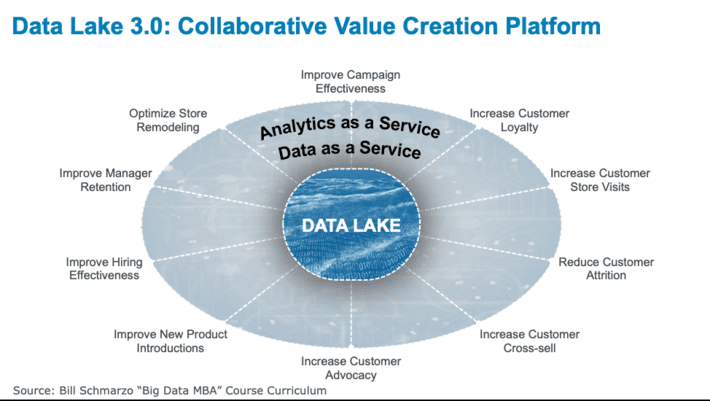

See the blog “Chief Data Officer Toolkit: Leading the Digital Business Transforma…” for more on the role of the data lake as the collaborative value creation platform that, when properly constructed as a business asset, can support the organization’s data monetization efforts (see Figure 6).

Figure 6: Data Lake 3.0: The Collaborative Value Creation Platform

Ultimately, the data lake becomes the organization’s “collaborative value creation” platform that facilitates the sharing of data across the organization, and across multiple use cases.

Note: Data Silos are the killers of the economic value of data. Data Silos are data repositories that remain under the control of one department and are isolated from the rest of the organization, which destroys the economic value of sharing and reusing the same data across multiple use cases.

Digital Economics Effect #2: Economic Value of Digital Assets Grows

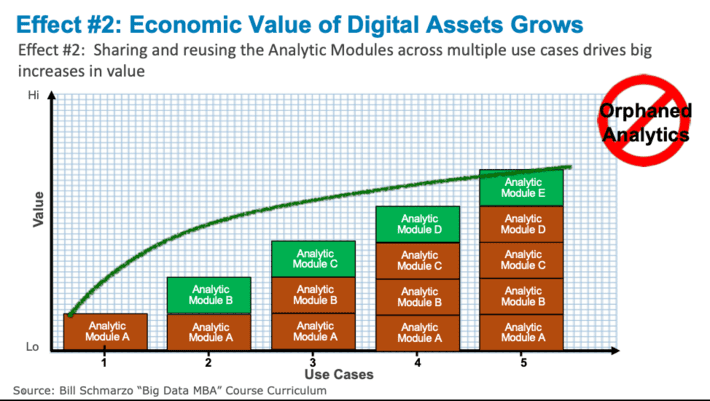

Effect #2: Sharing and reusing the Analytic Modules (Solution Cores in Hitachi terminology) across multiple use cases drives big improvements in analytics time-to-value (see Figure 7).

Figure 7: Effect #2: Economic Value of Digital Assets Grows

Unfortunately, “Orphaned Analytics” negate this time-to-value advantage. “Orphaned Analytics” are one-off analytics developed to address a specific business need but never “operationalized” or packaged for re-use across multiple use cases. Many organizations lack an overarching architecture and packaging process to ensure that the resulting analytics can be captured and re-used across multiple use cases.

Surprisingly, sometimes it’s the more advanced analytics organizations that suffer from “Orphaned Analytics.” These organizations become so comfortable with “banging out some analytics” to solve an immediate problem, that there is not sufficient effort invested to operationalize the analytics for sharing and reuse; operationalization just gets in the way of attacking the next analytics opportunity.

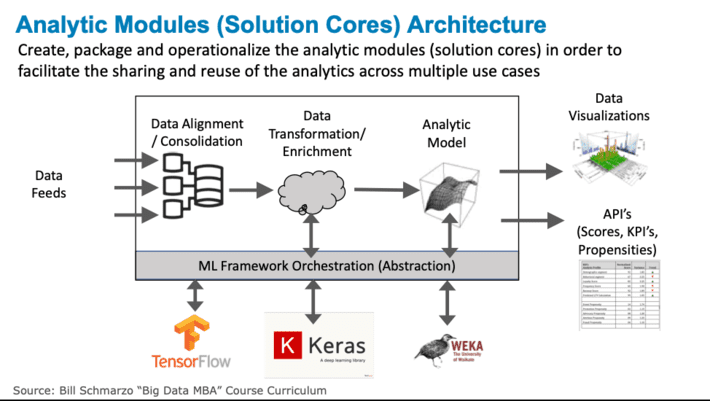

Organizations need to focus their analytics development efforts on creating, packaging and operationalizing their analytic modules (solution cores). Doing so avoids orphaned analytics and facilitates the sharing and reuse of the analytic modules across multiple use cases, which accelerates time-to-value and de-risks projects (see Figure 8).

Figure 8: Create, Package and Operationalize Your Analytic Modules

See the blog “How to Avoid ‘Orphaned Analytics’” for more details on the value of destroying Orphaned Analytics.

Digital Economics Effect #3: Economic Value of Digital Assets Accelerates

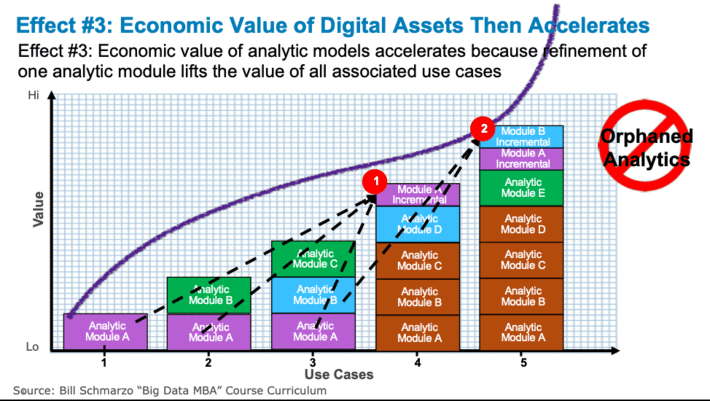

Effect #3: Across multiple use cases, the value of analytic model “refinement” has accelerated economic value impact because the refinement of one analytic module lifts the value of all associated use cases (see Figure 9).

Figure 9: Effect #3: Economic Value of Digital Assets Then Accelerates

Let me explain the “refinement” effect as shown in Figure 9:

- Analytic Module A (for example, Anomaly Detection 1.0), which was used in the first 3 use cases, is “refined” (Anomaly Detection 1.1) to deliver more effective predictive results for purposes of the 4th use case. The “refinement” of Analytic Module A for the 4th use case ripples back through the first 3 use cases, making them all slightly more effective at near zero marginal cost. The cumulative “refinement” value is reflected as u on Figure 9.

- The same “refinement” effect occurs when Analytic Module B (Remaining Useful Life 1.0) is “refined” for the benefit of use case #5 (Remaining Useful Life 1.1), and the economic benefits of the Analytic Module B “refinement” (reflected as v on Figure 9) ripple back through the previous use cases that used the original version of the analytic module (use cases #3 and #4).

This overall analytic model “refinement” economic effect is shown by the increasing angle of the slope in Figure 9. But this economic value acceleration cannot happen if the applications are not built as products that can be shared, reused and “refined”.
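The ripple effect can be sketched in a few lines of code. This is a toy model under stated assumptions: each module contributes a hypothetical “effectiveness” score to every use case that shares it, the starting score of 1.0 and the refinement size of 0.1 are invented, and the names `modules`, `use_cases`, `total_value` and `refine` are illustrative only. The mapping of modules to use cases follows the description of Figure 9 above.

```python
# Hypothetical effectiveness score that each shared module contributes per use case.
modules = {"anomaly_detection": 1.0, "remaining_useful_life": 1.0}

# Which modules each use case reuses, following the description of Figure 9.
use_cases = {
    "use_case_1": ["anomaly_detection"],
    "use_case_2": ["anomaly_detection"],
    "use_case_3": ["anomaly_detection", "remaining_useful_life"],
    "use_case_4": ["anomaly_detection", "remaining_useful_life"],
    "use_case_5": ["remaining_useful_life"],
}


def total_value() -> float:
    """Sum the current score of every module over every use case that uses it."""
    return sum(modules[name] for used in use_cases.values() for name in used)


def refine(module_name: str, delta: float) -> float:
    """Improve one shared module and return the lift in total value across all use cases."""
    before = total_value()
    modules[module_name] += delta
    return total_value() - before


# Refining Anomaly Detection (1.0 -> 1.1) lifts all four use cases that share it.
lift_u = refine("anomaly_detection", 0.1)
# Refining Remaining Useful Life (1.0 -> 1.1) lifts the three use cases that share it.
lift_v = refine("remaining_useful_life", 0.1)

print(f"lift from refining module A: {lift_u:.2f}")
print(f"lift from refining module B: {lift_v:.2f}")
```

In this toy model a single refinement is multiplied by the number of use cases sharing the module, so the payoff from each refinement grows as more use cases reuse it. An orphaned, one-off analytic would receive no such lift.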

Summary

The “Schmarzo Economic Digital Asset Valuation Theorem” provides a compelling economic theory for why organizations need to make the necessary investments in the creation, sharing and reuse of their digital assets. These investments should go into:

- Curated data, which is data in which the necessary investments have been made to improve the data set’s cleanliness, completeness, accuracy, granularity and latency, to enhance its metadata, and to govern it to ensure compliance in its use and continued enrichment, so that the data can be easily accessed, understood and re-used with minimal to no incremental investment; and

- Analytic modules (solution cores), which are analytics that have been created, packaged and operationalized to facilitate the sharing, reuse and “refinement” of the analytics across multiple use cases.

The killers of the economic value of digital assets include:

- Data Silos, which are data repositories that remain under the control of one department and isolated from the rest of the organization.

- Orphaned Analytics, which are one-off analytics developed to address a specific business need, but never “operationalized” or packaged for re-use in future use cases.

Organizations need to understand and exploit the 3 Digital Assets Value Theorem “effects” that underpin the economic value of digital assets:

- Effect #1: Since data never depletes, never wears out and can be reused infinitely at near zero marginal cost, the marginal costs of sharing and reusing “curated” data flatten.

- Effect #2: Sharing and reusing the Analytic Modules across multiple use cases drives big increases in value.

- Effect #3: Across multiple use cases, the value of analytic model “refinement” has accelerated value impact because the refinement of one analytic module lifts the value of all associated use cases.

While I may not get a Nobel Prize for Economics for this blog (to be released in a more detailed research paper with the University of San Francisco), my intent is to increase the awareness and significance of the unique economic characteristics of data and analytics.