Mobile applications have already made their mark on the digital front, with a large number of apps available on the Google Play Store and Apple's App Store. There are applications for almost everything today. But as the mobile app market expands, it faces new challenges and obstacles to overcome.

Deep Learning is a subfield of Artificial Intelligence. It uses multi-layer neural networks to learn patterns from data and can provide deep insights into applications and their issues. Time constraints and deadline pressure often keep developers and management from testing an app properly before its launch, and this is where deep learning can help by automating mobile application testing and deployment.

Users interact with an app through its GUI (Graphical User Interface). An interaction may be a click, a scroll, or text input on a GUI element such as a button, an image, or a text block. An input generator can produce such interactions automatically for testing.
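
As a point of comparison, here is a minimal sketch of the simplest possible input generator: one that picks elements and actions uniformly at random, with no notion of what a human would do. The `Element` and `Interaction` classes, the action names, and the sample text are hypothetical and chosen only for illustration.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Element:
    """Hypothetical on-screen GUI element."""
    kind: str  # "button", "image" or "text_block"
    x: int     # centre x coordinate in pixels
    y: int     # centre y coordinate in pixels


@dataclass
class Interaction:
    """Hypothetical interaction record: which action, on which element."""
    action: str                 # "click", "scroll" or "input_text"
    target: Element
    text: Optional[str] = None  # only set for "input_text"


def random_interactions(elements: List[Element], n: int, seed: int = 0) -> List[Interaction]:
    """Naive generator: choose an element and an action uniformly at random."""
    rng = random.Random(seed)  # seeded so that runs are reproducible
    result = []
    for _ in range(n):
        target = rng.choice(elements)
        actions = ["click", "scroll"]
        if target.kind == "text_block":
            actions.append("input_text")  # assumed: only text blocks accept typed text
        action = rng.choice(actions)
        text = "sample text" if action == "input_text" else None
        result.append(Interaction(action, target, text))
    return result


screen = [Element("button", 100, 200), Element("image", 300, 400), Element("text_block", 150, 600)]
for event in random_interactions(screen, 3):
    print(event.action, event.target.kind, event.text)
```

A generator like this explores blindly; the idea behind Humanoid is to replace the uniform choice with a distribution learned from human interaction traces.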

Humanoid System:

The Humanoid system approaches app testing through automation while still making the system behave like a human tester. It is an automated GUI test generator that learns how humans interact with mobile apps and then uses the learned model to guide test generation the way a human tester would.

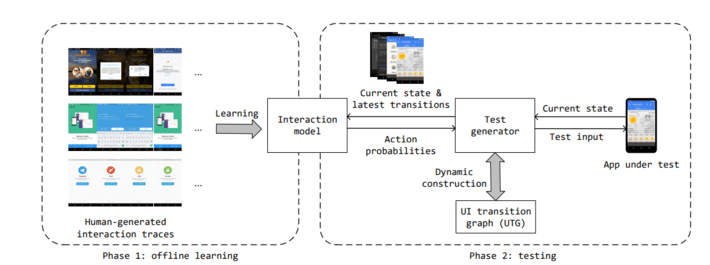

This approach can be divided into two phases:

Offline Phase:

In the offline phase, the model is trained on human-generated interaction traces. Deep neural networks learn the relationship between the GUI (Graphical User Interface) and the interactions users perform on it. Each GUI is represented as visual information taken from the current UI.
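
The text only says that a GUI is represented as visual information. One simple way to produce such a representation, assumed here purely for illustration, is to rasterize each UI element's bounding box onto a coarse grid, giving an image-like array that convolutional layers can consume. The screen and grid sizes and the single 0/1 channel are hypothetical choices, not Humanoid's documented encoding.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels; right/bottom exclusive


def rasterize_ui(boxes: List[Box], screen_w: int, screen_h: int,
                 grid_w: int, grid_h: int) -> List[List[int]]:
    """Paint element bounding boxes onto a grid_h x grid_w grid of 0/1 cells."""
    grid = [[0] * grid_w for _ in range(grid_h)]
    cell_w = screen_w / grid_w
    cell_h = screen_h / grid_h
    for left, top, right, bottom in boxes:
        # Map pixel bounds to the first and last grid cells the box overlaps.
        c0, c1 = int(left // cell_w), min(grid_w - 1, int((right - 1) // cell_w))
        r0, r1 = int(top // cell_h), min(grid_h - 1, int((bottom - 1) // cell_h))
        for r in range(r0, r1 + 1):
            for c in range(c0, c1 + 1):
                grid[r][c] = 1
    return grid


# A 400x800 screen with one element at the top-left and one near the bottom.
ui = rasterize_ui([(0, 0, 200, 100), (100, 700, 400, 800)], 400, 800, grid_w=4, grid_h=8)
for row in ui:
    print(row)
```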

An interaction is represented by its action type (touch, swipe, etc.) and the coordinates where the action takes place. After learning from a large number of human interaction traces, Humanoid can predict a probability distribution over action types and action locations for a new user interface. Because the UI is central to how people use an app, this predicted distribution is then used to estimate which UI element a human is likely to interact with and how.
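
The per-element estimate described above can be sketched by summing the location probability that falls inside each element's bounds and combining it with the action-type probabilities. This sketch assumes the location heatmap is a grid that sums to 1 and that action type and location are independent; both are simplifying assumptions, and all names are hypothetical.

```python
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (row0, col0, row1, col1), inclusive, in heatmap cells


def element_scores(type_probs: Dict[str, float],
                   heatmap: List[List[float]],
                   elements: Dict[str, Box]) -> Dict[Tuple[str, str], float]:
    """Score each (element, action type) pair.

    Assumes `heatmap` sums to 1 and that type and location are independent,
    so P(type, element) = P(type) * P(location inside element).
    """
    scores = {}
    for name, (r0, c0, r1, c1) in elements.items():
        # Probability mass of the predicted location that falls on this element.
        mass = sum(heatmap[r][c] for r in range(r0, r1 + 1) for c in range(c0, c1 + 1))
        for action, p in type_probs.items():
            scores[(name, action)] = p * mass
    return scores


types = {"touch": 0.7, "swipe": 0.3}
heat = [[0.1, 0.1],
        [0.6, 0.2]]
elements = {"ok_button": (1, 0, 1, 0), "list": (0, 0, 0, 1)}
scores = element_scores(types, heat, elements)
best = max(scores, key=scores.get)
print(best)  # ('ok_button', 'touch')
```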

Online Phase:

During the online testing phase, Humanoid builds a GUI model called a UI transition graph (UTG) for the app under test (AUT). Humanoid uses this GUI model together with the interaction model to decide which test input to send next. The UTG guides Humanoid as it navigates between UIs it has already explored, while the interaction model guides the exploration of new UIs.
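
This division of labour can be sketched in a few lines: the UTG records which action led from one UI state to another, a breadth-first search over it finds a route back to an already explored state, and for untried actions the interaction model's scores decide what to send next. The class and function names, the string encoding of actions, and `fake_model` are hypothetical; this illustrates the idea rather than reproducing Humanoid's implementation.

```python
from collections import deque
from typing import Callable, Dict, List, Optional, Set


class UTG:
    """Hypothetical UI transition graph: nodes are UI states, edges are actions."""

    def __init__(self) -> None:
        self.edges: Dict[str, Dict[str, str]] = {}  # state -> {action: next state}
        self.tried: Dict[str, Set[str]] = {}        # state -> actions already sent

    def add_transition(self, src: str, action: str, dst: str) -> None:
        """Record that sending `action` in state `src` led to state `dst`."""
        self.edges.setdefault(src, {})[action] = dst
        self.edges.setdefault(dst, {})
        self.tried.setdefault(src, set()).add(action)

    def path_to(self, start: str, goal: str) -> Optional[List[str]]:
        """Breadth-first search for the shortest known action sequence start -> goal."""
        queue = deque([(start, [])])
        seen = {start}
        while queue:
            state, path = queue.popleft()
            if state == goal:
                return path
            for action, nxt in self.edges.get(state, {}).items():
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, path + [action]))
        return None


ScoreFn = Callable[[str, List[str]], Dict[str, float]]


def choose_action(utg: UTG, state: str, candidates: List[str], model_scores: ScoreFn) -> Optional[str]:
    """Among untried actions, pick the one the interaction model rates most human-like."""
    untried = [a for a in candidates if a not in utg.tried.get(state, set())]
    if not untried:
        return None  # nothing new here: the caller can use path_to() to move elsewhere
    scores = model_scores(state, untried)
    return max(untried, key=lambda a: scores.get(a, 0.0))


def fake_model(state: str, actions: List[str]) -> Dict[str, float]:
    # Stand-in for a trained interaction model; these probabilities are made up.
    return {"tap:search": 0.8, "swipe:up": 0.2}


utg = UTG()
utg.add_transition("home", "tap:settings", "settings")
utg.add_transition("settings", "tap:about", "about")
print(utg.path_to("home", "about"))  # ['tap:settings', 'tap:about']
print(choose_action(utg, "home", ["tap:settings", "tap:search", "swipe:up"], fake_model))  # tap:search
```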

Interaction Trace Preprocessing:

Training the user interaction model, a key component of Humanoid, requires a large dataset of human interaction traces. A raw human interaction trace is a continuous stream of motion events.

Each motion event records the position of the pointer (for example, a finger) as it touches down, moves across, and leaves the screen. Meanwhile, the screen content changes continuously because of animations and dynamically loaded content, so the raw stream has to be preprocessed into discrete actions, each paired with the UI state in which it occurred.
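
A common way to turn such a stream into discrete actions, assumed here for illustration, is to cut it at each touch-down/lift-off pair and then label each segment by how far and how long the pointer travelled. The event tuple format and the thresholds `SWIPE_DIST_PX` and `LONG_PRESS_MS` are hypothetical values, not taken from Humanoid.

```python
import math
from typing import List, Tuple

# Hypothetical raw event: (timestamp_ms, kind, x, y), kind in {"down", "move", "up"}.
Event = Tuple[int, str, float, float]

SWIPE_DIST_PX = 50    # assumed: movement beyond this counts as a swipe
LONG_PRESS_MS = 500   # assumed: a stationary touch longer than this is a long press


def segment_trace(events: List[Event]) -> List[dict]:
    """Split a motion-event stream into discrete actions with a type and location."""
    actions = []
    start = None
    for t, kind, x, y in events:
        if kind == "down":
            start = (t, x, y)
        elif kind == "up" and start is not None:
            t0, x0, y0 = start
            dist = math.hypot(x - x0, y - y0)
            if dist > SWIPE_DIST_PX:
                action_type = "swipe"
            elif t - t0 > LONG_PRESS_MS:
                action_type = "long_press"
            else:
                action_type = "touch"
            # Keep the start time so the action can later be matched with the
            # UI state that was on screen when it began.
            actions.append({"type": action_type, "start": (x0, y0), "end": (x, y), "time": t0})
            start = None
        # "move" events only matter through the final "up" position in this sketch.
    return actions


trace = [
    (0, "down", 100, 200), (80, "up", 102, 201),
    (1000, "down", 300, 900), (1100, "move", 300, 600), (1200, "up", 300, 300),
    (2000, "down", 50, 50), (2700, "up", 50, 50),
]
print([a["type"] for a in segment_trace(trace)])  # ['touch', 'swipe', 'long_press']
```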

Model Learning:

Humanoid uses machine learning algorithms to learn human interaction patterns from these traces. End users interact with an app according to their own needs and requirements.

They interact with the app through the GUI. Given a vector describing the UI context, the interaction model outputs the “action” that a human is likely to perform in the current state. This predicted “action” is a probability distribution over the types and locations of expected human-like actions.

Convolutional Layer:

Convolutional layers are a very popular approach to image feature extraction and have proven very effective on computer vision tasks with real-world datasets. A stride-2 max-pooling layer placed right after the convolutional layer halves the width and height of the input. The pooling layers also help the model identify UI elements that have similar characteristics but different surroundings.
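
The halving effect of a stride-2 max-pooling layer is easy to see on a small feature map. The pure-Python function below is an illustrative sketch with a 2x2 window; a real model would use a deep learning framework's pooling layer instead.

```python
from typing import List


def max_pool_2x2(feature_map: List[List[float]]) -> List[List[float]]:
    """2x2 max pooling with stride 2: output height and width are halved."""
    h, w = len(feature_map), len(feature_map[0])
    return [
        [max(feature_map[r][c], feature_map[r][c + 1],
             feature_map[r + 1][c], feature_map[r + 1][c + 1])
         for c in range(0, w - 1, 2)]
        for r in range(0, h - 1, 2)
    ]


fmap = [
    [1, 3, 0, 2],
    [4, 2, 1, 1],
    [0, 0, 5, 6],
    [1, 2, 7, 0],
]
print(max_pool_2x2(fmap))  # [[4, 2], [2, 7]]
```

Because each output cell keeps only the maximum of its window, shifting a strong activation by one pixel inside the window leaves the output unchanged, which is one source of the robustness to surroundings mentioned above.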

Residual LSTM modules:

LSTM stands for Long Short-Term Memory, a recurrent network used for sequence modeling problems such as video classification and machine translation. In a residual LSTM module, the input and the output of a normal LSTM have the same last dimension and are added directly through a residual path; such a residual structure makes the network easier to optimize. It also helps the model learn that the location of an action should fall inside a UI element.
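
The residual idea can be sketched with a single LSTM cell whose hidden size equals its input size, so each input vector can be added element-wise to the corresponding LSTM output. The cell below uses the standard LSTM gate equations in pure Python; the weights are hypothetical constants chosen only so the example runs, not trained values, and this is not Humanoid's actual module.

```python
import math
from typing import List, Tuple

Vec = List[float]
Mat = List[List[float]]
GATES = "ifoc"  # input, forget, output gates and candidate cell state


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def matvec(m: Mat, v: Vec) -> Vec:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def add(*vs: Vec) -> Vec:
    return [sum(xs) for xs in zip(*vs)]


class LSTMCell:
    """Standard LSTM cell whose hidden size equals its input size."""

    def __init__(self, size: int, w: float = 0.1) -> None:
        self.size = size
        # Hypothetical fixed weights (a scaled identity); a real model learns these.
        ident = [[w if i == j else 0.0 for j in range(size)] for i in range(size)]
        self.W = {k: ident for k in GATES}  # input-to-gate weights
        self.U = {k: ident for k in GATES}  # hidden-to-gate weights
        self.b = {k: [0.0] * size for k in GATES}

    def step(self, x: Vec, h: Vec, c: Vec) -> Tuple[Vec, Vec]:
        pre = {k: add(matvec(self.W[k], x), matvec(self.U[k], h), self.b[k]) for k in GATES}
        i = [sigmoid(v) for v in pre["i"]]
        f = [sigmoid(v) for v in pre["f"]]
        o = [sigmoid(v) for v in pre["o"]]
        g = [math.tanh(v) for v in pre["c"]]
        c_new = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, g)]
        h_new = [ov * math.tanh(cv) for ov, cv in zip(o, c_new)]
        return h_new, c_new


def residual_lstm(cell: LSTMCell, xs: List[Vec]) -> List[Vec]:
    """Run the LSTM over a sequence and add each input to its output (residual path)."""
    h = [0.0] * cell.size
    c = [0.0] * cell.size
    outputs = []
    for x in xs:
        h, c = cell.step(x, h, c)
        outputs.append(add(x, h))  # same last dimension, so element-wise addition works
    return outputs


cell = LSTMCell(size=3)
out = residual_lstm(cell, [[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]])
print(len(out), len(out[0]))  # 2 3
```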

De-convolutional layers:

This component generates high-resolution probability distributions from the low-resolution output of the residual LSTM modules. Options such as bilinear interpolation and deconvolution can both achieve this upsampling. A de-convolutional layer is used because it is easier to integrate into the DNN and more general than interpolation methods.
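
A de-convolutional (transposed convolution) layer with a 2x2 kernel and stride 2 doubles the height and width of its input: each low-resolution value scales a copy of the kernel into its own 2x2 block of the output. The sketch below applies such a layer to a single channel and then rescales the result into a probability distribution. The kernel values and the simple sum-normalization are illustrative assumptions; a trained layer learns its kernel, and a softmax is another common way to normalize.

```python
from typing import List

Grid = List[List[float]]


def conv_transpose2d(x: Grid, kernel: Grid, stride: int) -> Grid:
    """Single-channel transposed convolution without padding.

    Output size is (in - 1) * stride + kernel_size in each dimension; each input
    value scatters a scaled copy of the kernel, and overlapping copies are summed.
    """
    h, w = len(x), len(x[0])
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = (h - 1) * stride + kh, (w - 1) * stride + kw
    out = [[0.0] * out_w for _ in range(out_h)]
    for r in range(h):
        for c in range(w):
            for i in range(kh):
                for j in range(kw):
                    out[r * stride + i][c * stride + j] += x[r][c] * kernel[i][j]
    return out


def normalize(grid: Grid) -> Grid:
    """Rescale non-negative scores (with a non-zero total) so all cells sum to 1."""
    total = sum(map(sum, grid))
    return [[v / total for v in row] for row in grid]


low_res = [[1.0, 3.0],
           [0.0, 4.0]]
kernel = [[1.0, 1.0],
          [1.0, 1.0]]  # assumed kernel; a trained layer would learn these weights
high_res = normalize(conv_transpose2d(low_res, kernel, stride=2))
print(len(high_res), len(high_res[0]))  # 4 4
```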

Benefits of the Humanoid Approach:

- Humanoid can rank human-like actions within the top 10% of its predictions for most UI states.

- The time overhead that the Humanoid model adds to the test generator is minimal.

- Humanoid assigns the highest probability to the human-generated action in more than 50% of UI states.

- With Humanoid's guidance, model-based testing tools can achieve better performance in mobile application testing.

- It is highly effective on both open-source apps and market apps.
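
The first and third benefits are measured by where the human-generated action falls in the model's ranking of candidate actions for each UI state. A minimal sketch of both measurements follows; the per-state rankings are toy values invented for illustration, not Humanoid's evaluation data, and the rounding rule for the 10% cut-off is an assumption.

```python
import math
from typing import List, Sequence, Tuple

State = Tuple[str, Sequence[str]]  # (human action, model's actions ranked best-first)


def rank_of(human_action: str, ranked_actions: Sequence[str]) -> int:
    """1-based position of the human action in the model's ranking."""
    return list(ranked_actions).index(human_action) + 1


def top1_rate(states: List[State]) -> float:
    """Fraction of UI states where the human action got the highest probability."""
    return sum(rank_of(h, ranked) == 1 for h, ranked in states) / len(states)


def top_fraction_rate(states: List[State], fraction: float = 0.10) -> float:
    """Fraction of UI states where the human action ranks within the top `fraction`."""
    hits = 0
    for human, ranked in states:
        cutoff = max(1, math.ceil(fraction * len(ranked)))  # assumed rounding rule
        hits += rank_of(human, ranked) <= cutoff
    return hits / len(states)


# Toy data: four UI states, each with the same 20 ranked candidate actions.
candidates = [f"a{i}" for i in range(20)]
states = [("a0", candidates), ("a1", candidates), ("a7", candidates), ("a0", candidates)]
print(top1_rate(states), top_fraction_rate(states))  # 0.5 0.75
```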

Limitations:

- The Humanoid model does not capture every kind of input from human interaction.

- For some applications, the coverage Humanoid achieves is less than 10%.

- The Humanoid model does not yet make use of textual information.

- Because the Humanoid model is largely based on human interactions, it tends to avoid non-human interactions.

Conclusion:

As Artificial Intelligence technologies receive more attention and research support, we will see highly advanced mobile applications that can change the way we see our smartphones. The Humanoid model learns from human interaction traces and predicts probability distributions over human-like actions, which lets it generate realistic test inputs for each UI it encounters. It can achieve higher test coverage, and reach it faster, than other testing tools, so it automates the testing process for both open-source and market apps and saves cost and time for many businesses and firms. Further, the use of a machine learning algorithm can make the testing process less error-prone.