This article was written by Jim Frost.

Regression is a very powerful statistical analysis. It allows you to isolate and understand the effects of individual variables, model curvature and interactions, and make predictions. Regression analysis offers high flexibility but presents a variety of potential pitfalls. Great power requires great responsibility!

In this post, I offer five tips that will not only help you avoid common problems but also make the modeling process easier. I’ll close by showing you the difference between the modeling process of a top analyst and that of a less rigorous analyst.

Tip 1: Conduct a Lot of Research Before Starting

Before you begin the regression analysis, you should review the literature to develop an understanding of the relevant variables, their relationships, and the expected coefficient signs and effect magnitudes. Developing your knowledge base helps you gather the correct data in the first place, and it allows you to specify the best regression equation without resorting to data mining.

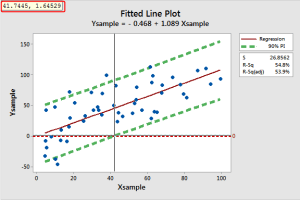

Regrettably, large databases stuffed with handy data, combined with automated model-building procedures, have pushed analysts away from this knowledge-based approach. Data mining procedures can build a misleading model that has significant variables and a good R-squared using randomly generated data!

In my blog post, Using Data Mining to Select Regression Model Can Create Serious Problems, I show this in action. There, stepwise regression builds a model from entirely random data. In the final step, the R-squared is decently high, and all of the variables have very low p-values!

Automated model-building procedures can have a place in the exploratory phase. However, you can’t expect them to produce exactly the correct model. For more information, read my Guide to Stepwise Regression and Best Subsets Regression.
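To get a feel for how this can happen, here is a minimal Python sketch, assuming NumPy is available. It is not the stepwise procedure from the blog post mentioned above: it uses a simplified greedy forward selection that, at each step, adds whichever candidate raises the in-sample R-squared the most. The function names, the random seed, and the sizes (30 observations, 100 candidate predictors) are illustrative assumptions.

```python
import numpy as np

def r_squared(X, y):
    """In-sample R-squared of an ordinary least squares fit with an intercept."""
    A = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ coef
    return 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)

def forward_select(X, y, n_keep):
    """Greedy forward selection: repeatedly add the column that raises R-squared most."""
    chosen, history = [], []
    for _ in range(n_keep):
        remaining = [j for j in range(X.shape[1]) if j not in chosen]
        best = max(remaining, key=lambda j: r_squared(X[:, chosen + [j]], y))
        chosen.append(best)
        history.append(r_squared(X[:, chosen], y))
    return chosen, history

rng = np.random.default_rng(0)
n_obs, n_candidates = 30, 100
X = rng.normal(size=(n_obs, n_candidates))  # candidate predictors: pure noise
y = rng.normal(size=n_obs)                  # response: pure noise, unrelated to X

chosen, history = forward_select(X, y, n_keep=5)
print("selected columns:", chosen)
print("R-squared after each step:", np.round(history, 3))
```

Every selected variable here is noise by construction, yet the R-squared climbs with each step simply because the procedure searches through many candidates. Any R-squared it reports reflects the search, not a real relationship.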

Tip 2: Use a Simple Model When Possible

It seems that complex problems should require complicated regression equations. However, studies show that simplification usually produces more precise models.* How simple should the models be? In many cases, three independent variables are sufficient for complex problems.

The tip is to start with a simple model and then make it more complicated only when it is truly needed. If you make a model more complex, confirm that the prediction intervals are more precise (narrower). When you have several models with comparable predictive abilities, choose the simplest because it is likely to be the best model. Another benefit is that simpler models are easier to understand and explain to others!

As you make a model more elaborate, the R-squared increases, but it becomes more likely that you are customizing it to fit the vagaries of your specific dataset rather than actual relationships in the population. This overfitting reduces generalizability and produces results that you can’t trust.

Learn how both adjusted R-squared and predicted R-squared can help you include the correct number of variables and avoid overfitting.
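As a rough illustration of how these statistics behave, the sketch below computes R-squared, adjusted R-squared, and predicted R-squared for nested models. It assumes NumPy; `fit_stats` and the simulated data (one real predictor plus up to ten noise columns) are hypothetical. Predicted R-squared is computed here from PRESS, the sum of squared leave-one-out prediction errors, using the standard leverage shortcut rather than refitting the model n times.

```python
import numpy as np

def fit_stats(X, y):
    """Return (R2, adjusted R2, predicted R2) for an OLS fit with an intercept."""
    n = len(y)
    A = np.column_stack([np.ones(n), X])
    p = A.shape[1] - 1                      # predictors, excluding the intercept
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ coef
    sst = np.sum((y - y.mean()) ** 2)
    r2 = 1.0 - (resid @ resid) / sst
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)
    # Leverages are the diagonal of the hat matrix H = A (A'A)^-1 A'.
    leverage = np.sum(A * (A @ np.linalg.pinv(A.T @ A)), axis=1)
    # Leave-one-out residual for row i equals resid_i / (1 - leverage_i).
    press = np.sum((resid / (1.0 - leverage)) ** 2)
    pred_r2 = 1.0 - press / sst
    return r2, adj_r2, pred_r2

rng = np.random.default_rng(1)
n = 40
x_real = rng.normal(size=n)                 # the only predictor that matters
noise_cols = rng.normal(size=(n, 10))       # irrelevant predictors
y = 2.0 + 1.5 * x_real + rng.normal(size=n)

results = []
for k in (0, 5, 10):                        # number of noise columns added
    X = np.column_stack([x_real, noise_cols[:, :k]])
    results.append((k, *fit_stats(X, y)))

for k, r2, adj_r2, pred_r2 in results:
    print(f"noise vars={k:2d}  R2={r2:.3f}  adj R2={adj_r2:.3f}  pred R2={pred_r2:.3f}")
```

Plain R-squared can only go up as columns are added, so it cannot flag the extra noise variables. Adjusted and predicted R-squared penalize them, which is why they are the more useful guides when deciding whether added complexity is earning its keep.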

Tip 3: Correlation Does Not Imply Causation . . . Even in Regression

Correlation does not imply causation. Statistics classes have burned this familiar mantra into the brains of all statistics students! It seems simple enough. However, analysts can forget this important rule while performing regression analysis. As you build a model that has significant variables and a high R-squared, it’s easy to forget that you might only be revealing correlation. Causation is an entirely different matter. Typically, to establish causation, you need to perform a designed experiment with randomization. If you’re using regression to analyze data that weren’t collected in such an experiment, you can’t be certain about causation.

Fortunately, correlation can be just fine in some cases. For instance, if you want to predict the outcome, you don’t always need variables that have causal relationships with the dependent variable. If you measure a variable that is related to changes in the outcome but doesn’t influence the outcome, you can still obtain good predictions. Sometimes it is easier to measure these proxy variables. However, if your goal is to affect the outcome by setting the values of the input variables, you must identify variables with truly causal relationships.

For example, if vitamin consumption is only correlated with improved health but does not cause good health, then altering vitamin use won’t improve your health. There must be a causal relationship between two variables for changes in one to cause changes in the other.
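The vitamin example can be sketched as a small simulation, assuming NumPy. Everything in it is hypothetical: a made-up `lifestyle` factor drives both vitamin use and health, and vitamins are given no causal effect at all. The sketch compares the regression slope in observational data with the slope when vitamin dose is assigned at random, as it would be in a designed experiment.

```python
import numpy as np

def slope(x, y):
    """Slope from a simple OLS regression of y on x (with an intercept)."""
    return np.cov(x, y)[0, 1] / np.var(x, ddof=1)

rng = np.random.default_rng(2)
n = 5000

# Hypothetical confounder: a health-conscious lifestyle affects both variables.
lifestyle = rng.normal(size=n)

# Observational data: vitamin use tracks lifestyle but has NO effect on health.
vitamins_obs = lifestyle + rng.normal(size=n)
health_obs = 2.0 * lifestyle + rng.normal(size=n)

# Randomized experiment: vitamin dose is assigned independently of lifestyle.
vitamins_rct = rng.normal(size=n)
health_rct = 2.0 * lifestyle + rng.normal(size=n)

obs_slope = slope(vitamins_obs, health_obs)
rct_slope = slope(vitamins_rct, health_rct)
print(f"observational slope: {obs_slope:.2f}")
print(f"randomized slope:    {rct_slope:.2f}")
```

In the observational data the slope is clearly positive, so vitamin use works as a proxy for predicting health. Under random assignment the slope sits near zero, which shows that changing vitamin use would not change health in this simulated world.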
