As companies increase their investments in artificial intelligence (AI), there is growing pressure on developers and engineers to deploy AI projects more quickly and at greater scale across the enterprise.

Simply evaluating the ever-expanding universe of AI tools and services, often designed for different users and purposes, is a significant challenge in this growing and fast-moving environment.

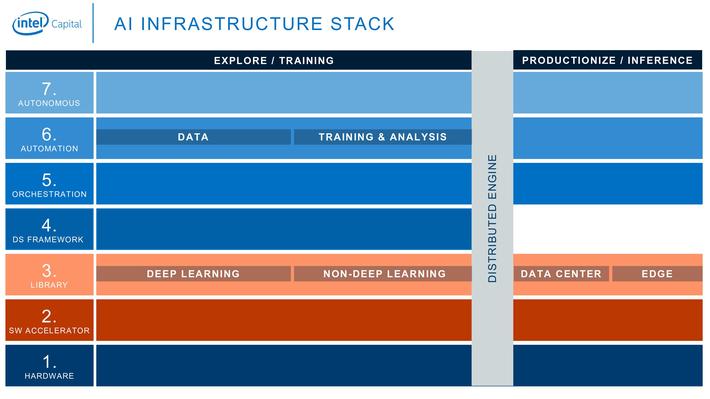

To address this challenge, we have created the AI Infrastructure Stack, a landscape map that brings greater clarity to the AI ecosystem by charting the layers of the AI technical stack and the vendors within each layer.

At Intel Capital, this map helps us identify the investments we believe will have the greatest positive impact on the future of AI. It also helps developers and engineers identify the resources they need to deliver their AI projects in the most efficient and effective way possible.

Figure 1: AI Infrastructure Stack (Source: Intel Capital).

This technical infrastructure stack is focused on horizontal solutions that address fundamental needs in developing AI, regardless of the type of company or industry where it’s being deployed. We do not include vertical solutions that target specific industries.

The stack consists of seven layers. Each layer is divided into two parts, which comprise solutions built for very different workloads, data volumes, compute and memory requirements, and service-level agreements (SLAs):

- Explore/training solutions, which crunch data and create models algorithmically.

- Production/inference solutions, which respond using the trained models when recommendations are requested—e.g., to identify “you might also like” product suggestions on an e-commerce site or determine when to apply the brakes in a self-driving car.

Connecting everything is the enterprise’s distributed engine—the computation platforms that allocate workloads across compute resources.
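
To make the split between the two parts concrete, here is a minimal sketch in Python of the "you might also like" case mentioned above. It is an illustration under stated assumptions, not a description of any vendor's product: the function names `train` and `recommend`, the co-purchase counting approach, and the sample baskets are all hypothetical.

```python
from collections import Counter
from itertools import permutations


def train(baskets):
    """Explore/training part: crunch historical data into a model.

    The "model" here is just a table of how often two products
    were bought together (a deliberately simple assumption).
    """
    model = {}
    for basket in baskets:
        for bought, also_bought in permutations(set(basket), 2):
            model.setdefault(bought, Counter())[also_bought] += 1
    return model


def recommend(model, product, k=2):
    """Production/inference part: answer a request using the trained model."""
    counts = model.get(product, Counter())
    return [item for item, _ in counts.most_common(k)]


# Hypothetical purchase history used only for this illustration.
baskets = [
    ["laptop", "mouse", "bag"],
    ["laptop", "mouse"],
    ["laptop", "mouse", "dock"],
    ["phone", "case"],
]

model = train(baskets)                   # runs rarely, over a lot of data
print(recommend(model, "laptop", k=1))   # runs per request, must be fast
```

Training touches the whole data set once, while inference answers one small request at a time, which is why the two parts tend to have such different compute, memory, and SLA profiles.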

The layers of the stack are:

- Hardware. The right hardware is the foundation of training solutions, which run in the data center, as well as of inference solutions, which run in data centers and on edge devices.

- Software Accelerator. These are compilers and low-level kernels used to optimize machine learning (ML) libraries.

- Library. These are the libraries used to train ML models.

- Data Science Framework. This layer comprises tools that integrate libraries with other tools.

- Orchestration. These tools package, deploy, and manage the execution of ML training and model inference. DevOps wouldn’t be possible without this layer.

- Automation. These tools simplify and partially automate data preparation, model training, and other ML tasks.

- Autonomous. Tools in this layer automate aspects of building, deploying, or maintaining ML models. This is where AI trains AI.
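
One way to picture the structure described above is as a grid of seven layers by two parts. The following Python sketch models that grid and lists the slots a project has not yet filled. The names `Layer`, `PARTS`, and `open_slots`, the bottom-to-top numbering, and the placeholder tool names are assumptions made for illustration; they do not come from the landscape map itself.

```python
from enum import IntEnum
from itertools import product


class Layer(IntEnum):
    """The seven layers, numbered bottom to top (numbering is an assumption)."""
    HARDWARE = 1
    SOFTWARE_ACCELERATOR = 2
    LIBRARY = 3
    DATA_SCIENCE_FRAMEWORK = 4
    ORCHESTRATION = 5
    AUTOMATION = 6
    AUTONOMOUS = 7


# Each layer is divided into the same two parts.
PARTS = ("explore/training", "production/inference")


def open_slots(selection):
    """Return the (layer, part) slots for which no tool has been chosen."""
    return [slot for slot in product(Layer, PARTS) if slot not in selection]


# Hypothetical project choices; the tool names are placeholders.
selection = {
    (Layer.HARDWARE, "explore/training"): "example-datacenter-accelerator",
    (Layer.HARDWARE, "production/inference"): "example-edge-device",
    (Layer.LIBRARY, "explore/training"): "example-ml-library",
}

for layer, part in open_slots(selection):
    print(f"{layer.name.title():<24} {part}")
```

Not every project needs a tool in every slot, so a list like this is best read as a set of open questions rather than a set of gaps to close.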

The tools and services in each layer accelerate the development and deployment of AI; however, as with all emerging technologies, there are tradeoffs when deciding which to use. AutoML can fast-track the development of ML models, for example, but the resulting models may not be as accurate as custom-built ones.

Users must decide which tools and services to consume within each layer based on the requirements of their projects.

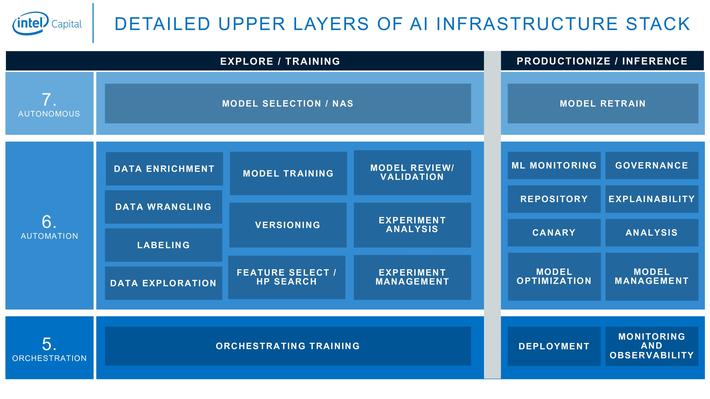

Figure 2: A detailed look at higher layers of our AI Infrastructure Stack (Source: Intel Capital).

At the top of the AI value chain are the Orchestration, Automation, and Autonomous layers. The Autonomous layer is becoming more critical for democratizing AI because it makes AI more accessible and easier to use for anyone, not just data scientists.

Although these are the newest layers of the stack, where AI tools and services are enabling continuous integration and continuous deployment (CI/CD) of AI, innovation is occurring across the entire stack: breaking new boundaries, increasing usability, and bringing AI to new communities.

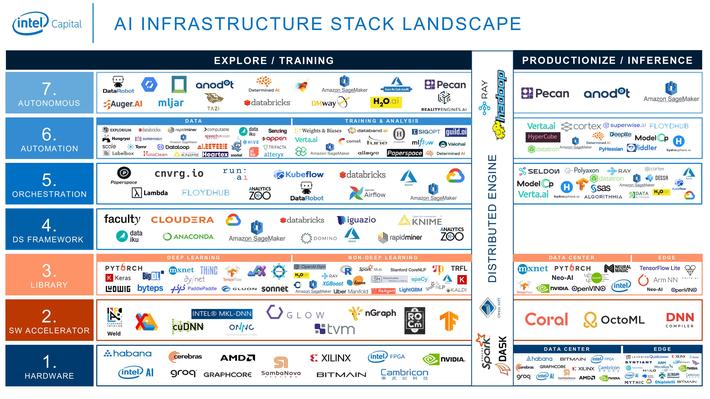

Finally, we populated our model with examples of tools, services, and companies—many of them open source. They don’t include all marketplace options; they are a sample for those considering AI solutions, with a robust range of options for each layer.

Figure 3: Representative examples of tools, services, and companies in our AI Infrastructure Stack (Source: Intel Capital).

AI is no longer in its infancy. For companies seeking to use AI to improve their products and services, or to drive greater efficiency and improved decision-making, there’s now a rich ecosystem of tools and services for building, deploying, and monitoring ML and AI models.

Keeping track of all that’s going on in the field, and how it fits together, can be the difference-maker when it comes to the success of your AI project.

_________________________________________________

Assaf Araki is an investment manager focused on AI and data analytics platforms and products at Intel Capital.

Ben Lorica, host of The Data Exchange podcast and program chair of the Spark+AI Summit and the Ray Summit, contributed to this post.

Graphics by Josiane Ishimwe, equity investor at Intel Capital.