Last year, just before Christmas, I gave a talk on Deep Learning at Hochschule München, Fakultät für Informatik und Mathematik (nbviewer, github, pdf).

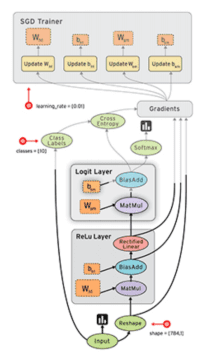

The talk was mainly about concepts (what’s “deep” in Deep Learning, backpropagation, how to optimize …) and architectures (Multi-Layer Perceptron, Convolutional Neural Network, Recurrent Neural Network), but it also included demos and code examples (mainly using TensorFlow).

Image source: see the pdf link above.

That was a lot of material to cover in 90 minutes, and building conceptual understanding and intuition was the main point. Of course, there is great online material to draw on, and you’ll see my preferences in the cited sources ;-).

This year, having covered the basics, I hope to develop use cases and practical applications that show Deep Learning is applicable even in environments that are not Google-size (or Facebook-, Baidu-, Apple-size …).

Stay tuned!
