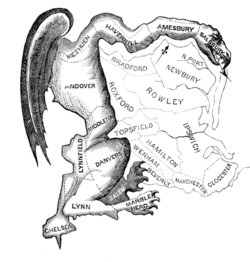

Gerrymandering has long been a problem in the process of choosing elected officials globally, but it is especially acute in the United States, where political redistricting occurs every ten years, after each census, in response to changes in population distribution and density. The term itself arose in 1812 in Massachusetts, when the Democratic-Republican governor, Elbridge Gerry, approved a redistricting plan containing a district that stretched around from the Southwest to the Northwest part of the map (see the image above).

Pundits quickly seized upon the new district’s resemblance to a dragon or salamander, and the portmanteau “gerrymander” entered the English language as the term for a political district map clearly designed to protect the interests of one party or another. It has factored heavily in politics ever since, because a gerrymandered map can entrench a given party for decades. In one recent case, Wisconsin’s district maps produced an outcome where the Republican party received 48% of the vote in the state but ended up taking 62% of the legislative seats.

The challenge in fighting gerrymandering is that there have historically been few effective means of determining whether a given district map is fair, and for lack of such a standard the Supreme Court has typically been loath to take on what it perceives as political cases. Without a compelling standard, the Court sees any such decision as calling its own impartiality into question.

Ultimately, the question of fairness as a measurable quality has been raised because a fair map is more likely to be one where the direction of the governing party reflects a consensus among state voters. When a minority party is effectively able to control the direction of policy, the dissonance between what the party wants and what the population wants grows wider. In statistical terms, this is what bias really means: the estimated mean and the actual mean diverge significantly. In natural processes, this is usually due to hidden variables or processes that haven’t been modeled into the sampling algorithm; in political processes, it is typically due to institutional efforts by one group or another to retain power.
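As a rough illustration of that divergence, the sketch below treats a party’s seat share as the “estimate” and its statewide vote share as the “actual” value, and reports the gap in percentage points. The function name `representation_gap` is made up for this example, and the gap is only a crude indicator, not a legal or statistical standard; district-based systems are not guaranteed to be proportional even under a neutral map.

```python
def representation_gap(vote_share: float, seat_share: float) -> float:
    """Return seat share minus vote share, in percentage points.

    A positive value means the party holds more seats than its
    share of the vote alone would suggest.
    """
    return round((seat_share - vote_share) * 100, 1)


# Wisconsin figures as quoted in this article: 48% of votes, 62% of seats.
gap = representation_gap(vote_share=0.48, seat_share=0.62)
print(f"Seat share exceeds vote share by {gap} percentage points")
```

A gap near zero says little on its own, but a large and persistent gap in one party’s favor is the kind of signal that prompts a closer look at how the districts were drawn.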

Wendy Tam Cho, a Senior Researcher with the National Center for Supercomputing Applications at the University of Illinois, is hoping to change that lack of a measurable standard. She and her research team have been using machine learning algorithms to sample districting maps from both US and international sources, together with the partisan bias in the resulting legislative representation, to determine what correlations exist. They then use the corresponding models to determine whether a given map is potentially gerrymandered and, if so, to what extent.

The algorithm, if accepted by legal scholars, could both provide a baseline for judging when a proposed map falls too close to the clearly gerrymandered category and generate maps, given population distributions, that minimize overall bias and favor no coalition over another.
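To make the idea of a baseline concrete, here is a minimal sketch of one way a proposed map could be compared against a set of reference maps. It is not Cho’s algorithm; the function `fraction_at_least_as_extreme` and the seat counts are hypothetical, invented purely for illustration. The assumption is that we already have the number of seats one party would win under each of several neutrally drawn maps, and we ask how often those maps are at least as favorable to that party as the proposed one.

```python
from typing import Sequence


def fraction_at_least_as_extreme(
    baseline_seats: Sequence[int], proposed_seats: int
) -> float:
    """Fraction of baseline maps that give the party at least as many
    seats as the proposed map does. Values near 0 mark the proposed
    map as an outlier relative to the baseline."""
    count = sum(1 for seats in baseline_seats if seats >= proposed_seats)
    return count / len(baseline_seats)


# Hypothetical toy data: seats won by one party under ten reference maps.
baseline = [48, 50, 51, 49, 52, 50, 47, 53, 50, 51]

print(fraction_at_least_as_extreme(baseline, 50))  # typical outcome
print(fraction_at_least_as_extreme(baseline, 60))  # far outside the baseline
```

In practice the baseline would need many more maps than this toy list, and how those maps are generated is exactly the hard part that the research addresses.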

Kurt Cagle is the Community Editor for Data Science Central.