The National Institute of Standards and Technology (NIST) has released its Artificial Intelligence Risk Management Framework (AI RMF 1.0), a guidance document for voluntary use by organizations designing, developing, deploying, or using AI systems to help manage the many risks of AI technologies.

The NIST AI RMF provides a practical and bipartisan perspective for managing AI risk.

The framework was developed in close collaboration with the private and public sectors, and it is intended to adapt to the AI landscape as technologies continue to develop.

This voluntary framework aims to help develop and deploy AI technologies in ways that enable the United States, other nations, and organizations to enhance AI trustworthiness while managing risks based on democratic values. It also aims to accelerate AI innovation and growth while taking civil rights, civil liberties, and equity for all into consideration.

According to the framework, AI poses a number of risks that differ from those of traditional software. AI systems are trained on data that can change over time, sometimes significantly and unexpectedly, affecting the systems in ways that can be difficult to understand. These systems are also "socio-technical" in nature, meaning they are influenced by societal dynamics and human behavior. AI risks can emerge from the complex interplay of these technical and societal factors, affecting people's lives in situations ranging from their experiences with online chatbots to the results of job and loan applications.

The framework equips organizations to think about AI and risk differently. It promotes a change in institutional culture, encouraging organizations to approach AI with a new perspective, including how to think about, communicate, measure, and monitor AI risks and AI's potential positive and negative impacts.

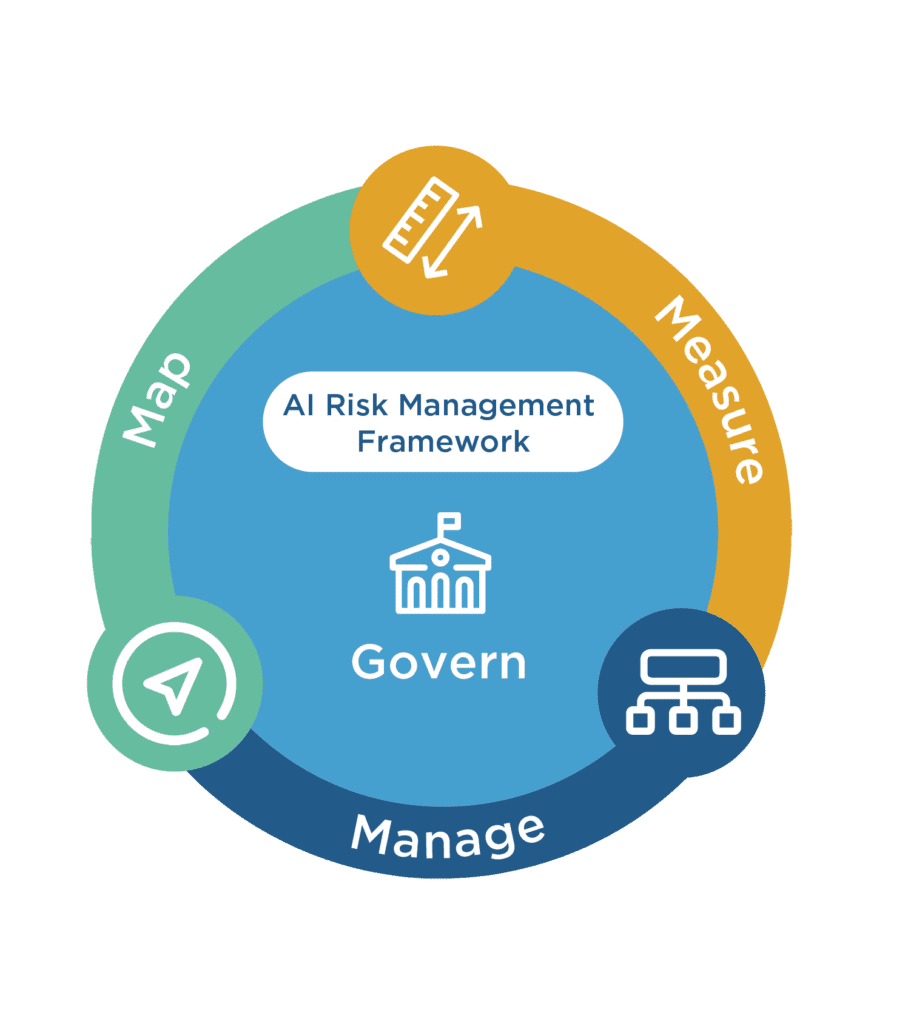

The AI RMF is divided into two parts. The first part discusses how organizations can frame the risks related to AI and outlines the characteristics of trustworthy AI systems. The second part, the core of the framework, describes four specific functions — govern, map, measure, and manage — to help organizations address the risks of AI systems in practice. These functions can be applied in context-specific use cases and at any stage of the AI life cycle.

The AI RMF refers to an AI system as an engineered or machine-based system that can, for a given set of objectives, generate outputs such as predictions, recommendations, or decisions influencing real or virtual environments. AI systems are designed to operate with varying levels of autonomy (Adapted from: OECD Recommendation on AI:2019; ISO/IEC 22989:2022).

This is a comprehensive definition, with autonomy built into it.

The framework and related resources can be accessed from the links below.

References

Perspectives

Roadmap

Standard (PDF download): https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.100-1.pdf

Press release

Images source