“I’ll tell you the problem with the scientific power that you’re using here: it didn’t require any discipline to attain it. You read what others had done and you took the next step. You didn’t earn the knowledge for yourselves, so you don’t take any responsibility for it. You stood on the shoulders of geniuses to accomplish something as fast as you could and before you even knew what you had, you patented it and packaged it and slapped it on a plastic lunchbox, and now you’re selling it, you want to sell it!”

– Dr. Ian Malcolm (Jeff Goldblum), “Jurassic Park”

When I started this blog series, I never planned on a Part III, but the feedback and comments I got from so many people put the pressure on me to respond. So, here we go…

In Part I of the blog series “It’s No Big Deal, but ChatGPT Changes Everything”, we were introduced to the world of ChatGPT, chatbots, and Generative Artificial Intelligence (AI). We talked about how, in the same way that Netflix, Google, Spotify, Waze, and Amazon changed users’ expectations about AI-powered recommendations, ChatGPT (and Generative AI more broadly) will change what we expect from AI when engaging with our operating environment.

In Part II, we talked about the potential of Generative AI to power Your Own Digital Assistant (YODA) to help guide your analysis and decision-making. Generative AI holds the potential to create a YODA-like experience that helps us assess our current situation; analyze our history of engagements, interactions, and preferences; tap into a worldwide knowledge repository of experience and expertise; and recommend actions or options so we can make more informed, more relevant, and safer decisions.

In Part III, we want to explore the ethics of ChatGPT, and what we as professionals and society need to do to ensure that we are leveraging the power of Generative AI to “do good” and prosper in an imperfect world.

But first, a warning from our sponsors…

Dangers of ChatGPT: Unintended Consequences

“There are known knowns. These are things we know that we know. There are known unknowns. That is to say, there are things that we know we don’t know. But there are also unknown unknowns. There are things we don’t know we don’t know.” – Donald Rumsfeld

We don’t know what we don’t know about Generative AI. So, extraordinary rigor must be invested in envisioning and brainstorming the potential unintended consequences of Generative AI tools like ChatGPT. A couple of areas of potential concern are already emerging.

1. Confirmation Bias. We have already seen grave consequences from the confirmation bias exhibited by poorly constructed AI models. We are becoming more and more aware of how AI model confirmation bias is negatively impacting people in areas such as lending and finance, housing, employment, criminal justice, and even healthcare (Figure 1).

Figure 1: AI Confirmation Bias and Amazon’s AI Recruiting Tool

2. Mental Atrophy. Atrophy is the gradual decline in effectiveness or vigor due to underuse or neglect. We see this in our physical abilities as we get older (I can no longer dunk a basketball, which I blame more on increases in gravity than on any decline in my physical skills). Some folks stop exercising as they get older and realize, sometimes too late, that their physical capabilities and overall wellness have atrophied. And yes, the same thing can happen to our brains.

The article “Use It or Lose It: The Principles of Brain Plasticity” discusses the principles and challenges of brain plasticity as we turn over more and more of our thinking to technology.

“This inattention to detail is substantially a product of modern culture. Modern culture is all about minimizing environmental challenges and surprises… about enabling brainless stereotypy in our basic actions so that our brains can be engaged at more abstract levels of operations. We’re no longer interested in the details of things in our world. Because our brains are highly dependent in their functional operations in recording information in detail, they slowly deteriorate. Without that recorded detail, memory and brain speed are compromised.”

We must embrace opportunities to challenge our brains, including questioning our conventional thinking and long-held beliefs, to continue strengthening the neural connections that keep our mental capabilities sharp. For example, struggling to recall the name of an actor in a particular movie is good exercise for your brain, as it can help rewire neural pathways and improve your memory and cognitive function.

ChatGPT: Establishing Credibility

It is very easy to blindly trust the answers you get from ChatGPT. Its human-like responses make it easy to accept its answers without fully considering the sources of those answers and their underlying assumptions.

Information without validation is opinion, and making decisions based upon opinion can be dangerous.

So, what could OpenAI do to increase our confidence in the answers given to us by ChatGPT? Here are a few ideas that I hope the folks at OpenAI are exploring:

- State the Sources. The one thing that Google search has over ChatGPT is that I can see the sources for the answers it returns. The answer isn’t a black box that I just have to trust, which matters because I’m a skeptic. And I hope that everyone is a skeptic in a world where it is easy to confuse opinion with fact.

- Credibility Score. When I know the source of the answer, I can then assess the credibility of that source (there are certain cable news shows, which I will not name to avoid embarrassing a couple of my friends, that have zero credibility in my experience). What if ChatGPT presented a “Credibility Score” with its answers? That “Credibility Score” could be based upon its vast knowledge base, fact-checking against multiple sources, and aggregated credibility feedback from users.

- Data Transparency. If numbers are being presented, state the source of those numbers (and provide access to the numbers themselves) so that users can judge for themselves the reasonableness and quality of any assessments based upon those numbers.

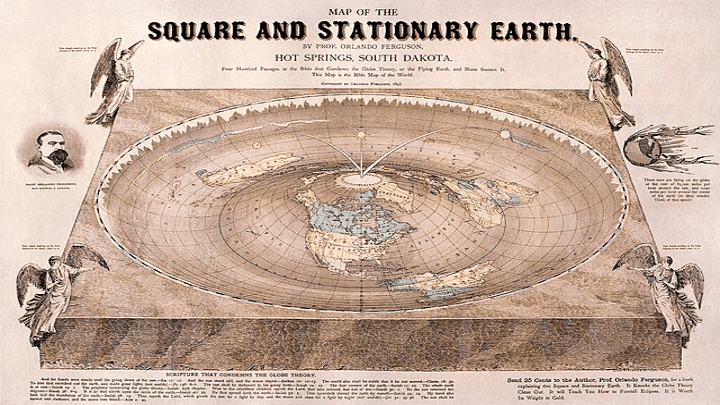

- Peer Reviewed. Data sources and information that have been peer reviewed by credentialed sources should be scored or rated higher than some random person with a random conspiracy theory. And just because a large number of people believe that something is true doesn’t make it true. For example, roughly 2% of Americans, or about 6.6M people, believe the Earth is flat[1]. Really?! By the way, I got this bridge in Brooklyn… (Figure 2).

Figure 2: See, The Earth is Flat!!
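To make the “Credibility Score” idea a bit more concrete, here is a minimal Python sketch of how such a score might blend the signals described above: the assessed credibility of each cited source, a boost for peer-reviewed sources, agreement across multiple sources, and aggregated user feedback. Everything in it (the `Source` type, `source_weight`, `credibility_score`, the 0-to-1 scale, and every weight) is a hypothetical illustration of the idea, not how OpenAI or ChatGPT actually works.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Source:
    """A cited source behind an answer (hypothetical structure)."""
    name: str
    credibility: float     # assessed credibility, 0.0 (none) to 1.0 (high)
    peer_reviewed: bool    # peer reviewed by credentialed experts?
    supports_answer: bool  # does this source agree with the answer being scored?


PEER_REVIEW_BONUS = 0.2  # assumed, arbitrary boost for peer-reviewed sources


def source_weight(src: Source) -> float:
    """Weight a source by its credibility, boosted (capped at 1.0) if peer reviewed."""
    bonus = PEER_REVIEW_BONUS if src.peer_reviewed else 0.0
    return min(1.0, src.credibility + bonus)


def credibility_score(sources: list[Source], user_ratings: list[float],
                      w_sources: float = 0.8, w_users: float = 0.2) -> float:
    """Blend credibility-weighted source agreement with average user feedback.

    Returns a score from 0.0 to 1.0. An answer with no (credible) sources
    scores 0.0: without validation it is treated as opinion, not fact.
    """
    total = sum(source_weight(s) for s in sources)
    if total == 0:
        return 0.0
    # Share of the total source weight that agrees with the answer, so sources
    # are weighed by credibility rather than simply counted.
    agreement = sum(source_weight(s) for s in sources if s.supports_answer) / total
    # Average user feedback (0.0 to 1.0); neutral 0.5 if nobody has rated it yet.
    user_score = mean(user_ratings) if user_ratings else 0.5
    return round(w_sources * agreement + w_users * user_score, 3)


if __name__ == "__main__":
    sources = [
        Source("Peer-reviewed geodesy study", 0.9, True, True),
        Source("Random conspiracy video", 0.05, False, False),
    ]
    print(credibility_score(sources, user_ratings=[0.8, 0.9, 1.0]))
```

The main design choice is that agreement is weighted by each source’s credibility rather than counted by votes, so a pile of low-credibility sources counts for far less than its raw size suggests, echoing the point that popularity doesn’t make something true. The weights and the peer-review bonus are placeholders that would need real calibration.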

Even if ChatGPT and other similar services were to act on some of these ideas and recommendations, that would not excuse us from thinking for ourselves. In an era where too many folks want to be spoon-fed the answers, the importance of critical thinking cannot be overstated.

Importance of Critical Thinking

“Spoon feeding in the long run teaches us nothing but the shape of the spoon.” ― E.M. Forster

We live in a society that seems eager to be spoon-fed how to think. This propensity has probably been around for some time, but it has been supercharged by cable news, podcasts, and social media. Now along comes Generative AI (in the guise of ChatGPT), providing human-like answers based upon unknown sources of data.

“You are entitled to your opinion. But you are not entitled to your own facts.” ― Daniel Patrick Moynihan

Yes, everyone has an opinion and is eager for you to embrace it. But opinion is not fact, and opinion is certainly not knowledge! Critical thinking is more important than ever in a world where non-credible opinions are as prevalent as credible facts.

Critical thinking is the judicious and objective analysis, exploration and evaluation of an issue or a subject in order to form a viable and justifiable judgment.

Key components of critical thinking include:

- Never accept the initial answer as the right answer. It’s too easy to take the initial result and think that it’s good enough. But good enough is usually not good enough, and one needs to invest the time and effort to explore if there is a better “good enough” answer.

- Be skeptical. Never accept someone’s “statement of fact” as “fact.” Learn to question what you read or hear. It’s very easy to accept at face value whatever someone tells you, but that’s a sign of a lazy mind. And learn to discern facts from opinions. You know what they say about opinions…

- Consider the source. When you are gathering requirements, consider the credibility, the experience and, maybe most importantly, the agenda of the source. Not all sources are of equal value, and the credibility of a source is highly dependent upon the context of the situation (see the article “Reasons Why Doctors Can’t Manage Money”).

- Don’t get happy ears. Don’t listen for the answer that you want to hear. Instead focus on listening for the answers that you didn’t expect to hear. That’s the moment when learning really starts.

- Embrace struggling. The easy answer isn’t always the right answer. In fact, the easy answer is seldom the right answer when it comes to the complex situations faced not only in the world of data science, but also in society and the business world.

- Stay curious; have an insatiable appetite to learn. This is especially true in a world where technologies are changing so rapidly. Curiosity may have killed the cat, but I wouldn’t want a cat making decisions for me anyway.

- Apply the reasonableness test. Does what you are reading make sense given what you have seen or read elsewhere (sorry, the Pope didn’t vote in the last US election)? And while technologies are changing rapidly, societal norms and ethics really aren’t.

- Pause to think. Find a quiet place where you can sequester yourself away to really think about everything that you’ve pulled together. Take the time to think and contemplate before rushing to the answer.

- Conflict is good…and necessary. Life is full of tradeoffs that require striking a delicate balance between numerous competing factors (increase one factor while reducing another). These types of conflicts are the fuel for innovation (see the blog “Embracing Conflict to Fuel Digital Innovation” for more details).

ChatGPT Changes Everything Summary – Part III

ChatGPT and similar Generative AI models will reward those who can apply knowledge, not those who can memorize and regurgitate knowledge.

Yes, there will be a “ChatGPT Changes Everything – Part IV” and it will explore the challenge that professionals will face in a world where Generative AI and ChatGPT-type capabilities will impact every profession. I believe that your professional differentiation will be less about the creation of mathematical (machine learning) models and more about the creation of economic (value creation) models.

There is much good that can be realized from the potential of Generative AI and ChatGPT to jump-start our thinking and challenge our assumptions. And Generative AI and ChatGPT could be a foundational piece for creating Your Own Digital Assistant (YODA), an intelligent, personalized guide that helps us make informed decisions by forcing us to contemplate a more holistic range of factors and variables, challenge our long-held assumptions, and think rather than just respond.

Because if we allow these AI developments to do our thinking for us, then why would these AI machines need us around…

“Once men turned their thinking over to machines in the hope that this would set them free. But that only permitted other men with machines to enslave them.” ― Frank Herbert, Dune

“Computer Code 601” – The Andromeda Strain

[1] The source of this 2% number is a Forbes article titled “Only Two-Thirds Of American Millennials Believe The Earth Is Round”