I read an article from the World Economic Forum that proposed an AI labeling system for AI products designed for children.

Today, for the first time, children are growing up in a world shaped by artificial intelligence (AI), and AI is implicitly making decisions for them. These algorithms depend on data that is collected and analyzed. The article proposes a toolkit that companies can use to develop trustworthy AI for children and youth, and that helps parents, guardians, children, and youth buy AI products responsibly and use them safely.

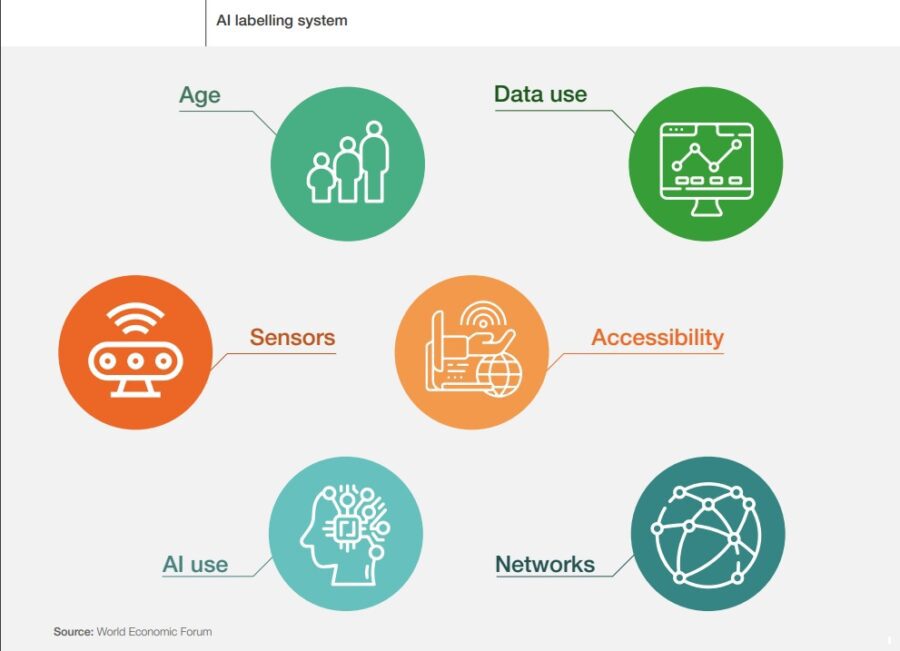

The article offers several suggestions for developing a child-centric AI labeling system, similar to a food labeling system.

The guidelines for building child-centric AI are:

- Employ proactive strategies for responsible governance

- Use ongoing ethical thinking and imagination

- Employ ethical governance for fairness

- Test and train with data to understand the behavior of the model and its areas of bias

- Build research plans, advisory councils, and participant pools that represent high variability in the target audience

- Actively seek user experience failures that create experiences of exclusion

- Build advisory councils and research participant pools that represent high variability in the target audience

- Actively seek user experience failures that create negative experiences

- Overcommunicate privacy and security implications

- Build conviction around the behavior of the AI and how it might adjust to a user’s development stage

- Conduct user research to inform scenario planning for nefarious use cases and mitigation strategies

- Build a multivariate measurement strategy

- Build a transparent, explainable, and user data-driven relationship model between the child, guardian, and technology to identify and mitigate harm

- Have the product team develop subject-matter expertise in technology concerns related to children and youth

- Build a security plan that takes children’s and youth’s cognitive, emotional, and physical safety into account

- Confirm the terms of use are clear, easy to read, and accessible to a non-technical, literate user

- Clearly disclose the use of high-risk technologies, such as facial recognition and emotion recognition, and how this data is managed

- Explicitly mention the geographic regions whose data protection and privacy laws are honored by the technology

- Use more secure options as default and allow guardians to opt into advanced features after reading their specific terms of use

- Clearly specify the age group for which the application is built

- Provide guidelines for the environment in which the technology is meant to be used

- Create alert mechanisms for guardians to intervene in case a risk is identified during usage

The idea of an AI label could help in developing such a system.
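To make the food-label comparison a little more concrete, here is a minimal Python sketch of what such a label might carry, based only on the transparency guidelines listed above (age group, high-risk technologies, regions, environment, secure defaults, guardian alerts). The class `AILabel`, its field names, the helper `missing_disclosures`, and the product name are all my own assumptions for illustration; none of them come from the WEF article.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class AILabel:
    """Hypothetical label for a child-facing AI product (not the WEF format)."""

    product: str
    min_age: int
    max_age: int
    high_risk_tech: tuple[str, ...] = ()   # e.g. "facial recognition"
    privacy_regions: tuple[str, ...] = ()  # regions whose privacy laws are honored
    intended_environment: str = ""         # e.g. "home, with a guardian nearby"
    secure_defaults: bool = True           # advanced features are opt-in
    guardian_alerts: bool = False          # guardians are alerted when a risk is found

    def render(self) -> str:
        """Return the label as short, readable lines, like a food label."""
        lines = [
            f"Product: {self.product}",
            f"Ages: {self.min_age}-{self.max_age}",
            f"High-risk technologies: {', '.join(self.high_risk_tech) or 'none disclosed'}",
            f"Privacy laws honored: {', '.join(self.privacy_regions) or 'not stated'}",
            f"Intended environment: {self.intended_environment or 'not stated'}",
            f"Secure by default: {'yes' if self.secure_defaults else 'no'}",
            f"Guardian alerts: {'yes' if self.guardian_alerts else 'no'}",
        ]
        return "\n".join(lines)


def missing_disclosures(label: AILabel) -> list[str]:
    """List guideline items the label leaves open (an assumed, partial check)."""
    gaps = []
    if label.min_age > label.max_age:
        gaps.append("age group is not a valid range")
    if not label.privacy_regions:
        gaps.append("no geographic regions named for data protection laws")
    if not label.intended_environment:
        gaps.append("no guidance on the intended environment")
    if label.high_risk_tech and not label.guardian_alerts:
        gaps.append("high-risk technology used without guardian alerts")
    return gaps


if __name__ == "__main__":
    # "StoryBot" is a made-up product used only for this example.
    label = AILabel(
        product="StoryBot",
        min_age=6,
        max_age=9,
        high_risk_tech=("emotion recognition",),
    )
    print(label.render())
    for gap in missing_disclosures(label):
        print("Missing:", gap)
```

A real label would need much more than this, but even a small structure like this shows how the guidelines could turn into something a parent can read at a glance.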

Source and image source: AI for Children, WEF