Introduction

Artificial intelligence (AI) and machine learning (ML) systems are becoming increasingly integral to decision-making processes across various industries, including healthcare, finance, education, law enforcement, and employment. As their influence grows, the ethical use of these technologies becomes paramount, and ethics-driven model auditing and bias mitigation are essential for ensuring that AI systems are fair.

However, these systems can unintentionally perpetuate and amplify biases, leading to unfair outcomes that disproportionately affect marginalized groups. Ethics-driven model auditing and bias mitigation address this problem by systematically evaluating AI systems for fairness, transparency, and accountability. In recent years, this approach has placed ethical principles at the centre of AI development to ensure that AI systems align with societal values and do not exacerbate existing inequalities.

This article explores the key aspects of ethics-driven model auditing and AI bias mitigation, including best practices, limitations, benefits, challenges, and future considerations.

Understanding bias in AI models

Understanding bias in AI models is crucial, because the type of bias determines the appropriate steps to handle it. Biases can be grouped by their nature and source; the most common types are described below.

Types of AI bias

Historical bias: It occurs when AI models are trained on data that reflects past societal prejudices or inequalities, such as discriminatory hiring practices. This bias perpetuates unfair outcomes, like favouring certain groups in loan approvals based on outdated trends. Addressing it requires auditing historical data for systemic inequities and adjusting datasets accordingly.

Addressing it is challenging because historical records are often incomplete or themselves biased, and therefore hard to rectify.

Representation bias: This occurs when certain classes or demographics are underrepresented in the training data, resulting in a skewed model performance. For example, if a facial recognition system is trained mostly on light-skinned faces, it may fail to identify darker-skinned individuals accurately. Mitigating this involves augmenting datasets with diverse samples or reweighting to balance representation. However, obtaining comprehensive data can be resource-intensive and may still miss edge cases.
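A first step in spotting representation bias is to compare each group's share of the training data with its share of the population the model is meant to serve. The sketch below assumes such reference shares are available (the 60/40 split is purely hypothetical), and `representation_gaps` is an illustrative helper, not a library function.

```python
from collections import Counter


def representation_gaps(groups, reference_shares):
    """Observed share of each group in the training data minus its reference share.

    groups: the protected-attribute value of each training example.
    reference_shares: assumed share of each group in the target population.
    Negative values mean the group is underrepresented.
    """
    counts = Counter(groups)
    total = len(groups)
    return {g: counts.get(g, 0) / total - ref for g, ref in reference_shares.items()}


# Toy face dataset: 90 light-skinned and 10 darker-skinned examples,
# compared with a hypothetical 60/40 split in the population served.
training_groups = ["light"] * 90 + ["dark"] * 10
gaps = representation_gaps(training_groups, {"light": 0.6, "dark": 0.4})
print({g: round(v, 2) for g, v in gaps.items()})  # {'light': 0.3, 'dark': -0.3}
```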

Measurement bias: It stems from using inaccurate or inappropriate proxies for real-world phenomena, distorting model predictions. For instance, using zip codes as a proxy for income might overlook socioeconomic diversity within areas. This type of bias requires the careful selection of features and validation against ground-truth data to ensure relevance. It’s challenging to detect without in-depth domain knowledge and can lead to unintended consequences if left unaddressed.

Algorithmic bias: It emerges during the training or optimization process when the model’s design or learning algorithm introduces unfairness. This can happen if optimization prioritizes accuracy for the majority group, neglecting minorities, or if the model overfits to biased patterns. Mitigation involves incorporating fairness constraints or techniques such as adversarial debiasing during model development. However, balancing fairness with performance remains a complex trade-off that requires ongoing monitoring and adjustment.
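Algorithmic bias often hides behind a good aggregate score. The minimal sketch below, using made-up toy data and hypothetical helper names, shows a model that reports 95% overall accuracy while being right only half the time for a minority group, which is why audits break metrics down by group.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each value of the protected attribute."""
    report = {}
    for g in sorted(set(groups)):
        idx = [i for i, x in enumerate(groups) if x == g]
        report[g] = accuracy([y_true[i] for i in idx], [y_pred[i] for i in idx])
    return report


# Toy data: the model is right on every majority example but only half the minority ones.
groups = ["majority"] * 90 + ["minority"] * 10
y_true = [1] * 100
y_pred = [1] * 90 + [1] * 5 + [0] * 5
print(accuracy(y_true, y_pred))                   # 0.95
print(accuracy_by_group(y_true, y_pred, groups))  # {'majority': 1.0, 'minority': 0.5}
```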

Defining ethical AI and fairness

Definition: Ethics-driven auditing involves systematically evaluating AI systems for compliance with ethical standards, such as fairness, accountability, transparency, and privacy (FAT-P).

Ethical AI refers to the development and deployment of AI systems that adhere to principles such as fairness, accountability, transparency, and respect for user rights. Fairness in AI involves ensuring that models do not produce discriminatory outcomes based on protected attributes like race, gender, or socioeconomic status. Auditing for fairness requires defining metrics, such as demographic parity (equal positive-outcome rates across groups) or equal opportunity (equal true positive rates across groups), to measure and address disparities.
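To make the two metrics concrete, here is a small pure-Python sketch (toy data, hypothetical helper names) that measures each as the gap between the highest and lowest group rate. In this example both groups receive positive predictions at the same rate, so demographic parity holds, yet qualified members of group `b` are approved only half as often as those of group `a`, so equal opportunity does not: the two definitions can disagree.

```python
def rate(values):
    """Mean of a list of 0/1 values."""
    return sum(values) / len(values)


def by_group(values, groups, g):
    """Values belonging to group g."""
    return [v for v, x in zip(values, groups) if x == g]


def demographic_parity_difference(y_pred, groups):
    # Gap between the highest and lowest positive-prediction rate across groups.
    rates = [rate(by_group(y_pred, groups, g)) for g in set(groups)]
    return max(rates) - min(rates)


def equal_opportunity_difference(y_true, y_pred, groups):
    # Gap between the highest and lowest true positive rate across groups.
    tprs = []
    for g in set(groups):
        t, p = by_group(y_true, groups, g), by_group(y_pred, groups, g)
        tprs.append(rate([pi for ti, pi in zip(t, p) if ti == 1]))
    return max(tprs) - min(tprs)


groups = ["a"] * 4 + ["b"] * 4
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]
print(demographic_parity_difference(y_pred, groups))         # 0.0
print(equal_opportunity_difference(y_true, y_pred, groups))  # 0.5
```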

Key stakeholders include data scientists, domain experts, ethicists, legal teams, and impacted communities.

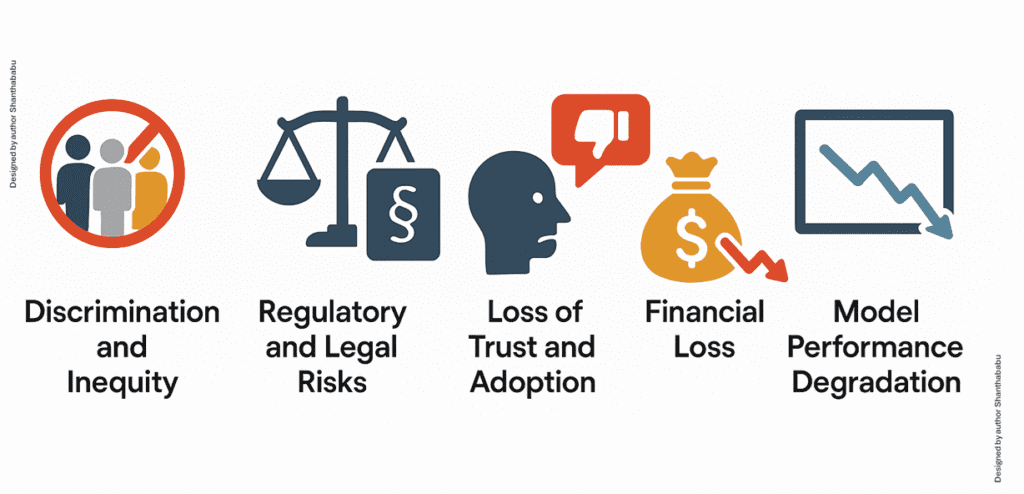

Impacts of bias in AI Models

Bias in AI models can lead to significant ethical, legal, and operational issues, particularly in high-stakes domains such as healthcare, finance, hiring, and law enforcement. Here’s a breakdown of the key impacts:

Discrimination and Inequity: AI may produce outputs that unfairly disadvantage specific groups (e.g., based on race, gender, age, or location). Potential Impacts: legal consequences, reputational damage, and exclusion of underrepresented groups.

Example: A recruitment AI tool that favours male resumes due to biased training data.

Regulatory and Legal Risks: Biased decisions can violate anti-discrimination and data protection laws (e.g., the Equal Credit Opportunity Act in the US or the GDPR in the EU). Potential Impacts: fines, lawsuits, and government scrutiny.

Example: Denying loans to minorities at a higher rate without justification.

Loss of Trust and Adoption: Users lose confidence in AI systems that show biased or inconsistent behaviour. Potential Impacts: low adoption, user pushback, and brand damage.

Example: A medical AI giving poor diagnostics for women or minorities.

Financial Loss: Incorrect decisions based on bias can cause financial errors or missed opportunities. Potential Impacts: revenue loss, customer churn, and operational inefficiency.

Example: An AI-based fraud detection model that blocks legitimate remittances from certain regions.

Model Performance Degradation: Bias leads to poor generalization across diverse users or unseen data distributions. Potential Impacts: decreased accuracy and a poor user experience.

Example: A voice assistant poorly recognizing non-native accents due to skewed training data.

Limitations of Bias Auditing and Mitigation

- Complexity of Bias: Bias can stem from multiple sources, such as data, algorithms, or human decision-making, making it challenging to identify and address comprehensively.

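- Metric Trade-offs: Fairness metrics often conflict with one another (e.g., when base rates differ between groups, demographic parity and equalized odds generally cannot both be satisfied), and enforcing any of them may reduce accuracy, complicating mitigation efforts.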

- Context Dependence: What constitutes fairness varies across cultures, domains, and use cases, making universal solutions difficult.

- Resource Constraints: Auditing and mitigation require significant computational and human resources, which may be inaccessible for smaller organizations.

- Evolving Standards: Rapid advancements in AI and changing ethical norms can outpace auditing frameworks, leading to outdated practices.

Benefits of ethics-driven model auditing

- Improved Fairness: Auditing and mitigation reduce discriminatory outcomes, fostering equitable AI systems.

- Enhanced Trust: Transparent and ethical AI systems build trust among users and stakeholders.

- Regulatory Compliance: Adhering to ethical standards ensures compliance with emerging AI regulations, reducing legal risks.

- Better Decision-Making: Mitigating bias leads to more accurate and reliable outcomes, particularly in high-stakes domains such as healthcare or criminal justice.

- Social Impact: Ethics-driven AI promotes social justice by addressing systemic inequalities and amplifying marginalized voices.

Challenges

- Data Quality and Availability: Incomplete or biased datasets can undermine auditing efforts, as models reflect the limitations of their training data.

- Scalability: Auditing large-scale models or real-time systems is computationally intensive and time-consuming.

- Subjectivity in Fairness: Defining fairness is subjective and often contested, leading to disagreements among stakeholders.

- Adversarial Risks: Malicious actors may exploit mitigation techniques, such as manipulating inputs to bypass fairness constraints.

- Balancing Ethics and Performance: Mitigation strategies can reduce model accuracy or efficiency, creating trade-offs that require careful consideration.

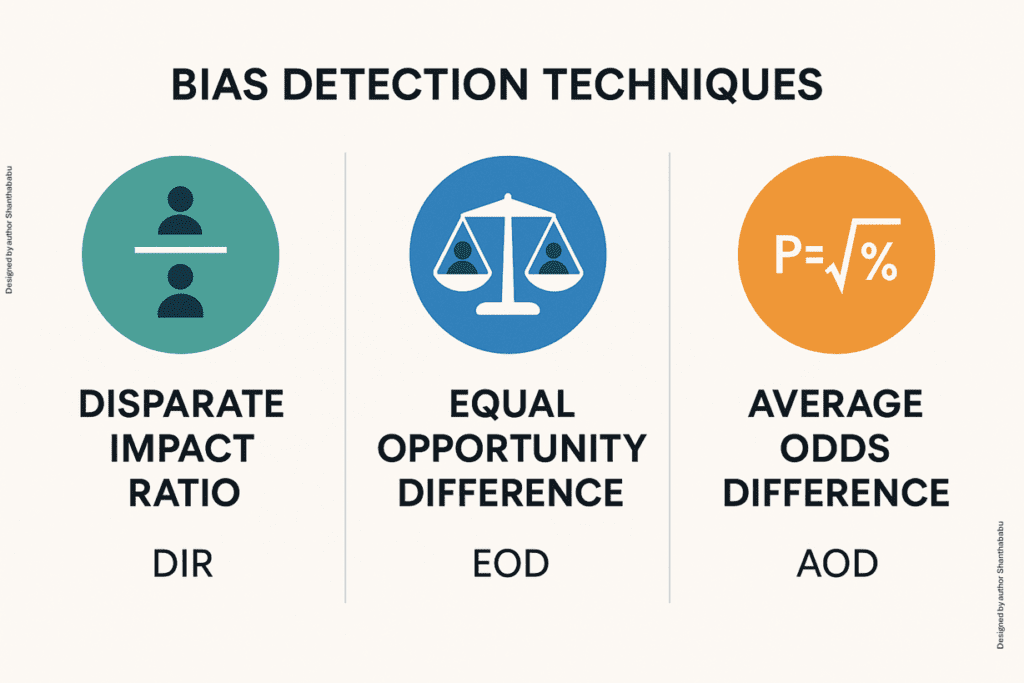

AI bias detection techniques

AI bias detection techniques are critical for ensuring that AI systems operate fairly and ethically across diverse groups. They identify disparities in model outcomes, such as unequal treatment based on race or gender, using metrics like the Disparate Impact Ratio, Equal Opportunity Difference, and Average Odds Difference.

Each technique analyzes model performance across groups to reveal unfair outcomes. The following is a concise explanation of each.

- Disparate Impact Ratio (DIR): It measures fairness by comparing the proportion of favourable outcomes (e.g., loan approvals) between protected groups (e.g., gender or race). It is calculated as the ratio of the favourable outcome rate for a disadvantaged group to that of an advantaged group. A DIR close to 1 indicates fairness, while values significantly below 1 suggest bias.

- For example, if 80% of men and 40% of women are approved, DIR = 0.5, indicating potential discrimination. DIR is widely used but may oversimplify complex fairness scenarios.

- Equal Opportunity Difference (EOD): It evaluates fairness by comparing true positive rates (TPR) across protected groups. It calculates the difference in TPR (e.g., correct predictions of loan repayment) between groups, aiming for a value near zero.

- For instance, if TPR is 70% for one group and 50% for another, the EOD is 0.20 (20 percentage points), signalling bias. EOD focuses on equalising correct positive predictions, which is crucial in domains such as hiring or medical diagnosis. However, it does not capture other fairness aspects, such as differences in false positive rates.

- Average Odds Difference (AOD): It assesses fairness by comparing both true positive rates (TPR) and false positive rates (FPR) across groups. It calculates the average of the absolute differences in TPR and FPR between protected groups, aiming for a value close to zero.

- For example, if one group has a TPR of 70% and an FPR of 20%, while another has a TPR of 60% and an FPR of 10%, then AOD = (|0.70 - 0.60| + |0.20 - 0.10|) / 2 = 0.10. AOD provides a balanced view of prediction errors but can be sensitive to class imbalances. It’s helpful in contexts like criminal justice or credit scoring.
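The three worked examples above can be reproduced with a few lines of plain Python. This is a sketch rather than any toolkit's API: the helper names are hypothetical, and `make_group` simply builds labels and predictions from assumed confusion-matrix counts. Note the sign convention: EOD is computed here as the unprivileged group's TPR minus the privileged group's (the convention AIF360 uses, for example), so the 20-point gap appears as -0.2, with the negative sign showing that the unprivileged group is worse off. AOD follows this article's absolute-difference definition; some toolkits average signed differences instead.

```python
def rate(values):
    """Mean of a list of 0/1 values."""
    return sum(values) / len(values)


def tpr_fpr(y_true, y_pred):
    """True positive rate and false positive rate for binary labels."""
    pos = [p for t, p in zip(y_true, y_pred) if t == 1]
    neg = [p for t, p in zip(y_true, y_pred) if t == 0]
    return rate(pos), rate(neg)


def disparate_impact_ratio(pred_unpriv, pred_priv):
    # Favourable-outcome rate of the unprivileged group over the privileged group.
    return rate(pred_unpriv) / rate(pred_priv)


def equal_opportunity_difference(unpriv, priv):
    # Each argument is a (y_true, y_pred) pair; signed TPR gap, unprivileged minus privileged.
    return tpr_fpr(*unpriv)[0] - tpr_fpr(*priv)[0]


def average_odds_difference(unpriv, priv):
    # Mean of the absolute TPR and FPR gaps, following the definition above.
    tpr_u, fpr_u = tpr_fpr(*unpriv)
    tpr_p, fpr_p = tpr_fpr(*priv)
    return (abs(tpr_u - tpr_p) + abs(fpr_u - fpr_p)) / 2


def make_group(tp, fn, fp, tn):
    """Build (y_true, y_pred) lists with the given confusion-matrix counts."""
    y_true = [1] * (tp + fn) + [0] * (fp + tn)
    y_pred = [1] * tp + [0] * fn + [1] * fp + [0] * tn
    return y_true, y_pred


# DIR example: 40% of women vs 80% of men approved (1 = approved).
women = [1] * 40 + [0] * 60
men = [1] * 80 + [0] * 20
dir_value = disparate_impact_ratio(women, men)

# EOD example: TPR of 50% vs 70%.
eod_value = equal_opportunity_difference(make_group(5, 5, 0, 10), make_group(7, 3, 0, 10))

# AOD example: (TPR, FPR) of (60%, 10%) vs (70%, 20%).
aod_value = average_odds_difference(make_group(6, 4, 1, 9), make_group(7, 3, 2, 8))

print(round(dir_value, 2), round(eod_value, 2), round(aod_value, 2))  # 0.5 -0.2 0.1
```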

Bias detection tools and frameworks

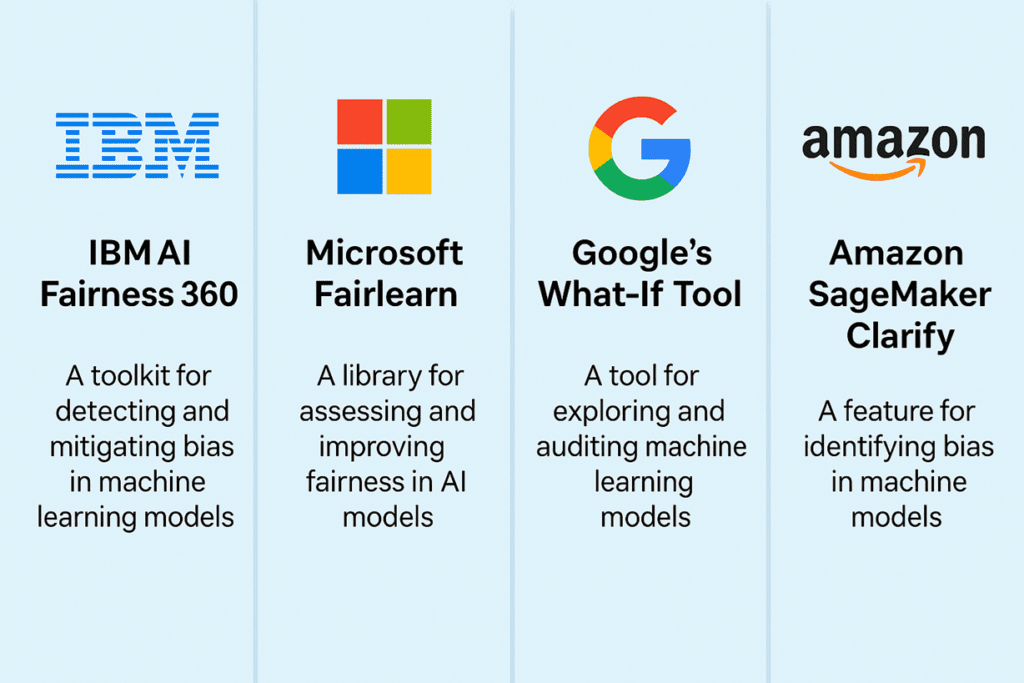

Bias detection tools and frameworks are software solutions designed to identify, measure, and mitigate biases in AI and machine learning models, ensuring fairness and ethical outcomes. They provide metrics like Disparate Impact Ratio, Equal Opportunity Difference, and Average Odds Difference to evaluate disparities in model performance across protected groups (e.g., race, gender).

Examples include IBM AI Fairness 360, Microsoft Fairlearn, Google’s What-If Tool, and Amazon SageMaker Clarify, which offer functionalities for data auditing, model analysis, and bias mitigation across various stages of the AI pipeline. Let’s explore them briefly.

- IBM AI Fairness 360 (AIF360): An open-source toolkit designed to detect and mitigate bias in machine learning models. It provides a comprehensive set of metrics, including Disparate Impact Ratio and Equal Opportunity Difference, to evaluate fairness across protected groups. It supports bias mitigation through pre-processing, in-processing, and post-processing techniques, such as reweighing and adversarial debiasing. The toolkit works with popular ML frameworks such as scikit-learn and TensorFlow, making it versatile for developers. It’s widely used for its extensive documentation and community support, but requires technical expertise to implement effectively.

- Microsoft Fairlearn: An open-source Python library designed to assess and improve the fairness of machine learning models. It offers metrics such as demographic parity and equalized odds, alongside visualization tools to analyze group disparities. Fairlearn supports mitigation through algorithms such as ExponentiatedGradient and ThresholdOptimizer, which balance fairness against accuracy. It’s user-friendly, integrates into Python-based workflows, and can be used with Azure ML. However, its scope is narrower than some tools, focusing primarily on classification tasks.

- Google’s What-If Tool (WIT): An interactive, browser-based tool for exploring the performance and fairness of ML models. It enables users to visualize model predictions, analyse feature importance, and test counterfactual scenarios to identify bias. WIT supports fairness criteria such as equal opportunity and integrates with TensorFlow and other platforms. Its visual interface makes it accessible to less technical users, but it’s less suited to large-scale or automated auditing. It excels in hypothesis testing and model interpretability.

- Amazon SageMaker Clarify: Clarify is a feature within Amazon SageMaker for detecting bias in machine learning and explaining model predictions. It provides metrics like Disparate Impact and Average Odds Difference, analyzing bias at both the data and model levels. Clarify generates detailed reports on feature importance and bias, with visualization tools for stakeholder review. It integrates seamlessly with the AWS ecosystem, making it scalable for enterprise use.

However, its proprietary nature and cost may limit accessibility for smaller organizations.
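The counterfactual analysis that the What-If Tool offers interactively can also be scripted. The sketch below is not WIT's API; it is a hypothetical pure-Python check that swaps only the protected attribute in each record and counts how often the prediction changes. The toy model deliberately uses gender directly so that the check has something to find. A flip rate of zero does not prove fairness, because a model may still rely on proxies correlated with the protected attribute.

```python
def counterfactual_flip_rate(model, records, attribute, swap):
    """Share of records whose prediction changes when only `attribute` is swapped.

    model: any callable that maps a record (a dict) to a prediction.
    swap:  maps each attribute value to its counterfactual value.
    """
    changed = 0
    for record in records:
        counterfactual = dict(record)  # copy, so the original record is untouched
        counterfactual[attribute] = swap[record[attribute]]
        if model(record) != model(counterfactual):
            changed += 1
    return changed / len(records)


def toy_model(record):
    # Hypothetical, deliberately biased scoring rule: adds 5 points for men.
    score = record["income"] / 1000 + (5 if record["gender"] == "male" else 0)
    return int(score >= 50)


applicants = [
    {"income": 30000, "gender": "female"},
    {"income": 47000, "gender": "female"},
    {"income": 48000, "gender": "male"},
    {"income": 60000, "gender": "male"},
]
rate = counterfactual_flip_rate(
    toy_model, applicants, "gender", {"male": "female", "female": "male"}
)
print(rate)  # 0.5: two of the four decisions depend on gender alone
```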

Model Auditing Frameworks

Ethics-driven model auditing involves systematic processes to evaluate AI systems and ensure their reliability. There are various frameworks, including the IEEE Ethically Aligned Design, the EU AI Act, the OECD AI Principles, and the ISO/IEC standards.

Toolkits such as AI Fairness 360 (IBM) and Fairlearn (Microsoft) complement these frameworks by providing practical tools to assess bias in datasets and models, offering support that goes beyond principles on paper. In practice, an audit typically includes:

- Data Audits: Examining datasets for representation biases, missing data, or historical inequities.

- Model Performance Analysis: Evaluating model outputs across subgroups to detect disparities.

- Interpretability Checks: Ensuring model decisions can be explained to stakeholders.

Audits should be conducted at multiple stages (pre-deployment, during operation, and post-deployment) to ensure ongoing fairness and transparency.

Model Auditing Methodologies

Pre-Deployment Auditing: Pre-deployment auditing involves analyzing the model before it goes live. This includes checking for dataset imbalances, evaluating how features impact predictions, and running fairness simulations, such as demographic parity, to ensure that no group is disproportionately affected. It helps prevent bias from entering production systems.

- Dataset checks (distribution, balance)

- Feature impact analysis

- Fairness simulations (e.g., demographic parity checks)
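A pre-deployment audit can be automated as a gate that runs before a model is released. The sketch below covers two of the checks listed above: group sizes in the evaluation data, and a demographic parity check expressed as a ratio of selection rates. Both thresholds are assumptions: 0.8 mirrors the "four-fifths" rule of thumb from US employment-selection guidance, and the minimum group size of 30 is arbitrary. Choose values that fit your domain and legal context.

```python
from collections import Counter

# Assumed audit thresholds; adjust to your domain and legal context.
MIN_GROUP_SIZE = 30
MIN_DISPARATE_IMPACT = 0.8  # "four-fifths" rule of thumb


def pre_deployment_audit(y_pred, groups):
    """Return a list of findings; an empty list means no check was flagged."""
    findings = []
    counts = Counter(groups)
    # Dataset check: groups that are too small give unreliable estimates.
    for g, n in sorted(counts.items()):
        if n < MIN_GROUP_SIZE:
            findings.append(f"group {g!r} has only {n} examples")
    # Fairness check: each group's selection rate relative to the highest one.
    rates = {
        g: sum(p for p, x in zip(y_pred, groups) if x == g) / n
        for g, n in counts.items()
    }
    best = max(rates.values())
    for g, r in sorted(rates.items()):
        if best > 0 and r / best < MIN_DISPARATE_IMPACT:
            findings.append(
                f"group {g!r} selection rate {r:.2f} is below "
                f"{MIN_DISPARATE_IMPACT:.0%} of the highest ({best:.2f})"
            )
    return findings


# Toy predictions (1 = favourable outcome) for two groups.
groups = ["a"] * 100 + ["b"] * 20
y_pred = [1] * 50 + [0] * 50 + [1] * 6 + [0] * 14
for finding in pre_deployment_audit(y_pred, groups):
    print(finding)
# group 'b' has only 20 examples
# group 'b' selection rate 0.30 is below 80% of the highest (0.50)
```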

Post-Deployment Auditing: After deployment, continuous auditing ensures that the model maintains fairness and accuracy. This involves detecting data or concept drift, monitoring performance across different user subgroups (e.g., age, gender), and tracking feedback loops or recurring errors that might indicate bias over time.

- Drift detection

- Performance monitoring across subgroups

- Feedback loops and error tracking
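For drift detection, one commonly used statistic (swapped in here as an illustration; the article does not prescribe a method) is the Population Stability Index (PSI), which compares the binned distribution of a feature or score in recent production data against a baseline. The sketch below is a minimal pure-Python version with hypothetical bin edges and data. A frequently cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but these cut-offs are conventions rather than standards. Computing PSI separately for each subgroup ties drift detection to subgroup monitoring.

```python
import math


def population_stability_index(expected, actual, edges):
    """PSI between a baseline sample (`expected`) and a recent sample (`actual`).

    edges: sorted bin edges; values outside the outer edges are clipped into
    the first or last bin. A small epsilon avoids log(0) when a bin is empty.
    """
    eps = 1e-6

    def shares(values):
        counts = [0] * (len(edges) - 1)
        for v in values:
            i = 0
            while i < len(counts) - 1 and v >= edges[i + 1]:
                i += 1
            counts[i] += 1
        return [c / len(values) + eps for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical applicant ages: a baseline sample and a recent, younger sample.
baseline = [20, 30, 40, 50, 60] * 20
recent = [20] * 60 + [40] * 20 + [60] * 20
edges = [0, 30, 45, 100]

print(population_stability_index(baseline, baseline, edges))          # 0.0
print(round(population_stability_index(baseline, recent, edges), 2))  # 0.72
```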

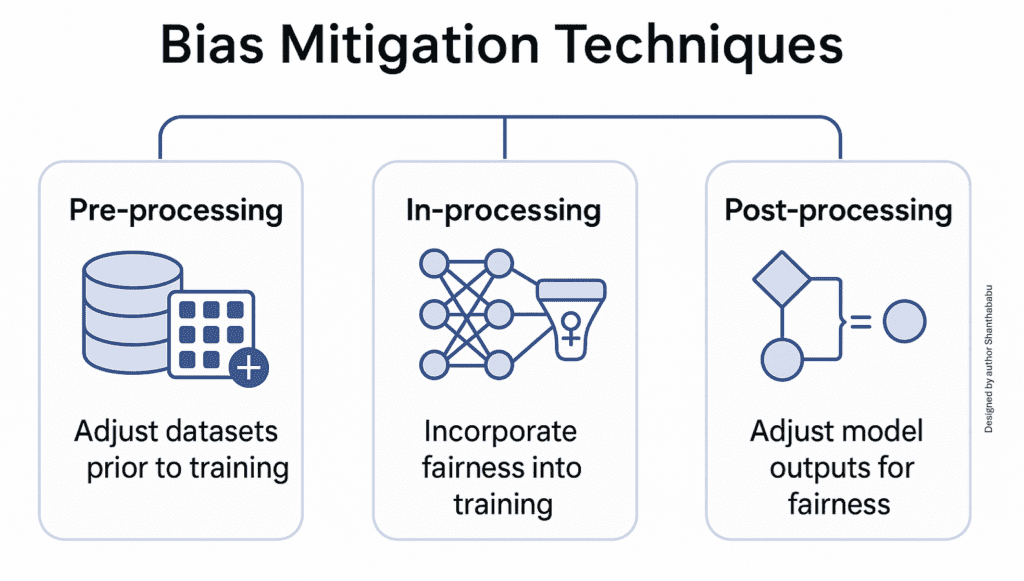

Bias Mitigation Techniques

Bias mitigation techniques are crucial for ensuring AI systems deliver fair and equitable outcomes, preventing discrimination against protected groups. They enhance trust, align models with ethical standards, and ensure compliance with regulations like the EU’s AI Act. By addressing biases, these techniques improve the reliability of decision-making in critical areas such as healthcare and criminal justice. They promote social justice by reducing systemic inequalities embedded in data or algorithms. Ultimately, they aim to foster inclusive AI that benefits diverse populations without causing harm.

Bias mitigation strategies aim to reduce unfair outcomes and can be applied at three stages of the AI pipeline: pre-processing, in-processing, and post-processing.

Pre-processing: This technique adjusts datasets before model training to ensure balanced representation across protected groups, such as race or gender. Methods include reweighting samples to give more importance to underrepresented groups or augmenting data to include more diverse examples. This approach reduces bias rooted in skewed or historical data but may require significant efforts in data collection. It’s effective for addressing data imbalances but doesn’t account for biases introduced during model training.
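One well-known pre-processing method is reweighing (Kamiran and Calders), which AIF360 implements as its `Reweighing` algorithm. Rather than upweighting a group as a whole, it gives each (group, label) combination the weight P(group) * P(label) / P(group, label), so that group membership and the label become statistically independent in the weighted data. The sketch below is a plain-Python illustration with toy data and hypothetical helper names, not the AIF360 API. The resulting weights could then be passed to any learner that accepts per-example weights, such as the `sample_weight` argument of scikit-learn's `LogisticRegression.fit`.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label).

    After weighting, every group has the same share of positive labels,
    so group membership and the label are statistically independent.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    num = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    den = sum(w for g, w in zip(groups, weights) if g == group)
    return num / den


# Toy data: group "a" has 4/6 positive labels, group "b" only 1/4.
groups = ["a"] * 6 + ["b"] * 4
labels = [1, 1, 1, 1, 0, 0] + [1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
print([round(w, 2) for w in weights])
# [0.75, 0.75, 0.75, 0.75, 1.5, 1.5, 2.0, 0.67, 0.67, 0.67]
print(round(weighted_positive_rate(groups, labels, weights, "a"), 2),
      round(weighted_positive_rate(groups, labels, weights, "b"), 2))  # 0.5 0.5
```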

In-processing: This incorporates fairness constraints directly into the model training process to minimize bias in predictions. For example, adversarial training uses a secondary (adversary) model that tries to predict the protected attribute, such as gender, from the main model's predictions; the main model is penalized whenever the adversary succeeds, which pushes its predictions toward independence from that attribute. This approach can produce inherently fairer models but may increase computational complexity and reduce overall accuracy. It’s ideal for scenarios where fairness is prioritized during model development.
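Adversarial training needs a second, jointly trained model, which is too much for a short example. The sketch below swaps in a simpler in-processing technique built on the same idea of adding a fairness term to the training loss. It fits a one-feature logistic regression by gradient descent while penalizing `lam` times the squared demographic parity gap (the difference in mean predicted probability between groups `a` and `b`). Function names, data, learning rate, and step count are all hypothetical choices for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_penalized_logreg(xs, ys, groups, lam, lr=0.05, steps=5000):
    """Fit p = sigmoid(w * x + b) by gradient descent on
    mean cross-entropy + lam * (mean p in group "a" - mean p in group "b") ** 2.
    lam = 0 gives plain (unpenalized) logistic regression."""
    w, b = 0.0, 0.0
    n = len(xs)
    ia = [i for i, g in enumerate(groups) if g == "a"]
    ib = [i for i, g in enumerate(groups) if g == "b"]

    def group_mean(vals, idx):
        return sum(vals[i] for i in idx) / len(idx)

    for _ in range(steps):
        p = [sigmoid(w * x + b) for x in xs]
        # Gradient of the mean binary cross-entropy.
        grad_w = sum((p[i] - ys[i]) * xs[i] for i in range(n)) / n
        grad_b = sum(p[i] - ys[i] for i in range(n)) / n
        # Demographic parity gap and its gradient, using dp/dz = p * (1 - p).
        gap = group_mean(p, ia) - group_mean(p, ib)
        slope = [pi * (1 - pi) for pi in p]
        slope_x = [s * x for s, x in zip(slope, xs)]
        grad_w += 2 * lam * gap * (group_mean(slope_x, ia) - group_mean(slope_x, ib))
        grad_b += 2 * lam * gap * (group_mean(slope, ia) - group_mean(slope, ib))
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def parity_gap(w, b, xs, groups):
    """Absolute difference in mean predicted probability between the groups."""
    pa = [sigmoid(w * x + b) for x, g in zip(xs, groups) if g == "a"]
    pb = [sigmoid(w * x + b) for x, g in zip(xs, groups) if g == "b"]
    return abs(sum(pa) / len(pa) - sum(pb) / len(pb))


# Toy data: the single feature is strongly correlated with group membership.
xs = [0.5, 1.0, 2.0, 3.0, -3.0, -2.0, -1.0, 0.0]
ys = [0, 1, 1, 1, 0, 0, 1, 0]
groups = ["a"] * 4 + ["b"] * 4

plain = fit_penalized_logreg(xs, ys, groups, lam=0.0)
fair = fit_penalized_logreg(xs, ys, groups, lam=10.0)
print("gap without penalty:", round(parity_gap(*plain, xs, groups), 3))
print("gap with penalty:   ", round(parity_gap(*fair, xs, groups), 3))
```

Raising `lam` shrinks the gap at the cost of a worse fit to the labels, which is the fairness-accuracy trade-off described above in miniature.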

Post-processing: Post-processing modifies model outputs after training to achieve fairness, such as adjusting decision thresholds to equalize outcomes across groups. For example, thresholds can be tuned to ensure equal approval rates for loans across demographics. This method is flexible and doesn’t require retraining, but may compromise model performance or consistency. It’s best suited for fine-tuning deployed models to meet fairness goals.
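Threshold adjustment is simple to sketch. The hypothetical helper below picks, for each group, the score cut-off that approves roughly a target share of that group, which equalizes approval rates (demographic parity). Fairlearn's `ThresholdOptimizer` does this in a more principled way and supports other constraints such as equalized odds; the code below is an illustration, not its API. Ties at the cut-off can push a group slightly above the target rate.

```python
def group_thresholds(scores, groups, target_rate):
    """Per-group cut-off that approves roughly `target_rate` of each group."""
    thresholds = {}
    for g in sorted(set(groups)):
        s = sorted((sc for sc, x in zip(scores, groups) if x == g), reverse=True)
        k = max(1, round(target_rate * len(s)))  # how many to approve in group g
        thresholds[g] = s[k - 1]                  # score of the k-th best applicant
    return thresholds


def approval_rates(scores, groups, thresholds):
    """Share of each group whose score meets that group's threshold."""
    rates = {}
    for g in sorted(set(groups)):
        s = [sc for sc, x in zip(scores, groups) if x == g]
        rates[g] = sum(sc >= thresholds[g] for sc in s) / len(s)
    return rates


# Hypothetical model scores; group "b" systematically receives lower scores.
scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.5, 0.4, 0.3]
groups = ["a"] * 4 + ["b"] * 4

single = {"a": 0.5, "b": 0.5}
adjusted = group_thresholds(scores, groups, target_rate=0.5)
print(approval_rates(scores, groups, single))    # {'a': 1.0, 'b': 0.5}
print(adjusted)                                  # {'a': 0.8, 'b': 0.5}
print(approval_rates(scores, groups, adjusted))  # {'a': 0.5, 'b': 0.5}
```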

Governance, Compliance, and Transparency

Governance, compliance, and transparency are crucial pillars in ensuring the responsible deployment of AI.

- Explainability (XAI): Ensures stakeholders understand model decisions.

- Ethical Review Boards: Interdisciplinary teams that oversee model development.

- Documentation: Model cards, data sheets, and system logs for audit trails.

- Regulatory Compliance: GDPR and the AI Act (EU), the Blueprint for an AI Bill of Rights (US, non-binding guidance), and sector-specific standards.

Conclusion

Ethics-driven model auditing and bias mitigation are essential for building AI systems that are fair, transparent, and accountable. By implementing robust auditing frameworks, engaging diverse stakeholders, and applying targeted mitigation strategies, organizations can address biases and align AI with ethical principles. Despite challenges like subjectivity, resource constraints, and evolving standards, the benefits of improved fairness, trust, and social impact make these efforts worthwhile. As AI continues to shape society, an ongoing commitment to ethical auditing and bias mitigation will be critical to ensuring that technology serves all communities equitably. Future advancements should focus on developing scalable, context-aware solutions and fostering global collaboration to standardize ethical AI practices.

These practices are no longer optional; they are essential to responsible AI development. As AI continues to shape our societies, the need for fairness, transparency, and accountability grows stronger. Addressing bias in AI systems is not a one-time task but an ongoing responsibility that spans data collection, model development, deployment, and feedback. By embedding ethical considerations into every phase of the AI lifecycle, organizations can create technologies that are not only intelligent but also just and inclusive.

Ultimately, the goal is to ensure that AI serves all people equitably, and that begins with building AI systems we can trust.