Introduction

The cybersecurity landscape is experiencing unprecedented transformation as organizations scramble to integrate artificial intelligence and machine learning capabilities into their security solutions. For product managers navigating this complex terrain, the challenge is particularly acute: how to harness the power of AI without getting bogged down in technical implementation details that can derail product strategy and timeline.

Enter LangChain—an open-source framework that democratizes the development of Large Language Model (LLM) applications. By providing an intuitive, modular approach to AI integration, LangChain enables product managers to enhance their cybersecurity offerings without requiring deep programming expertise. This paradigm shift allows teams to focus on what matters most: delivering value to customers while maintaining competitive advantage in a rapidly evolving market.

Cybersecurity research has explored advanced AI applications extensively, but little of that work has been made accessible to the product managers who drive strategic decisions and may lack coding backgrounds. LangChain bridges this divide, turning AI integration from a technical bottleneck into a strategic enabler.

The modern product manager’s dilemma

Today’s cybersecurity product managers operate in a high-stakes environment where they must simultaneously:

- Meet evolving customer security needs in an increasingly sophisticated threat landscape

- Maintain competitive positioning against agile startups and established players alike

- Integrate cutting-edge technologies without overwhelming their development resources

- Balance innovation with reliability in mission-critical security applications

Traditional approaches to AI integration have created significant barriers to innovation. Product managers often find themselves caught between ambitious AI visions and the harsh realities of implementation complexity. Development teams become bottlenecked by the technical intricacies of machine learning pipelines, API integrations, and model management—time that could be better spent on core product functionality and user experience optimization.

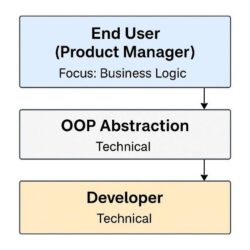

LangChain fundamentally changes this dynamic by abstracting away the technical complexity while preserving the strategic control that product managers need to make informed decisions about AI feature development.

Understanding LangChain

The power of LangChain lies not in its technical sophistication but in its conceptual simplicity. Product managers don’t need to understand neural network architectures or transformer models—they need to understand building blocks that can be assembled to create valuable security features.

The foundation: Chains and prompts

At its core, LangChain operates on two fundamental concepts that product managers can easily grasp:

Chains are workflows that connect different AI operations together, much like an assembly line for processing information. Prompts are the instructions that guide AI behavior, similar to the detailed specifications you might give a human analyst.

Consider this practical example for threat detection:

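# Classic LangChain imports; newer releases deprecate LLMChain and provide OpenAI via the langchain_openai package

from langchain.llms import OpenAI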

from langchain.prompts import PromptTemplate

from langchain.chains import LLMChain

# Product managers focus on crafting the business logic

template = """

Analyze this security alert and determine if it requires immediate attention:

Alert: {alert_text}

Please provide:

1. Severity Level (Critical/High/Medium/Low)

2. Recommended Actions

3. Whether this needs immediate escalation (Yes/No)

4. Confidence Level in Assessment

Analysis:

"""

# Technical implementation handled by development team

prompt = PromptTemplate(input_variables=["alert_text"], template=template)

llm_chain = LLMChain(llm=OpenAI(), prompt=prompt)

The beauty of this approach is the clear separation of concerns. Product managers can focus on defining what the AI should analyze and how it should respond, while developers handle the implementation details of connecting to AI models and managing the technical infrastructure.
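To make the chain idea concrete without an API key, the sketch below reproduces the two steps in plain Python: fill the template, then hand the prompt to a model. It is not LangChain's implementation. `make_chain`, `fake_llm`, and the shortened `ALERT_TEMPLATE` are hypothetical names introduced here, and `fake_llm` returns a canned string instead of calling a real model.

```python
from typing import Callable

# Shortened version of the alert prompt, for illustration only
ALERT_TEMPLATE = (
    "Analyze this security alert and determine if it requires immediate attention:\n"
    "Alert: {alert_text}\n"
    "Analysis:"
)


def make_chain(template: str, llm: Callable[[str], str]) -> Callable[..., str]:
    """Return a function that fills the template and passes the prompt to the model."""

    def run(**variables: str) -> str:
        prompt = template.format(**variables)  # step 1: build the prompt
        return llm(prompt)                     # step 2: ask the model

    return run


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; returns a canned answer."""
    return f"[model saw {len(prompt)} characters] Severity Level: High"


alert_chain = make_chain(ALERT_TEMPLATE, fake_llm)
print(alert_chain(alert_text="Multiple failed login attempts detected"))
```

Swapping `fake_llm` for a real model call leaves the template untouched, which is the separation of concerns described above.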

Advanced workflows with LangGraph

For more sophisticated cybersecurity workflows—such as multi-step incident response, dynamic threat hunting, or collaborative security operations—LangGraph extends LangChain’s capabilities into stateful, branching logic systems.

LangGraph enables product managers to:

- Visualize complex security workflows as connected decision trees

- Define conditional logic without writing complex code

- Coordinate agents for tasks that require multiple AI systems to work together

- Create adaptive responses that change based on real-time threat intelligence

Practical example: Automated security incident triage

Consider a real-world scenario where your security team receives hundreds of alerts daily. A LangGraph-powered triage system might work as follows:

- Initial Assessment: AI evaluates the alert severity using contextual threat intelligence

- Decision Branch: Based on severity level, the system routes to different response paths

  - High Severity: Immediately escalates to security team with detailed analysis

  - Medium Severity: Initiates additional monitoring and schedules follow-up review

  - Low Severity: Logs the incident and auto-closes with documentation

This workflow represents sophisticated automation that would traditionally require extensive custom development. With LangGraph, product managers can design and iterate on these workflows collaboratively with their technical teams.
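The triage steps above can be sketched in plain Python before committing to a graph framework. The example is an illustration only and does not use LangGraph's API: `TriageState`, `assess`, and the three handlers are hypothetical names, and a simple keyword rule stands in for the AI assessment step.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class TriageState:
    """State handed from step to step, similar in spirit to a graph's shared state."""
    alert_text: str
    severity: str = "unknown"
    actions: List[str] = field(default_factory=list)


def assess(state: TriageState) -> TriageState:
    """Initial assessment. A keyword rule stands in for the model (assumption)."""
    text = state.alert_text.lower()
    if "ransomware" in text or "exfiltration" in text:
        state.severity = "high"
    elif "failed login" in text:
        state.severity = "medium"
    else:
        state.severity = "low"
    return state


def escalate(state: TriageState) -> TriageState:
    state.actions.append("escalate to security team with detailed analysis")
    return state


def monitor(state: TriageState) -> TriageState:
    state.actions += ["start additional monitoring", "schedule follow-up review"]
    return state


def auto_close(state: TriageState) -> TriageState:
    state.actions += ["log incident", "auto-close with documentation"]
    return state


# Decision branch: severity level -> response path
ROUTES: Dict[str, Callable[[TriageState], TriageState]] = {
    "high": escalate,
    "medium": monitor,
    "low": auto_close,
}


def triage(alert_text: str) -> TriageState:
    """Run the assessment, then follow the branch that matches its severity."""
    state = assess(TriageState(alert_text))
    return ROUTES[state.severity](state)


for alert in (
    "Ransomware note found on file server",
    "Multiple failed login attempts detected",
    "Routine port scan from internal scanner",
):
    result = triage(alert)
    print(result.severity, result.actions)
```

Keeping the routing table separate from the handlers means a new severity tier or response path is a one-line change, which is the kind of iteration a graph-based workflow is meant to support.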

Implementation strategy: From concept to product

The most significant advantage of LangChain for product managers is how it transforms the feature development process. Instead of writing detailed technical specifications for complex AI implementations, product managers can focus on articulating clear, language-driven tasks that deliver customer value.

Identifying AI-ready use cases

LangChain excels in scenarios involving:

- Text analysis and classification (threat reports, policy documents, compliance requirements)

- Automated decision-making (alert prioritization, access approvals, incident routing)

- Content generation (security reports, compliance documentation, threat summaries)

- Interactive assistance (security chatbots, guided remediation, training tools)

Example: Security policy analysis

Rather than building complex rule engines or hiring additional compliance specialists, a product manager can leverage LangChain to create an intelligent policy analyzer:

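from langchain.llms import OpenAI

from langchain.prompts import PromptTemplate

from langchain.chains import LLMChain

# Business logic defined by product management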

policy_template = """

Review this security policy and identify potential gaps or improvements:

Policy Document: {policy_text}

Please analyze for:

1. Regulatory Compliance (GDPR, SOX, HIPAA as applicable)

2. Industry Best Practices alignment

3. Common Security Vulnerabilities coverage

4. Implementation feasibility

5. Recommended updates or additions

Provide a structured analysis with specific recommendations:

"""

# Simple implementation that can be extended and modified

policy_analyzer = LLMChain(

llm=OpenAI(),

prompt=PromptTemplate(

input_variables=["policy_text"],

template=policy_template

)

)

This approach enables rapid iteration and testing. Product managers can refine prompts based on user feedback, adjust analysis criteria based on regulatory changes, and extend functionality without extensive development cycles.
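Because product managers will be editing the prompt text directly, it can help to check each revision before it ships. The sketch below uses only Python's standard library to confirm that a template still contains the placeholders the code expects. `template_fields` and `check_template` are hypothetical helpers, not part of LangChain.

```python
from string import Formatter
from typing import Set


def template_fields(template: str) -> Set[str]:
    """Collect the placeholder names used in a format-style template."""
    return {name for _, name, _, _ in Formatter().parse(template) if name}


def check_template(template: str, expected: Set[str]) -> None:
    """Raise ValueError if the template's placeholders differ from the expected set."""
    found = template_fields(template)
    if found != expected:
        missing = sorted(expected - found)
        unexpected = sorted(found - expected)
        raise ValueError(
            f"template placeholders do not match: missing={missing}, unexpected={unexpected}"
        )


# A revised prompt that keeps the expected placeholder passes silently
revised = "Review this security policy:\nPolicy Document: {policy_text}\n"
check_template(revised, {"policy_text"})

# A typo in the placeholder name is caught before the prompt reaches a model
try:
    check_template("Policy Document: {policy_txt}", {"policy_text"})
except ValueError as err:
    print(f"rejected: {err}")
```

A check like this could run in a review step or test suite, so a prompt edit never silently drops the variable it depends on.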

Technical comparison: LangChain vs. direct API integration

To illustrate LangChain’s value proposition, consider how the same security alert analysis task would be implemented using different approaches:

Traditional OpenAI API approach

# Legacy interface: openai.ChatCompletion requires openai<1.0

import openai

openai.api_key = "your_api_key"

alert_text = "Multiple failed login attempts detected from IP 192.168.1.5"

prompt = f"""

Analyze this security alert and determine if it requires immediate attention:

Alert: {alert_text}

Please provide:

1. Severity Level

2. Recommended Actions

3. Whether this needs immediate escalation

Analysis:

"""

response = openai.ChatCompletion.create(

model="gpt-3.5-turbo",

messages=[{"role": "user", "content": prompt}],

temperature=0.5,

)

print(response['choices'][0]['message']['content'])

This approach requires developers to manage API authentication, handle response parsing, implement error handling, and maintain prompt formatting manually. Product managers have limited ability to iterate on the prompt structure without developer involvement.
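As one illustration of the error handling a direct integration has to own, the sketch below retries a failing call with exponential backoff. `call_with_retries` and `flaky_call` are hypothetical names, the exception types are placeholders for whatever the chosen SDK actually raises, and the delay values are arbitrary.

```python
import time
from typing import Callable, Tuple, Type


def call_with_retries(
    call: Callable[[], str],
    attempts: int = 3,
    base_delay: float = 0.5,
    retry_on: Tuple[Type[Exception], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Run call(); on a retryable error wait base_delay * 2**attempt, then try again."""
    for attempt in range(attempts):
        try:
            return call()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** attempt)
    raise ValueError("attempts must be at least 1")


_state = {"calls": 0}


def flaky_call() -> str:
    """Simulated model call that fails twice before succeeding (assumption)."""
    _state["calls"] += 1
    if _state["calls"] < 3:
        raise ConnectionError("simulated network failure")
    return "Severity Level: Medium"


# Pass a no-op sleep so the demonstration finishes instantly
print(call_with_retries(flaky_call, sleep=lambda seconds: None))
```

Every team that calls the API directly ends up writing and maintaining some variant of this wrapper, which is part of the overhead the comparison is pointing at.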

LangChain-powered approach

from langchain.llms import OpenAI

from langchain.prompts import PromptTemplate

from langchain.chains import LLMChain

# Reusable template that product managers can modify

template = """

Analyze this security alert and determine if it requires immediate attention:

Alert: {alert_text}

Please provide:

1. Severity Level

2. Recommended Actions

3. Whether this needs immediate escalation

Analysis:

"""

prompt = PromptTemplate(input_variables=["alert_text"], template=template)

llm_chain = LLMChain(llm=OpenAI(), prompt=prompt)

# Clean execution with built-in error handling

result = llm_chain.run(alert_text="Multiple failed login attempts detected from IP 192.168.1.5")

print(result)

The LangChain approach provides several strategic advantages:

- Clear separation of concerns: Product managers own the prompt design; developers handle infrastructure

- Reusability: The same chain structure can be applied across multiple use cases

- Maintainability: Changes to business logic stay in the prompt template and leave the surrounding chain code untouched

- Scalability: Built-in handling of model management, caching, and error recovery
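The caching item can be pictured with a small wrapper that remembers answers by prompt, so a repeated alert does not trigger a second model call. This is a conceptual sketch, not LangChain's cache: `CachedModel` and `fake_llm` are hypothetical, and a real deployment would also need expiry and a shared store.

```python
import hashlib
from typing import Callable, Dict


class CachedModel:
    """Wrap a model call and reuse answers for prompts seen before."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self._llm = llm
        self._cache: Dict[str, str] = {}
        self.model_calls = 0  # how many times the underlying model actually ran

    def __call__(self, prompt: str) -> str:
        # Hash the prompt so long texts make compact, fixed-size cache keys
        key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        if key not in self._cache:
            self.model_calls += 1
            self._cache[key] = self._llm(prompt)
        return self._cache[key]


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    return "Severity Level: Medium"


model = CachedModel(fake_llm)
alert = "Alert: Multiple failed login attempts detected from IP 192.168.1.5"
model(alert)
model(alert)  # identical prompt, served from the cache
print(model.model_calls)  # prints 1
```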

Conclusion

LangChain represents more than just a technical framework—it’s a paradigm shift that empowers cybersecurity product managers to harness the full potential of artificial intelligence without becoming bogged down in implementation complexity. By abstracting away the technical intricacies of LLM integration, LangChain enables product teams to focus on what they do best: understanding customer needs, defining valuable features, and delivering competitive advantage.

The framework’s approach to modular, prompt-driven AI development aligns perfectly with modern product management methodologies. It enables rapid experimentation, iterative improvement, and cross-functional collaboration while maintaining the reliability and security standards that cybersecurity customers demand.