Artificial intelligence and machine learning applications have been transforming many industries for the past decade. With the arrival of generative AI tools such as ChatGPT, Bard, and Midjourney, they have become far more popular and are now used by individuals and businesses that might never have considered them before.

Despite their tremendous potential, AI models do not have true intelligence. Instead, AI and ML applications are systems that identify patterns within large, complex datasets and present those relationships to users in a meaningful way. They depend heavily on model optimization techniques to improve performance and produce desirable, context- and domain-specific results.

Prompt engineering and fine-tuning are two key optimization techniques used with GenAI models, especially large language models (LLMs).

What is prompt engineering?

User inputs to a generative AI model play a pivotal role in shaping the result it produces. These inputs are called prompts, and the process of writing them is called prompt engineering. A prompt engineer crafts prompts that steer a GenAI tool toward better answers, improving its performance on tasks such as writing articles, generating code, interacting with customers, and even producing music and videos.

Prompt engineering to elicit better answers from the AI model

Your results are only as good as your prompts. Prompt optimization is the art of crafting more precise and detailed prompts or experimenting with words and phrases to elicit better outputs from a generative AI model. In other words, prompt engineers write a prompt in different ways to get the most desirable and contextually relevant results.

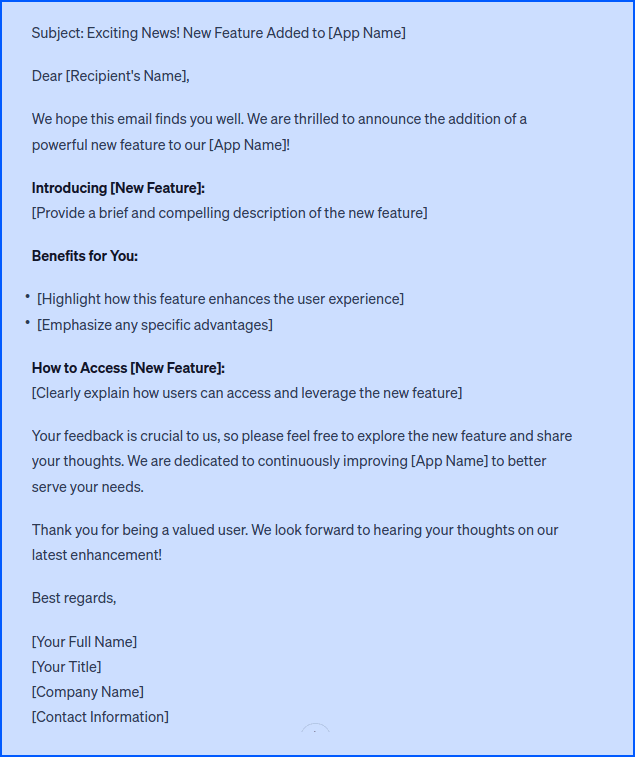

Here is an example. Say you need to write an email to inform about the new feature on your loan app, InstaCash. You write a simple prompt in ChatGPT: “Write a crisp email regarding the additional feature to our loan app.” The output looks like this:

The content is quite ordinary and is not likely to catch the attention and interest of your target audience.

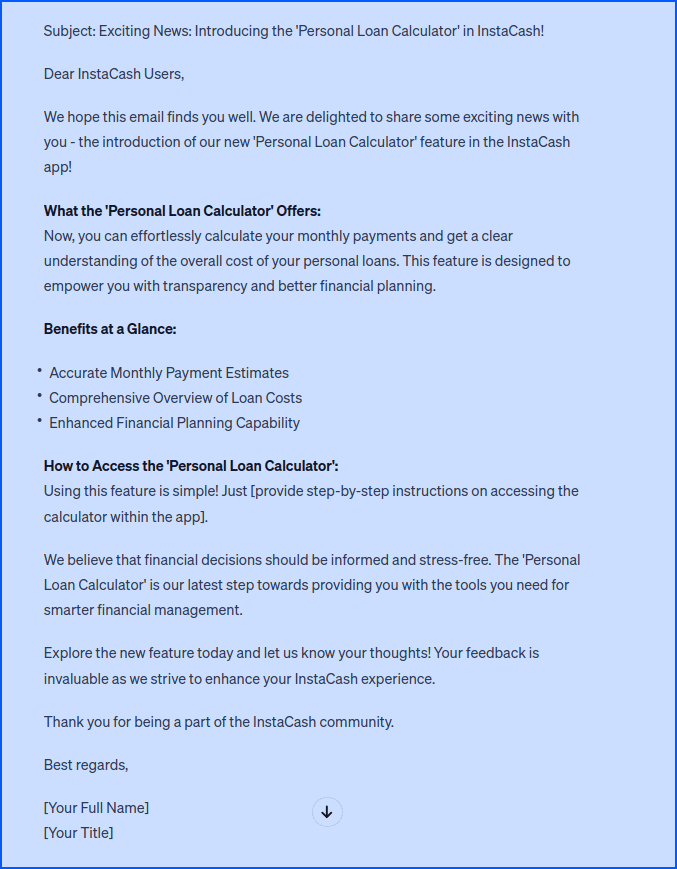

Let’s try again with a more carefully phrased and specific prompt: “Craft an email announcing the addition of the ‘Personal Loan Calculator’ feature to our InstaCash app. This feature allows borrowers to calculate monthly payments and the overall cost of their loans.” The output is:

As you can see a prompt can be engineered by providing a generative AI model with more detailed and specific input.
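The same idea can be captured in code. The minimal Python sketch below assembles the more specific InstaCash prompt from explicit pieces of context instead of writing it by hand each time. The `build_prompt` helper and its parameters are hypothetical names chosen for illustration, and the tone and word-limit instructions are assumptions that go beyond the prompt shown above.

```python
def build_prompt(task, product, feature, capability, tone="friendly", max_words=150):
    """Assemble a specific prompt from explicit pieces of context.

    All parameter names are illustrative; tone and max_words are extra
    constraints assumed for this sketch, not part of the article's prompt.
    """
    return (
        f"{task} announcing the addition of the '{feature}' feature "
        f"to our {product} app. "
        f"This feature allows borrowers to {capability}. "
        f"Use a {tone} tone and keep it under {max_words} words."
    )


# The vague prompt from the first attempt, kept for comparison.
vague_prompt = "Write a crisp email regarding the additional feature to our loan app."

# The engineered prompt: same request, but with the missing details filled in.
specific_prompt = build_prompt(
    task="Craft an email",
    product="InstaCash",
    feature="Personal Loan Calculator",
    capability="calculate monthly payments and the overall cost of their loans",
)

print(vague_prompt)
print(specific_prompt)
```

Keeping the details as named arguments makes it easy to try variations (a different tone, a shorter length) and compare the outputs, which is most of what prompt optimization involves in practice.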

What is fine-tuning?

Fine-tuning is another technique for unlocking the full potential of generative AI models. Businesses can use it to harness LLM capabilities for specific tasks and achieve better results. The process takes a GenAI model that has already been trained and customizes it to suit the specific needs and goals of the people or organizations using it.

Essentially, fine-tuning refines a pre-trained model so that it performs better on specific tasks by training it further on a carefully labeled dataset closely related to the task at hand. This lets models adapt to niche domains such as customer support, medical research, and legal analysis. The power of fine-tuning depends on the additional data and the training process: the additional data is new information the model has not seen before, and training is often coupled with a feedback or rating system that evaluates the model’s outputs and guides it toward better results.
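To make the "carefully labeled dataset" concrete, here is a minimal Python sketch that turns question-and-answer pairs into JSON Lines training records. The example pairs are invented for illustration, and the record layout (a `messages` list with `system`, `user`, and `assistant` roles) is an assumption modeled on common chat-style fine-tuning formats. Check your provider's documentation for the exact format it expects.

```python
import json

# Hypothetical labeled examples for a customer-support use case.
labeled_examples = [
    {
        "question": "How do I check my loan balance?",
        "answer": "Open the app, go to 'My Loans', and select the loan to see its balance.",
    },
    {
        "question": "Can I repay my loan early?",
        "answer": "Yes. Choose 'Repay early' on the loan details screen to see the payoff amount.",
    },
]


def to_training_record(example, system_prompt):
    """Convert one labeled example into a chat-style training record."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": example["question"]},
            {"role": "assistant", "content": example["answer"]},
        ]
    }


def to_jsonl(examples, system_prompt):
    """Serialize all examples as JSON Lines: one JSON object per line."""
    return "\n".join(
        json.dumps(to_training_record(example, system_prompt)) for example in examples
    )


jsonl_text = to_jsonl(labeled_examples, "You are a support assistant for a loan app.")
print(jsonl_text)
```

Each line pairs an input with the answer a human expert considers correct, which is what "labeled" means here. The quality of these pairs largely determines how well the fine-tuned model behaves.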

Fine-tuning an AI model incurs additional costs, but it is often much more cost-effective in the long run than training a language model from scratch. Moreover, applying prompt engineering techniques to every single exchange with a chatbot is impractical, which is where a fine-tuned model can help.

Prompt engineering vs fine-tuning

Both prompt engineering and fine-tuning play an important role in enhancing the performance of AI models. However, they differ in several important respects:

Prompt engineering helps users elicit more accurate and relevant responses, whereas fine-tuning optimizes the performance of a pre-trained AI model on specific tasks.

Prompt engineering requires users to phrase the prompt in different ways and provide more context by giving effective inputs, adding new information, clarifying the query, and even making requests in sequence. Fine-tuning, on the other hand, trains an AI model on additional datasets to broaden its knowledge and tailor it to a business’s specific requirements.

While prompt engineering is a precision-focused approach that offers more control over a model’s actions and outputs, fine-tuning focuses on giving the language model deeper knowledge of the topics relevant to its intended use.

Because prompts are written by humans, prompt engineering requires little or no additional computing resources. Fine-tuning, by contrast, involves training a language model on additional datasets, which may require substantial computing resources.
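One of the prompt-side tactics mentioned above, making requests in sequence, can be sketched in a few lines of Python. Each answer is passed to the next prompt as context, and nothing about the model itself changes. The `run_in_sequence` helper is a hypothetical name, and `echo_model` is a stand-in that only labels its input so the flow is visible. In practice you would replace it with a call to whichever model you use.

```python
def run_in_sequence(model, steps, context=""):
    """Send prompts one after another, feeding each answer into the next prompt."""
    answers = []
    previous = context
    for step in steps:
        # Attach the previous answer (if any) as context for the current request.
        prompt = f"{step}\n\nContext:\n{previous}" if previous else step
        previous = model(prompt)
        answers.append(previous)
    return answers


def echo_model(prompt):
    """Stand-in for a real model call (an assumption for this sketch)."""
    first_line = prompt.splitlines()[0]
    return f"[answer to: {first_line}]"


steps = [
    "List the key benefits of a loan calculator feature.",
    "Turn the benefits in the context into an email outline.",
    "Write the final email from the outline in the context.",
]

answers = run_in_sequence(echo_model, steps)
for answer in answers:
    print(answer)
```

The only work done here is string handling on the caller's side, which is why prompt engineering adds almost no computing cost of its own.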

Prompt engineering and fine-tuning are both effective techniques for improving a model’s ability to deliver accurate and desirable outputs. However, they play different roles: fine-tuning updates the model’s internal parameters to customize it for specific needs, while prompt engineering crafts better prompts at inference time to obtain better outputs from the model as it is. The effectiveness of both techniques depends on the human experts in the loop.
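The difference between changing the input and changing the model can be illustrated with a deliberately tiny example. In the Python sketch below, a one-variable linear model stands in for a language model: choosing a different `x` corresponds to changing the prompt, while `fine_tune` changes the parameters themselves through gradient descent on a small task dataset. This is only an analogy under simplifying assumptions. Real fine-tuning updates far more parameters and uses specialized training libraries, and all names and numbers here are illustrative.

```python
def predict(weight, bias, x):
    """The 'model': its behavior is fully determined by weight and bias."""
    return weight * x + bias


def mse(weight, bias, data):
    """Mean squared error of the model on a dataset of (x, y) pairs."""
    return sum((predict(weight, bias, x) - y) ** 2 for x, y in data) / len(data)


def fine_tune(weight, bias, data, learning_rate=0.1, epochs=500):
    """Gradient descent on MSE, starting from the existing parameters."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (predict(weight, bias, x) - y) * x for x, y in data) / n
        grad_b = sum(2 * (predict(weight, bias, x) - y) for x, y in data) / n
        weight -= learning_rate * grad_w
        bias -= learning_rate * grad_b
    return weight, bias


# "Pre-trained" parameters and a small task-specific dataset following y = 2x + 1.
pretrained = (1.0, 0.0)
task_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]

# Changing the input changes the output, but the parameters stay the same.
print(predict(*pretrained, 1.0), predict(*pretrained, 2.0))

# Fine-tuning changes the parameters, so the same input now gives a different output.
tuned = fine_tune(*pretrained, task_data)
print("error before:", mse(*pretrained, task_data))
print("error after: ", mse(*tuned, task_data))
```

After fine-tuning, the error on the task data drops because the parameters have moved toward values that fit it, which is the kind of change no prompt can make.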