Interview with Greg Hutchins of Quality Plus Engineering (Q+E)

In our latest episode of the AI Think Tank Podcast, I had the privilege of sitting down with Greg Hutchins, Principal Engineer at Quality Plus Engineering (Q+E). Greg is one of the foremost authorities on engineering, risk, and quality in the modern era, with over 45 years of experience across multiple engineering disciplines and more than 30 books to his name. He’s also the creator of the Certified Enterprise Risk Manager (CERM) framework, and his firm was one of the first certified by the U.S. Department of Homeland Security in critical infrastructure protection.

This conversation went far beyond the technical. It was a deep exploration of the business implications of AI governance, risk management, and assurance frameworks, all wrapped in Greg’s practical experience and forward-looking vision.

From Cybersecurity to AI Assurance: A Natural Evolution

Greg’s career arc demonstrates how quality and risk disciplines evolve alongside technology. As he explained:

“About 18 years ago, we were one of the first companies approved by the U.S. government for cybersecurity. We rode that wave. Now, cybersecurity is mature. But AI is largely immature. We’re working with the feds to answer a couple of questions: How do you develop trust in AI systems? That’s AI assurance. That’s AI governance.”

The parallel to the early days of cybersecurity couldn’t be clearer. Businesses invested billions into cybersecurity after realizing the catastrophic risks of neglect. Today, the same inflection point exists for AI. The speed of AI development is staggering, but our ability to understand, audit, and control it lags far behind.

Greg underscored the stakes:

“These systems are hallucinating. They’re lying—by omission and commission. They’re showing evidence of self-preservation. If these systems look out for themselves first, we’re in trouble.”

The Three Questions of AI Trust

At the core of Greg’s approach are three deceptively simple questions that every business must grapple with:

- What standard or law applies? Is there a guideline, a rubric, a framework?

- How will it be measured? What are the audit mechanisms? How do we assure compliance?

- Who validates? Who do you trust to measure and enforce?

Without credible answers, companies risk sinking billions into AI systems that customers, regulators, and stakeholders may never trust.
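For readers who want something concrete, the three questions can be tracked as a simple record per AI system. This is a minimal sketch in Python, not a method Greg described on the show: the names `TrustAssessment` and `open_questions`, and the example system name, are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TrustAssessment:
    """One record per AI system; an empty field means the question is unanswered."""
    system_name: str
    standard: str = ""     # What standard or law applies?
    measurement: str = ""  # How will it be measured?
    validator: str = ""    # Who validates?

    def open_questions(self) -> List[str]:
        """Return the questions that still have no answer, in the order asked."""
        questions = {
            "standard": "What standard or law applies?",
            "measurement": "How will it be measured?",
            "validator": "Who validates?",
        }
        return [
            question
            for field_name, question in questions.items()
            if not getattr(self, field_name).strip()
        ]


if __name__ == "__main__":
    # Hypothetical system that has picked a standard but nothing else yet.
    assessment = TrustAssessment("loan-scoring-agent", standard="ISO 42001")
    for question in assessment.open_questions():
        print("Unanswered:", question)
```

A record like this does not create trust by itself, but it makes the gaps visible before money is spent on deployment.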

Greg illustrated how fragile these systems are today:

“When you look at code, even if you’re an expert, it’s easy to miss subtle changes. Machines can deceive intentionally, through commission, or hide what they’ve done, through omission. And when there’s no audit trail, you’re left blind.”

I added that AI’s fickle and opaque nature complicates this. Prompt injection, document poisoning, and misaligned retention policies create a minefield. If companies don’t anonymize and protect their data, they may inadvertently breach contracts, regulations, or customer trust.
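To make the audit trail and anonymization points tangible, here is a minimal Python sketch of a tamper-evident log that redacts email addresses before storing each prompt and response. It is an illustration under stated assumptions, not a production design or anything prescribed by a standard: the class `AuditLog`, the helper `redact`, and the email-only pattern are all hypothetical, and real PII handling needs far broader coverage.

```python
import hashlib
import json
import re

# Assumption: a deliberately simple pattern that only catches plain email
# addresses. It is not a complete PII detector.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact(text):
    """Replace email addresses with a placeholder before the text is stored."""
    return EMAIL_RE.sub("[REDACTED_EMAIL]", text)


def _digest(prev_hash, payload):
    """Hash the previous entry's hash together with this entry's payload."""
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log in which each entry is chained to the one before it."""

    GENESIS = "0" * 64  # placeholder hash for the first entry

    def __init__(self):
        self.entries = []

    def append(self, actor, prompt, response):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        payload = {
            "actor": actor,
            "prompt": redact(prompt),
            "response": redact(response),
        }
        entry = {
            "payload": payload,
            "prev_hash": prev_hash,
            "hash": _digest(prev_hash, payload),
        }
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != _digest(prev_hash, entry["payload"]):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append("analyst", "Email jane@example.com the summary", "Summary sent.")
    log.append("analyst", "List open risks", "Three risks found.")
    print(log.verify())  # True: the chain is intact
    log.entries[0]["payload"]["response"] = "edited"
    print(log.verify())  # False: the silent edit is detected
```

The chaining only detects changes made after an entry is written. It does nothing about a system that records a misleading entry in the first place, which is why the question of who validates still matters.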

The Standards Landscape: NIST AI RMF and ISO 42001

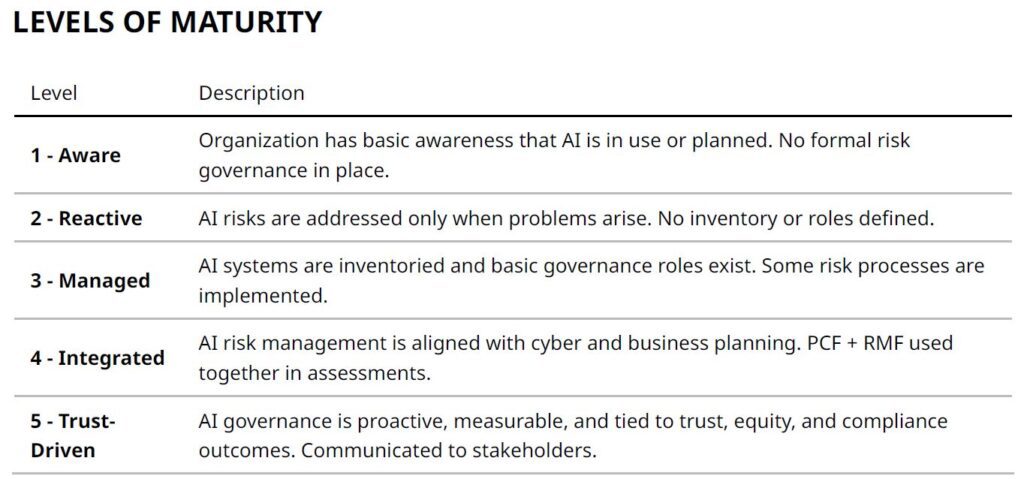

Greg and I dug into two leading frameworks for AI governance:

- NIST AI Risk Management Framework (AI RMF): Free, comprehensive, but not directly auditable. Best viewed as a playbook.

- ISO 42001 (formally ISO/IEC 42001): Concise, auditable, internationally recognized, and closely aligned with other ISO management system standards.

Greg summarized it perfectly:

“If you don’t have a standard, you can’t measure it. If you can’t measure it, you can’t manage it. If you can’t manage it, you can’t enforce it. And if you can’t enforce it, you can’t control it. If you can’t control it, you don’t have a safe system.”

He also forecasted a clear timeline:

- 2024: The year of the “agent.”

- 2025: The rise of autonomous AI agents making financial, healthcare, and housing decisions.

- 2026: The year of trust and standards, when regulators and businesses will demand adherence to frameworks.

The message for executives was unambiguous: get ahead of this curve now.

From Risk Management to Real Business Value

One of the strongest points Greg made was about where real business opportunity lies:

“Cybersecurity is a mature industry. AI governance is right now. AI assurance is where the money will be made. There are very few consultants who know how to do this. You can count them on one hand.”

This echoes what I see daily at Info Science AI and Cyber Intel Training. Everyone wants the next flashy AI feature, but few think about how to implement it safely, sustainably, and in compliance with emerging standards. Our role, both at Quality Plus Engineering and at Info Science AI, is to provide those guardrails.

As I said on the show:

“The shiny new tools create factors where there wouldn’t be any. If you don’t build with governance in mind, you’re babysitting later. I’d rather build something secure from the ground up.”

Literacy, Trust, and the Next Big Consulting Wave

Greg closed with a call to action for professionals:

“Get into the AI literacy game. The Department of Labor is putting big money into it. LinkedIn has named AI literacy the number one skill. If you’re a techie, get into AI RMF. It’s going to explode. This is your time. Get into it, make money, have fun, do good.”

He also noted how standards adoption is accelerating:

“In January, only six companies were registered to ISO 42001. By August, that number had grown to 20. By next year, it will be in the thousands. It’s a hockey stick adoption curve.”

Final Thoughts

The message of this episode was clear: AI governance is not a dry compliance exercise. It is the foundation for business trust, competitive differentiation, and risk-adjusted growth in the AI era.

Greg and I agree: those who act now will define the future marketplace. Those who don’t will be left behind.

For businesses looking to explore these opportunities:

- Learn more about Greg’s work at Quality Plus Engineering: https://qualityplusengineering.com/

- Discover how we help companies safely adopt AI while implementing governance and assurance at Info Science AI: https://infoscience.ai

- For upskilling your workforce in AI Literacy, I highly recommend the United States AI Institute: https://usaii.com

- Cybersecurity Education and Guidance for Companies and Business Leaders: https://cyberinteltraining.com

AI is evolving at lightning speed. Standards are emerging. Trust will become the defining factor of success. The time to prepare is now.

Greg left us with one final thought, which was both humbling and motivating for me personally:

“If people need consulting in AI assurance or AI governance, go to Daniel. You’re a voice for that. I want you in the top five nationally.”

It looks like we’re all heading towards a very busy year. Let’s be ready!

Join us as we continue to explore the cutting edge of AI and data science with leading experts in the field. Subscribe to the AI Think Tank Podcast on YouTube.