Several of my friends have challenged me to get involved in the AI ethics discussion. I certainly do not have any special ethics training. But then again, maybe I do. I’ve been going to church most Sundays (and not just on Christmas Eve) since I was a kid and have been taught a multitude of “ethics” lessons from the Bible. So, respectfully, let me take my best shot at sharing my thoughts about the critical importance of the AI Ethics topic.

What is AI Ethics?

Ethics is defined as the moral principles that govern a person’s behavior or actions, where moral principles are the principles of “right and wrong” that are generally accepted by an individual or a social group. “Right or wrong” behaviors… not exactly something that is easily codified in a simple mathematical equation. And this is what makes the AI ethics discussion so challenging and so important.

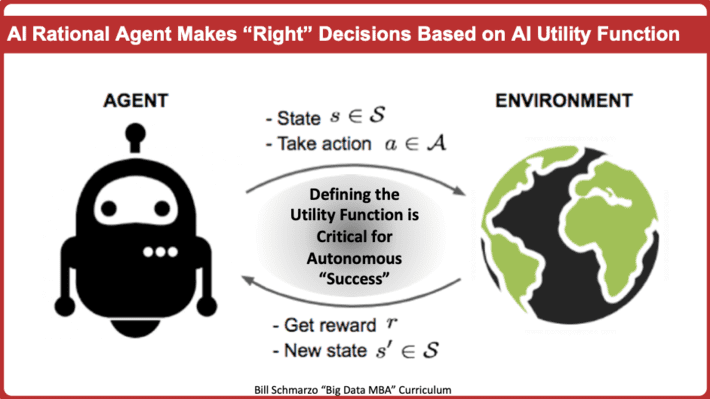

To understand the AI ethics quandary, one must first understand how an AI model makes decisions:

- The AI model relies upon the creation of “AI rational agents” that interact with the environment to learn, where learning is guided by the definition of the rewards and penalties associated with actions.

- The rewards and penalties against which the “AI rational agents” seek to make the “right” decisions are framed by the definition of value as represented in the AI Utility Function.

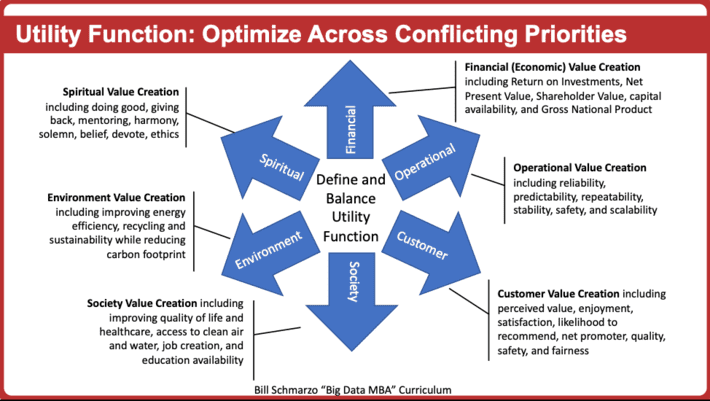

- In order to create an “AI rational agent” that makes the “right” decision, the AI Utility Function must comprise a holistic definition of “value” that includes financial/economic, operational, customer, societal, environmental and spiritual dimensions.

Bottom line: the “AI rational agent” determines “right and wrong” based upon the definition of value as articulated in the AI Utility Function (see Figure 1).

Simple, right?

Figure 1: AI Rational Agent Makes “Right” Decisions based on AI Utility Function

It isn’t the AI models that scare me. I’m not afraid that the AI models won’t work as designed. My experience to date is that AI models work great. But the thing is, AI models will strive to optimize exactly what they have been programmed to optimize by humans via the AI Utility Function.

And that’s where we should focus the AI ethics conversation, because humans tend to make poor decisions. Just visit Las Vegas if you doubt that statement (or read “Data Analytics and Human Heuristics: How to Avoid Making Poor Decisions”). The effort by humans to define the rules against which actions will be measured sometimes results in unintended consequences.

Ramifications of Unintended Consequences

Shortcutting the process to define the measures against which to monitor any complicated business initiative is naïve…and ultimately dangerous. The article “10 Fascinating Examples of Unintended Consequences” details actions “believed to be good” that ultimately led to disastrous outcomes, including:

- The SS Eastland, a badly designed, ungainly vessel, was supposed to be made safer by adding several lifeboats. Unfortunately, the extra weight of the lifeboats caused the ship to capsize, trapping and killing more than 800 people below the decks.

- The Treaty of Versailles dictated peace terms to Germany at the end of World War I. Unfortunately, the harsh terms bred resentment that Adolf Hitler and his followers exploited on their rise to power, leading to World War II.

- The Smokey Bear Wildfire Prevention campaign created decades of highly successful fire prevention. Unfortunately, this disrupted the normal fire processes that are vital to the health of the forest. The result is megafires that destroy everything in their path, even huge pine trees that had stood for several thousand years through normal fire conditions.

See “Unintended Consequences of the Wrong Measures” for more insights into the challenges of properly defining the criteria against which progress and success will be measured. And if you want to know just how bad it can get, check out “Real-World Data Science Challenge: When Is ‘Good Enough’ Actually ‘Good Enough’” to understand the costs of false positives and false negatives. Yes, improperly defined metrics and an inadequate understanding of the costs of False Positives and False Negatives can lead to disastrous results (see the Boeing 737 MAX)!

One can mitigate unintended consequences and the costs associated with False Positives and False Negatives by bringing together diverse and even conflicting perspectives to thoroughly debate and define the AI Utility Function.
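To make the cost of False Positives and False Negatives a bit more concrete, here is a minimal Python sketch. The function name, the error counts and the dollar costs are all hypothetical and chosen only for illustration; the point is simply that the model with fewer total errors is not necessarily the cheaper one when the two kinds of error carry very different costs.

```python
def expected_error_cost(false_positives, false_negatives, cost_per_fp, cost_per_fn):
    """Total cost of a model's mistakes, weighting each error type by its own cost."""
    return false_positives * cost_per_fp + false_negatives * cost_per_fn


# Hypothetical costs: a missed problem (false negative) is assumed to cost
# 100x more than a false alarm (false positive).
COST_PER_FP = 100
COST_PER_FN = 10_000

# Model A makes 52 errors in total, Model B only 15.
model_a_cost = expected_error_cost(50, 2, COST_PER_FP, COST_PER_FN)
model_b_cost = expected_error_cost(5, 10, COST_PER_FP, COST_PER_FN)

print("Model A cost:", model_a_cost)
print("Model B cost:", model_b_cost)
```

Under these assumed costs, Model B looks better on a simple error count but is far more expensive, which is exactly the kind of trap a poorly defined metric can hide.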

Defining the AI Utility Function

To create a “rational AI agent” that understands how to differentiate between “Right and Wrong” actions, the AI model must work from a holistic AI Utility Function that contemplates “value” across a variety of often-conflicting dimensions. For example, the AI Utility Function might seek to increase financial value while reducing operational costs and risks, improving customer satisfaction and likelihood to recommend, improving societal value and quality of life, and reducing environmental impact and carbon footprint (see Figure 2).

Figure 2: “Why Utility Determination is Critical to Defining AI Success”
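One simple way to picture a utility function that spans several value dimensions is as a weighted sum. The Python sketch below is only an illustration under that assumption: the dimension names, the weights, the candidate actions and their scores are all hypothetical, and a real AI Utility Function would be debated and defined by the diverse group of stakeholders described above.

```python
# Hypothetical weights for each value dimension (they sum to 1.0 here).
WEIGHTS = {
    "financial": 0.25,
    "operational": 0.15,
    "customer": 0.20,
    "societal": 0.15,
    "environmental": 0.15,
    "ethical": 0.10,
}


def utility(scores, weights=WEIGHTS):
    """Weighted sum of per-dimension scores (each assumed to be on a -1..1 scale)."""
    return sum(weight * scores.get(dim, 0.0) for dim, weight in weights.items())


def choose_action(candidates, weights=WEIGHTS):
    """Return the name of the candidate action with the highest utility."""
    return max(candidates, key=lambda name: utility(candidates[name], weights))


# Hypothetical candidate actions with made-up scores per dimension.
candidates = {
    "cut_costs_aggressively": {
        "financial": 0.9, "operational": 0.6, "customer": -0.5,
        "societal": -0.4, "environmental": -0.3, "ethical": -0.6,
    },
    "balanced_improvement": {
        "financial": 0.4, "operational": 0.3, "customer": 0.5,
        "societal": 0.3, "environmental": 0.2, "ethical": 0.4,
    },
}

print(choose_action(candidates))                      # holistic weights
print(choose_action(candidates, {"financial": 1.0}))  # financial value only
```

With the holistic weights the agent prefers the balanced action; when the utility function counts only financial value, the very same agent picks aggressive cost cutting. The agent did not change, only the definition of value did.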

And ethics MUST be one of those value dimensions if we are to create AI Utility Functions that enable AI rational agents to distinguish right from wrong decisions. Which brings us to a very important concept – the difference between Passive Ethics and Proactive Ethics.

Passive Ethics versus Proactive Ethics

When debating ethics, we must contemplate the dilemma between Passive Ethics and Proactive Ethics. And it starts with a story that we all learned at a very young age – The Parable of the Good Samaritan.

The story is told by Jesus about a Jewish traveler who is stripped of clothing, beaten, and left for dead alongside the road. First a priest and then a Levite come by, but both cross the road to avoid the man. Finally, a Samaritan (Samaritans and Jews despised each other) happens upon the traveler and helps the battered man anyway. The Samaritan bandages his wounds, transports him on his beast of burden to an inn to rest and heal, and pays for the traveler’s care and accommodations at the inn.

The priest and the Levite operated under the Passive Ethics of “Do No Harm”. Technically, they did nothing wrong.

But that mindset is totally insufficient in a world driven by AI models. Our AI models must embrace Proactive Ethics by seeking to “Do Good”; that is, our AI models and the AI Utility Function that guides the operations of the AI model must proactively seek to do good.

There is a HUGE difference between “Do No Harm” and “Do Good,” as the Parable of the Good Samaritan demonstrates very well.
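The difference can be sketched in a few lines of Python. Everything here is a hypothetical illustration: the action names and the “harm” and “good” scores are made up to mirror the parable, with Passive Ethics treated as a filter (is this action harmless?) and Proactive Ethics treated as an objective (which harmless action does the most good?).

```python
# Hypothetical scores for the choices in the parable.
ACTIONS = {
    "cross_the_road": {"harm": 0, "good": 0},
    "help_the_traveler": {"harm": 0, "good": 10},
}


def passive_ethics_allows(action):
    """'Do No Harm': any action that causes no harm is acceptable."""
    return ACTIONS[action]["harm"] == 0


def proactive_ethics_choice():
    """'Do Good': among the harmless actions, pick the one that does the most good."""
    harmless = [name for name in ACTIONS if passive_ethics_allows(name)]
    return max(harmless, key=lambda name: ACTIONS[name]["good"])


print(passive_ethics_allows("cross_the_road"))  # the priest and the Levite pass this check
print(proactive_ethics_choice())                # the Samaritan's choice
```

In this sketch, crossing the road passes the “Do No Harm” check just as easily as helping does, so only an objective that rewards doing good separates the Samaritan from the priest and the Levite.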

AI Ethics Summary

Let’s wrap up this blog on AI Ethics with a simple ethics test that I call the “Mom Test”. Here’s how it works: If you were to tell your mom what decisions or actions you took in a particular matter, would she be proud of your decision or disappointed in it? Unless your mom is Ma Baker, that simple test would probably minimize many of our AI ethics concerns.

As humans define the AI Utility Function that serves to distinguish between right and wrong decisions, we MUST understand the difference between Passive Ethics and Proactive Ethics. Otherwise, get ready to live in a world where we are hunted by a multitude of Terminators, because as Arnold says, “I’ll be back”…