Why do data silos, and now analytic silos, continue to exist? It can’t be due to technical issues. Data silos appeared in the 1990s when we were trying to make Relational Database Management Systems (RDBMS) – which were architected for single-record transaction processing – perform massive table scans to identify the trends, patterns, and relationships buried in the data. Thank God those days are over. Technology innovations such as massively parallel processing (MPP) databases, key-value stores, and cloud-based data architectures like Amazon S3 have eliminated many of the technical issues that necessitated these isolated data and analytic repositories.

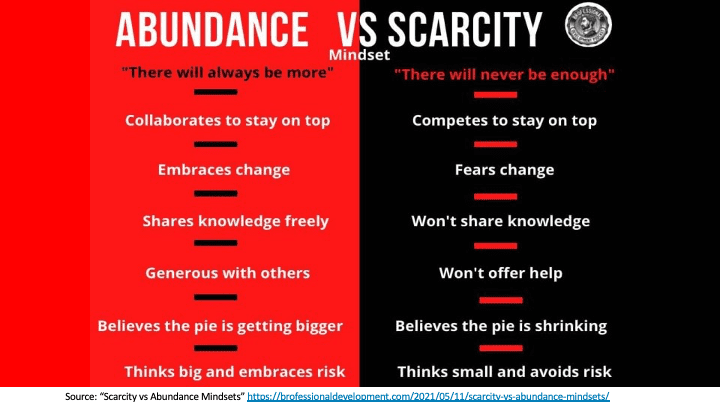

Today, data and analytic silos tend to be a cultural issue that ferments under the belief that what I have, and others don’t have, makes me stronger while making them weaker. These data and analytic silos persist due to an outdated “scarcity mentality” – a mindset where opportunities, resources, and successes are limited and as such must be guarded or hoarded.

A Scarcity Mentality is an “I win, you lose” mentality

On the other hand, an “abundance mentality” is a willingness to freely share and synergize with others with the realization that the more you share and blend, the more you gain. Those with an abundance mentality believe there are plenty of resources, relationships, wealth, and opportunities for everyone (Figure 1).

Figure 1: Professional Podcast “Abundance versus Scarcity Mindset”

Stephen Covey, in his book “The Seven Habits of Highly Effective People”, described an abundance mentality as a way of thinking in which a person believes there are enough resources and successes to share with others.

Now, in a knowledge-based world where the “economies of learning” are more powerful than the “economies of scale”, a scarcity mentality prevents organizations from fully exploiting the unique economic characteristics of data and analytics – economic assets that can continuously learn, adapt, and refine the more that they are shared and reused.

Data and analytics are economic assets that never deplete, never wear out, and can be used across an unlimited number of use cases at zero marginal cost. Better yet, data and analytic assets can appreciate in value, not depreciate, the more that they are shared and reused…economic assets that can continuously learn, adapt, and refine.

Let’s see what we can learn from open-source software about the power of an abundance mentality and the economies of learning.

Economies of Learning Lessons from Open-Source Software Movement

The open-source software movement highlights the economics of sharing, reusing, and continuously refining. Open-source software exploits the economies of learning by nurturing the collaborative development and refinement of critical digital technologies.

For example, Google open-sourced TensorFlow to gain tens of thousands of additional users across thousands of new use cases to improve the predictive and operational effectiveness of the TensorFlow AI/ML library that runs Google’s incredibly profitable business. Google uses TensorFlow to power its Personal Photo App (it auto-magically groups your photos into storyboards), recognize spoken words (natural language processing), translate foreign languages, and serve as the basis for its insanely profitable search engine. Arguably, TensorFlow is the foundation for everything that makes Google money.

By open-sourcing TensorFlow, Google can accelerate TensorFlow’s learning by exposing it to a wider variety of use cases, ultimately making the engine that runs Google that much more effective – beyond what Google could do on its own. Google is letting the world – including its competitors – improve the very engine that powers its business models and, in effect, challenging anyone to beat it at its own game…a ballsy and brilliant move.

Bottom line: the open-source software movement – driven by the ability to share, reuse, and continuously refine these software assets – changed the very economics of software development.

See my blog “Economic Value of Learning and Why Google Open Sourced TensorFlow” for more on the economies of learning lesson from Google’s decision to open-source TensorFlow.

“Economies of Learning” versus “Economies of Scale”

Historically, organizations have created competitive advantage and an impregnable market position through “economies of scale”. These organizations built massive market and operational moats against competition through massive investments in scale – mass procurement, mass production, mass distribution, and mass marketing.

Economies of scale are the cost advantages that an organization can achieve due to its scale of operation, with the objective of driving down the cost per unit of production or output.
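To make the cost-per-unit idea concrete, here is a minimal sketch. It assumes the simplest possible cost model (a fixed cost spread across all units plus a constant variable cost per unit); the function name and the dollar figures are hypothetical and chosen only for illustration.

```python
def unit_cost(fixed_cost: float, variable_cost_per_unit: float, units: int) -> float:
    """Average cost per unit under a simple fixed-plus-variable cost model (an assumption)."""
    if units <= 0:
        raise ValueError("units must be positive")
    # The fixed cost is spread over every unit produced, so it shrinks as output grows.
    return fixed_cost / units + variable_cost_per_unit


# Hypothetical numbers: $1M of fixed investment, $5 of variable cost per unit.
for units in (1_000, 10_000, 100_000):
    print(f"{units:>7} units -> ${unit_cost(1_000_000.0, 5.0, units):,.2f} per unit")
```

In this toy model, the cost per unit falls as output rises, which is the inverse relationship between fixed costs and output that scale-driven organizations rely on.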

The economies of learning have eroded the powerful inverse relationship between fixed costs and output that defined economies of scale. Agile, fast-learning companies can capture and dominate rapidly evolving markets and successfully challenge “economies of scale” companies that are weighed down by decades of investments in scale.

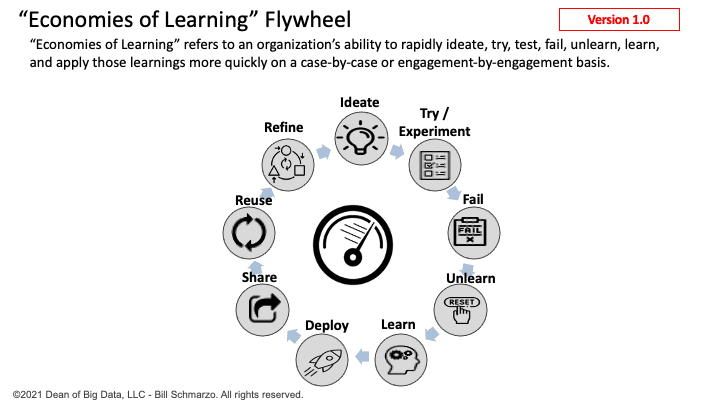

Economies of Learning refers to an organization’s ability to rapidly ideate, try, test, fail, unlearn, learn, and apply those learnings more quickly on a case-by-case or engagement-by-engagement basis (Figure 2).

Figure 2: Economies of Learning Flywheel

Characteristics of companies that can exploit the “economies of learning” include:

- Compete in knowledge-based, dynamic markets where environment, economic, social, political, and operational conditions and customer and market demands are constantly changing.

- Embrace applying the “scientific method” to every decision to learn what works and what doesn’t, and reapply those learnings to the next series of decisions.

- Focus on learning, not optimization, since optimizing what works today can result in “optimizing the cow path” in markets and environments under constant change.

- Exploit the “law of compounding” where a series of small learning-driven improvements can have significant impact (e.g., a 1% improvement compounded 365 times yields a nearly 38X improvement in performance and effectiveness).

- Embrace a culture of learning from failures. Failures are only bad if 1) one doesn’t thoroughly contemplate the ramifications of failure before taking the action, and 2) the lessons from the failure aren’t captured and shared across the organization.
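The “law of compounding” figure in the list above is easy to check. The short sketch below is just the arithmetic; the function name is hypothetical, and it assumes each improvement builds on the result of the previous one.

```python
def compound(rate: float, periods: int) -> float:
    """Growth multiple after applying the same fractional improvement `periods` times."""
    return (1.0 + rate) ** periods


# A 1% improvement, compounded 365 times (e.g., once a day for a year).
multiple = compound(0.01, 365)
print(f"1% compounded 365 times: {multiple:.2f}x")  # roughly 37.8x
```

The key assumption is that the learnings stack: a 1% gain that is not carried forward into the next decision adds up linearly rather than compounding.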

Note: Interesting how the “Economies of Learning” that we can apply to organizations are similar to the Deep Learning concepts of Stochastic Gradient Descent and Backpropagation.

- Backpropagation is a mathematically based technique for working out how much each weight in a neural network contributed to the prediction error, by pushing the errors from the model’s results back through the network, layer by layer. Those error signals (gradients) tell us in which direction to adjust each weight so that the expected model results move closer to the actual model results.

- Stochastic Gradient Descent is a mathematically based optimization algorithm (think first derivative in calculus) used to minimize some cost function by iteratively moving in the direction of steepest descent as defined by the negative of the gradient (slope). Gradient descent uses the gradients computed by backpropagation to guide the updates being made to the weights of our neural network model, one small sample of the data at a time.
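For readers who want to see the try, measure, adjust loop in code, here is a toy sketch of the two concepts above. It is a deliberate simplification: a single “neuron” (one weight and one bias) fitted to made-up data, so the backward pass is a single chain-rule step rather than the layer-by-layer pass a real neural network would need. The function name, learning rate, and data are all assumptions for illustration.

```python
import random


def train_sgd(samples, learning_rate=0.05, epochs=200, seed=0):
    """Fit y ~ w * x + b with stochastic gradient descent on squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = list(samples)
    for _ in range(epochs):
        rng.shuffle(data)                  # "stochastic": visit samples in random order
        for x, y_actual in data:
            y_pred = w * x + b             # forward pass: make a prediction
            error = y_pred - y_actual      # compare expected vs. actual result
            # Backward pass (chain rule) for the loss 0.5 * error**2.
            grad_w = error * x
            grad_b = error
            # Gradient descent step: move against the gradient (steepest descent).
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b


# Made-up, noise-free data generated from y = 2x + 1.
samples = [(i / 10.0, 2.0 * (i / 10.0) + 1.0) for i in range(-10, 11)]
w, b = train_sgd(samples)
print(f"learned w={w:.3f}, b={b:.3f}")
```

Each pass through the inner loop is a tiny version of the learning flywheel: try something, measure how wrong it was, and nudge the next attempt in the direction that reduces the error.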

See my blog “Using a Bathroom Faucet to Teach Neural Network Basic Concepts” to learn more about the Neural Network concepts of Stochastic Gradient Descent and Backpropagation.

Abundance Mentality Drives Innovation

Nurturing a culture of abundance and sharing is one of the keys to driving innovation.

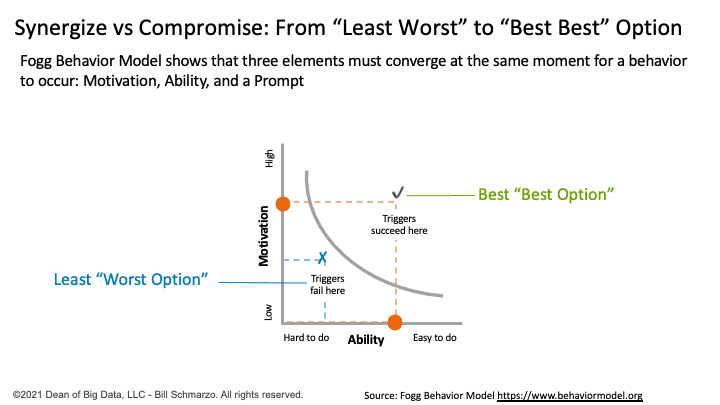

Trying to align the diverse perspectives across the organization around a critical organizational decision is mind-numbing. Many organizations operate with a scarcity mentality that deploys a “wear ’em down” decision-making approach that leads to compromise and the “Least Worst” option that offends the fewest “important” stakeholders. This “lowest common denominator” approach leads to sub-optimal decisions from the perspective of what’s important to your customers and your organization. That’s like having Steph Curry on your basketball team but forcing everyone on the team to play at the level of Pete Chilcutt (sorry man). “Lowest common denominator” decision-making makes no one happy, makes no organization stronger, and does nothing for the target customers.

On the other hand, organizations that embrace an abundance mentality – a mentality that everyone can win – can seek to identify and blend the diverse set of personnel “assets” across the organization in a way that yields the “Best Best” Option. An abundance mentality drives the process of identifying and understanding everyone’s assets (e.g., skill sets, experiences, methods, tools, relationships), and then blending, bending, and synergizing those individual assets in a way that fuels the innovation that leads to the “Best Best” option (Figure 3).

Figure 3: Synergizing vs Compromising: From “Least Worst” to “Best Best” Options

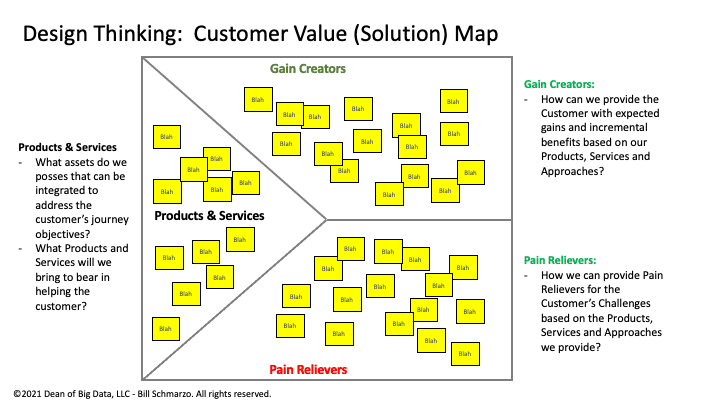

An abundance mentality fuels the synergizing mindset by leveraging Design Thinking techniques, tools, and mindset to bring together and blend different, sometimes conflicting, perspectives in an effort to achieve the “Best Best” option by:

- Seeking to clearly understand the targeted customer challenge using Stakeholder Persona Profiles and Customer Journey Maps.

- Triaging the customer’s journey, including jobs-to-be-done (and the KPIs against which job effectiveness is measured), gains (benefits), and pains (impediments).

- Identifying the broad range of organizational “assets” (e.g., skill sets, experiences, methods, tools, relationships) that exist across the organization.

- Finally, envisioning and ideating how to integrate and blend the different organizational “assets” to create the “Best Best” option in support of the customer’s jobs-to-be-done, gains, and pains (Figure 4).

Figure 4: Design Thinking: Customer Value (Solution) Map

Adopting an Abundance Mentality Summary

Economies of learning are the ability to rapidly learn, apply those learnings, and continuously learn and adapt from the application of those learnings.

The economics of data and analytics is built around the concept of sharing, reusing, and continuously refining. That is, the more that we share and reuse, the more valuable these digital assets become.

“Economies of learning” organizations are better prepared for success in dynamic markets than “economies of scale” organizations that build large, cumbersome moats, based on yesterday’s learning, to try to block out new business models and tactics.

At least that’s what the French learned at the Maginot Line…