The intelligence in AI is computational intelligence, and a better term might be Automated Intelligence. But when it comes to good judgment, AI is not smarter than the human brain that designed it. Many automated systems perform so poorly that you may wonder whether AI stands for Artificial Innumeracy.

Critical systems, such as automated piloting or running a power plant, usually do well with AI and automation, because considerable testing is done before they are deployed. But for many mundane tasks, such as spam detection, chatbots, spell checking, detecting duplicate or fake accounts on social networks, detecting fake reviews or hate speech on social networks, search engine technology (Google), or AI-based advertising, much progress remains to be made. It works just like the drunken robot shown below.

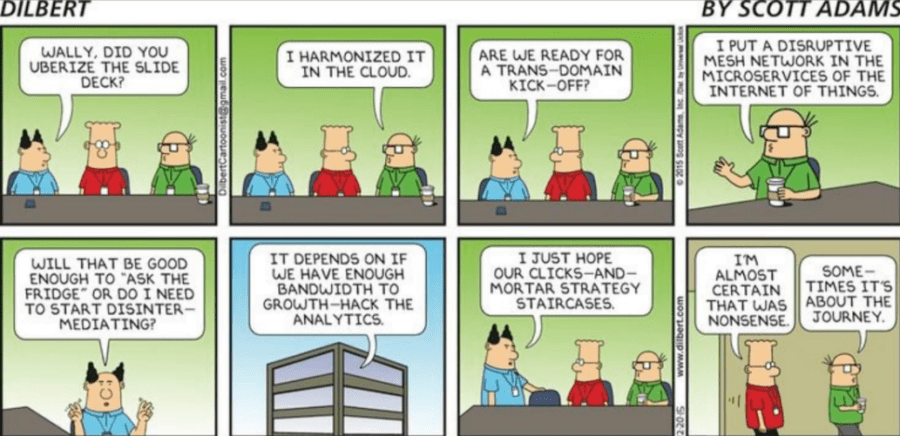

Why can driverless cars recognize a street sign, while Facebook algorithms cannot tell whether a picture contains text? Why can’t the Alexa robot understand the command “Close the lights” when it understands “Turn off the lights”? Sometimes the limitation of AI simply reflects the lack of knowledge of the people who implement these solutions: they might not know much about the business operations and products, and are sometimes glorified coders. In some cases, the systems are so poorly designed that they can be used in unintended, harmful ways. For instance, some Google algorithms automatically detect websites that use tricks to get listed at the top of search results pages. These algorithms will block you if you use such tricks, but you can also apply the same tricks on behalf of your competitors to get them blocked, defeating the purpose of the algorithm.
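To make the “Close the lights” example concrete, here is a minimal Python sketch of a rigid, phrase-lookup command parser. It is purely hypothetical: `INTENTS` and `parse_command` are illustrative names, and this is not a claim about how Alexa is actually built. It only shows how a system that recognizes a fixed list of phrasings rejects a paraphrase its designers did not anticipate.

```python
from typing import Optional

# Hypothetical, deliberately brittle parser: each intent is keyed by exact
# phrases, so any wording missing from this table is rejected.
INTENTS = {
    "turn off the lights": "lights_off",
    "switch off the lights": "lights_off",
    "turn on the lights": "lights_on",
}


def parse_command(utterance: str) -> Optional[str]:
    """Return the intent for an utterance, or None if it is not recognized."""
    # Normalize case, trailing punctuation, and repeated spaces only;
    # no synonym handling, which is exactly the weakness being illustrated.
    normalized = " ".join(utterance.lower().strip(" .!?").split())
    return INTENTS.get(normalized)


if __name__ == "__main__":
    for phrase in ["Turn off the lights", "Close the lights"]:
        print(phrase, "->", parse_command(phrase))
```

Running it prints `lights_off` for the first phrase and `None` for the second. Adding “close the lights” to the table is trivial, but only once someone who knows how users actually phrase their requests notices the gap.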

Why is AI still failing on mundane tasks?

I don’t have an answer. But I think that tasks that are not critical to the survival of a business (such as spam detection) receive little attention from executives, and even employees working on these tasks may be tempted to avoid anything revolutionary and keep a low profile. Imagination is not encouraged beyond a limited level. The attitude is “if it ain’t broke, don’t fix it.”

For instance, if advertising dollars are misused by a poorly designed AI system (assuming the advertising budget is fixed), the negative impact on the business is limited. If, on the contrary, it is done well, the upside could be great. The fact is, for non-critical tasks, businesses are not willing to significantly change the routine, especially for projects whose ROI is deemed impossible to measure accurately. For tiny companies where the CEO is also a data scientist, things are very different, and the incentive to have high-performing AI (to beat the competition or reduce workload) is high.

AI has great potential, but its limitations become apparent in everyday tasks like spam detection, chatbots, and social media moderation.

While critical systems such as automated piloting are well tested, mundane AI applications often struggle because of poor design or developers’ limited understanding of the business.

As AI evolves, addressing these flaws will be essential for improving reliability and trustworthiness in its use.