This is a nightmare! Tadayoshi Kohno, a professor in the Department of Computer Science and Engineering at the University of Washington, and his colleagues manipulated a STOP sign in a typical "graffiti" style so that it was recognized as a 45 mph speed limit sign by typical AI software, such as the kind built into a Tesla Model S. It's very likely that it will become a sport to send Tesla drivers to hell.

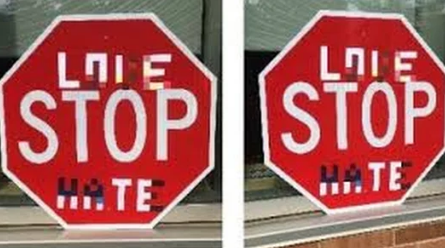

Compromised stop sign to confuse driver-less cars

Here is the abstract of this paper:

Deep neural network-based classifiers are known to be vulnerable to adversarial examples that can fool them into misclassifying their input through the addition of small-magnitude perturbations. However, recent studies have demonstrated that such adversarial examples are not very effective in the physical world – they either completely fail to cause misclassification or only work in restricted cases where a relatively complex image is perturbed and printed on paper. In this paper we propose a new attack algorithm – Robust Physical Perturbations (RP2) – that generates perturbations by taking images under different conditions into account. Our algorithm can create spatially-constrained perturbations that mimic vandalism or art to reduce the likelihood of detection by a casual observer. We show that adversarial examples generated by RP2 achieve high success rates under various conditions for real road sign recognition by using an evaluation methodology that captures physical world conditions. We physically realized and evaluated two attacks, one that causes a Stop sign to be misclassified as a Speed Limit sign in 100% of the testing conditions, and one that causes a Right Turn sign to be misclassified as either a Stop or Added Lane sign in 100% of the testing conditions.
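To make the two ideas in the abstract a bit more concrete (one perturbation optimized over images taken under different conditions, and a perturbation confined to a small region of the sign), here is a minimal toy sketch in Python. It is not the authors' RP2 implementation: the linear softmax "classifier", the random 64-pixel "sign", the brightness levels, the mask, and every name in the code (`masked_perturbation`, `lam`, and so on) are assumptions made purely for illustration.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # stabilise the exponentials
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def masked_perturbation(W, images, mask, target, steps=500, lr=0.5, lam=1e-3):
    """Toy sketch (hypothetical helper, not the paper's code).

    Finds ONE perturbation, non-zero only where mask == 1, that pushes every
    row of `images` toward class `target` under a linear softmax classifier
    with logits = x @ W. The loss is the cross-entropy averaged over all
    images plus a small L2 penalty on the perturbation.
    """
    onehot = np.eye(W.shape[1])[target]
    delta = np.zeros(images.shape[1])
    for _ in range(steps):
        probs = softmax((images + mask * delta) @ W)
        # Gradient of the cross-entropy w.r.t. each input: (p - y) W^T
        grad_x = (probs - onehot) @ W.T
        # Average over conditions, keep only the masked region
        grad = mask * (grad_x.mean(axis=0) + lam * delta)
        delta -= lr * grad
    return mask * delta

rng = np.random.default_rng(0)
n_pixels, n_classes = 64, 3

# Assumed stand-ins: a random linear classifier and a random "sign" image
W = rng.normal(scale=0.1, size=(n_pixels, n_classes))
base = rng.uniform(0.0, 1.0, size=n_pixels)

# The same sign under five assumed conditions (brightness plus sensor noise)
brightness = np.linspace(0.6, 1.0, 5)[:, None]
noise = rng.normal(scale=0.02, size=(5, n_pixels))
images = np.clip(brightness * base + noise, 0.0, 1.0)

# Spatial constraint: only the first 16 "pixels" may be changed
mask = np.zeros(n_pixels)
mask[:16] = 1.0

clean_class = int(np.argmax(base @ W))
target = (clean_class + 1) % n_classes  # any class other than the clean one

delta = masked_perturbation(W, images, mask, target)
# Simplification: perturbed pixel values are not clipped back to [0, 1]
preds = np.argmax((images + mask * delta) @ W, axis=1)
print("clean class:", clean_class, "target:", target, "predictions:", preds)
```

Averaging the gradient over all conditions is what keeps the single perturbation from overfitting to one particular view, and multiplying by the mask is what keeps it looking like a local sticker or graffiti patch rather than noise spread over the whole sign. A real attack on a deep network would need automatic differentiation and physically realistic transformations, which this sketch leaves out.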
