New & Notable

The hidden cost of over-instrumentation: Why more tracking can hurt product teams

Lenard Lim | June 17, 2025 at 10:22 am

Recently Added

The hidden cost of over-instrumentation: Why more tracking can hurt product teams

Lenard Lim | June 17, 2025 at 10:22 am
Stop tracking everything: Rethink your data strategy. If you’ve ever opened a product analytics dashboard and scrolled ...

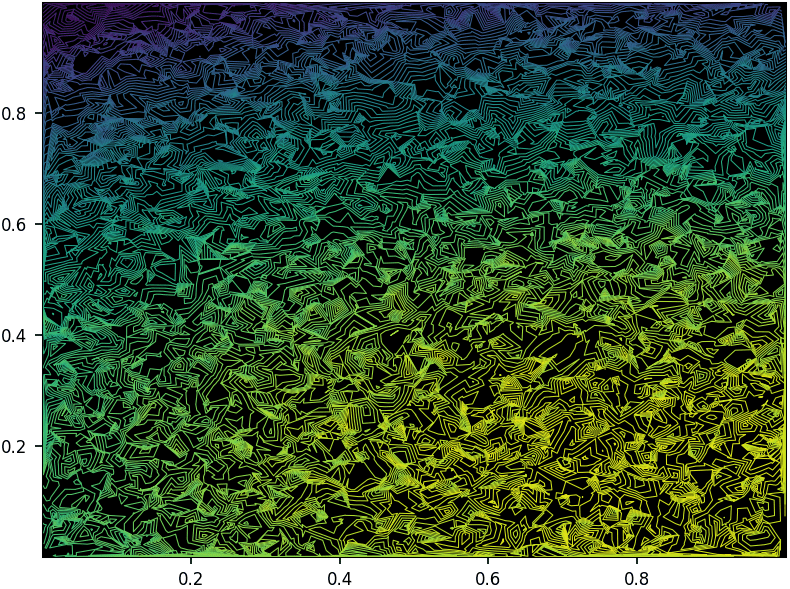

How to Build and Optimize High-Performance Deep Neural Networks from Scratch

Vincent Granville | June 13, 2025 at 5:06 pm
With explainable AI, intuitive parameters easy to fine-tune, versatile, robust, fast to train, without any library other...

Unlocking the value of AI with DevOps accelerated MLOps

Janne Saarela | June 11, 2025 at 3:27 pm
Since the early 2000s, the advent of digital transformation has compelled businesses across industries to reimagine thei...

Revamping enterprise content management with language models

Jelani Harper | June 9, 2025 at 11:23 am
The relatively recent capacity for front-end users to interface with backend systems, documents, and other content via n...

Decentralized ML: Developing federated AI without a central cloud

Tosin Clement | June 3, 2025 at 9:49 am
Introduction – Breaking the cloud barrier. Cloud computing has been the dominant paradigm of machine learning for y...

How to make AI work in QA

Saqib Jan | June 2, 2025 at 11:23 am
AI has radically changed Quality Assurance, breaking old inefficient ways of test automation, promising huge leaps in sp...

How enterprises can secure their communications

Gaurav Belani | May 30, 2025 at 3:31 pm
This post breaks down the biggest threats to enterprise communication and succinctly walks you through the strategies th...

AI skills for the modern workplace: A guide for knowledge workers

Dan Lawyer | May 27, 2025 at 11:03 am
While AI is being adopted across organizations, knowledge gaps still exist in using it mindfully and effectively in ever...

Stay ahead of the sales curve with AI-assisted Salesforce integration

Anas Baig | May 19, 2025 at 4:52 pm
Any organization with Salesforce in its SaaS sprawl must find a way to integrate it with other systems. For some, this i...

Generative AI in software development: Does faster code come at the cost of quality?

Devansh Bansal | May 16, 2025 at 4:39 pm
From creating comprehensive essays to writing intriguing fiction, there’s hardly anything untouched by the impact of g...

New Videos

A/B Testing Pitfalls – Interview w/ Sumit Gupta @ Notion

Interview w/ Sumit Gupta – Business Intelligence Engineer at Notion. In our latest episode of the AI Think Tank Podcast, I had the pleasure of sitting…

Davos World Economic Forum Annual Meeting Highlights 2025

Interview w/ Egle B. Thomas. Each January, the serene snow-covered landscapes of Davos, Switzerland, transform into a global epicenter for dialogue on economics, technology, and…

A vision for the future of AI: Guest appearance on Think Future 1039

As someone who has spent years navigating the exciting and unpredictable currents of innovation, I recently had the privilege of joining Chris Kalaboukis on his show, Think Future.…