This article was written by Prashant Gupta. Gupta is a machine learning engineer, Android developer, and tech enthusiast.

Tired of getting low accuracy on your machine learning models? Boosting is here to help. Boosting is a popular machine learning technique that increases the accuracy of your model, much as racers use a nitrous boost to increase the speed of their cars.

Boosting uses a base machine learning algorithm to fit the data. This can be any algorithm, but Decision Trees are the most widely used. To see why, just keep reading. Boosting is also most easily explained using Decision Trees, which will be the focus of this article. The article builds up to boosting by first covering other approaches that improve the accuracy of Decision Trees.

Bootstrapping:

I would like to start by explaining an important foundational technique called Bootstrapping. Assume that we need to learn a decision tree to predict the price of a house based on 100 inputs. The prediction accuracy of such a decision tree would be low, because decision trees suffer from high variance. This means that if we split the training data into two parts at random and fit a decision tree to each half, the results could be quite different. What we really want is a result that has low variance if applied repeatedly to distinct data sets.

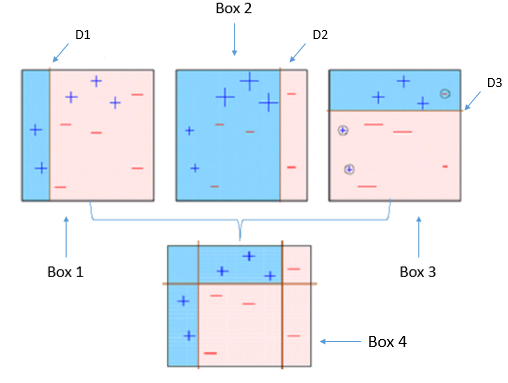

We can improve the prediction accuracy of Decision Trees using Bootstrapping:

- Create many (e.g. 100) random sub-samples of our dataset with replacement (meaning we can select the same value multiple times).

- Learn (train) a decision tree on each sample.

- Given new data, calculate the prediction from each tree.

- Calculate the average of all of the collected predictions (also called bootstrap estimates) and use that as the estimated prediction for the data.
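The steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation: the base learner `fit_stump` is a hypothetical one-split tree on a single feature, standing in for a full decision tree, and the house data (`sizes`, `prices`) is made up.

```python
import random
import statistics

def fit_stump(xs, ys):
    """Fit a one-split regression tree on a single feature.

    Returns (threshold, left_mean, right_mean) minimizing the residual sum
    of squares. A deliberately tiny stand-in for a deep decision tree.
    """
    best = None
    for t in sorted(set(xs))[1:]:  # every value except the smallest is a candidate
        left = [y for x, y in zip(xs, ys) if x < t]
        right = [y for x, y in zip(xs, ys) if x >= t]
        lm, rm = statistics.fmean(left), statistics.fmean(right)
        rss = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or rss < best[0]:
            best = (rss, t, lm, rm)
    if best is None:  # all x values identical: predict the mean everywhere
        mean = statistics.fmean(ys)
        return (xs[0], mean, mean)
    return best[1:]

def bagged_predict(xs, ys, x_new, n_trees=100, seed=0):
    """Average the predictions of n_trees stumps, each fit on a bootstrap sample."""
    rng = random.Random(seed)
    n = len(xs)
    predictions = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # draw n indices with replacement
        t, lm, rm = fit_stump([xs[i] for i in idx], [ys[i] for i in idx])
        predictions.append(lm if x_new < t else rm)
    return statistics.fmean(predictions)

# Made-up data: house size in square metres -> price, with noise.
data_rng = random.Random(1)
sizes = [float(s) for s in range(50, 150)]
prices = [1000.0 * s + data_rng.gauss(0, 5000) for s in sizes]
print(bagged_predict(sizes, prices, x_new=120.0))
```

Because each stump sees a different resample, its threshold and leaf means shift slightly. The averaged prediction is smoother than any single stump's step function.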

The procedure can be used in a similar way for classification trees. For example, if we had 5 decision trees that made the following class predictions for an input sample: blue, blue, red, blue and red, we would take the most frequent class and predict blue.
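The vote in this example can be written as a short helper. The function name `majority_vote` is only illustrative.

```python
from collections import Counter

def majority_vote(predictions):
    """Return the most frequent class label among the trees' predictions."""
    return Counter(predictions).most_common(1)[0][0]

votes = ["blue", "blue", "red", "blue", "red"]
print(majority_vote(votes))  # blue
```

With an even number of trees a tie is possible. In this sketch a tie goes to whichever label appeared first, so an odd number of trees is a simple way to avoid ties in two-class problems.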

In this approach, trees are grown deep and are not pruned. Thus each individual tree has high variance, but low bias. Averaging these trees reduces the variance dramatically.

Bootstrapping is a powerful statistical method for estimating a quantity from a data sample. The quantity can be a descriptive statistic such as a mean or a standard deviation. The application of the Bootstrapping procedure to a high-variance machine learning algorithm, typically decision trees as shown in the above example, is known as Bagging (or bootstrap aggregating).
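As a rough illustration of bootstrapping in its original statistical sense, the sketch below resamples a small made-up data set with replacement and recomputes the mean each time. The spread of those bootstrap estimates indicates how uncertain the sample mean is. The helper name `bootstrap_estimates` and the numbers in `data` are assumptions for the example.

```python
import random
import statistics

def bootstrap_estimates(sample, statistic, n_resamples=1000, seed=0):
    """Recompute `statistic` on n_resamples resamples drawn with replacement."""
    rng = random.Random(seed)
    n = len(sample)
    return [statistic(rng.choices(sample, k=n)) for _ in range(n_resamples)]

data = [12, 15, 9, 20, 14, 11, 18, 16, 13, 17]  # made-up observations
estimates = bootstrap_estimates(data, statistics.fmean)

print("sample mean:", statistics.fmean(data))
print("mean of bootstrap estimates:", statistics.fmean(estimates))
print("bootstrap standard error:", statistics.stdev(estimates))
```

Swapping `statistics.fmean` for `statistics.stdev` or `statistics.median` estimates the uncertainty of those quantities in the same way.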

Error Estimation:

An easy way to estimate the test error of a bagged model without the need for cross-validation is Out-of-Bag Error Estimation. The observations not used to fit a given bagged tree are referred to as the out-of-bag (OOB) observations. We can predict the response for the ith observation using each of the trees in which that observation was OOB. We then average those predicted responses or take a majority vote, depending on whether the response is quantitative or qualitative. Repeating this for every observation gives an overall OOB MSE (mean squared error) or classification error rate. This is a valid estimate of the test error because each prediction is based only on the trees that were not fit using that observation.
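A minimal sketch of the OOB procedure for regression follows. It assumes a hypothetical one-split tree (`fit_stump`) as the base learner and made-up one-feature data. With a real tree learner only the fitting and prediction lines would change.

```python
import random
import statistics

def fit_stump(xs, ys):
    """One-split regression tree on one feature: (threshold, left_mean, right_mean)."""
    best = None
    for t in sorted(set(xs))[1:]:
        left = [y for x, y in zip(xs, ys) if x < t]
        right = [y for x, y in zip(xs, ys) if x >= t]
        lm, rm = statistics.fmean(left), statistics.fmean(right)
        rss = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or rss < best[0]:
            best = (rss, t, lm, rm)
    if best is None:  # degenerate resample with a single distinct x
        mean = statistics.fmean(ys)
        return (xs[0], mean, mean)
    return best[1:]

def oob_mse(xs, ys, n_trees=200, seed=0):
    """Estimate test MSE using, for each observation, only trees that never saw it."""
    rng = random.Random(seed)
    n = len(xs)
    oob_preds = [[] for _ in range(n)]
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap sample
        in_bag = set(idx)
        t, lm, rm = fit_stump([xs[i] for i in idx], [ys[i] for i in idx])
        for i in range(n):
            if i not in in_bag:  # observation i is out-of-bag for this tree
                oob_preds[i].append(lm if xs[i] < t else rm)
    # Average the OOB predictions per observation, then average the squared errors.
    # Observations that were never OOB (very unlikely with many trees) are skipped.
    errors = [(statistics.fmean(p) - ys[i]) ** 2 for i, p in enumerate(oob_preds) if p]
    return statistics.fmean(errors)

# Made-up data: a noisy linear trend.
data_rng = random.Random(3)
xs = [float(i) for i in range(40)]
ys = [2.0 * x + data_rng.gauss(0, 3) for x in xs]
print(oob_mse(xs, ys))
```

Each bootstrap sample leaves out roughly a third of the observations, so every observation collects OOB predictions from a sizeable share of the trees.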

Random Forests:

Decision trees greedily minimize a cost function, which means they use the strongest predictors for splitting the branches. As a result, most of the trees grown from bootstrapped samples use the same strong predictor in their top splits. This makes the trees highly correlated, and averaging correlated trees reduces variance far less than averaging uncorrelated ones.
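A standard identity, added here as supporting reasoning rather than taken from the original article, shows why correlation between trees matters. Assume each of B trees produces a prediction T_b with variance σ², and any two trees have correlation ρ (these symbols are introduced only for this illustration). The variance of the averaged prediction is then:

$$
\operatorname{Var}\left(\frac{1}{B}\sum_{b=1}^{B} T_b\right) = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
$$

The second term shrinks as more trees are added, but the first does not. However many trees we average, the variance cannot fall below ρσ², so lowering the correlation between trees is the only way to reduce it further.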

We can improve the prediction accuracy of Bagged Trees using Random Forests:

While splitting the branches of any tree, a random sample of m predictors is chosen as split candidates from the full set of p predictors. The split is then allowed to use only one of those m predictors. A fresh sample of m predictors is taken at each split. You can try different values of m and tune it using cross-validation.

- For classification a good default is: m = sqrt(p)

- For regression a good default is: m = p/3

Thus, on average, (p - m) / p of the splits will not even consider the strong predictor. This is known as decorrelating the trees, as it fixes the issue of every tree using the same strong predictor.

If m = p, then a random forest is equivalent to bagging.
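The defaults and the (p - m) / p claim can be checked with a small simulation. This sketch makes two assumptions: the function names are illustrative, and the rounding of sqrt(p) and p/3 to whole numbers is a choice made here, not something the article specifies.

```python
import math
import random

def n_split_candidates(p, task):
    """Default number of predictors to sample at each split (rounding is an assumption)."""
    if task == "classification":
        return max(1, round(math.sqrt(p)))
    return max(1, p // 3)

def fraction_without_strong(p, m, n_splits=10000, seed=0):
    """Simulate n_splits splits; return the share whose m candidates exclude predictor 0."""
    rng = random.Random(seed)
    strong = 0  # treat predictor 0 as the single strong predictor
    misses = sum(strong not in rng.sample(range(p), m) for _ in range(n_splits))
    return misses / n_splits

p = 9
m = n_split_candidates(p, "classification")  # sqrt(9) = 3
print("simulated:", fraction_without_strong(p, m))
print("expected (p - m) / p:", (p - m) / p)
```

Setting `m = p` makes the simulated fraction exactly zero: every split may use the strong predictor, which is the bagging case.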

Feature Importance:

One problem with bagging many fully grown trees is that we cannot easily interpret the result, and it is no longer clear which variables are important to the relationship. Calculating the drop in the error function for a variable at each split point gives us an idea of feature importance: we record the total amount by which the error decreases due to splits over a given predictor, averaged over all bagged trees. A large value then indicates an important predictor. In regression problems this may be the drop in the residual sum of squares, and in classification it might be the drop in the Gini index.
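The bookkeeping described above can be sketched for regression as follows. To keep the example short, each bagged "tree" is a single best split, a hypothetical simplification; a real tree would add up the drops from all of its splits. The data is made up so that the first feature drives the response and the second is pure noise.

```python
import random
import statistics

def rss(ys):
    """Residual sum of squares around the mean (0 for an empty list)."""
    if not ys:
        return 0.0
    mean = statistics.fmean(ys)
    return sum((y - mean) ** 2 for y in ys)

def best_split(rows, ys):
    """Return (feature_index, rss_drop) for the single best split, or (None, 0.0)."""
    parent = rss(ys)
    best = (None, 0.0)
    for j in range(len(rows[0])):
        for t in sorted({r[j] for r in rows})[1:]:
            left = [y for r, y in zip(rows, ys) if r[j] < t]
            right = [y for r, y in zip(rows, ys) if r[j] >= t]
            drop = parent - rss(left) - rss(right)
            if drop > best[1]:
                best = (j, drop)
    return best

def bagged_importance(rows, ys, n_trees=50, seed=0):
    """Total RSS drop credited to each feature, averaged over bootstrap samples."""
    rng = random.Random(seed)
    n, p = len(rows), len(rows[0])
    totals = [0.0] * p
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap sample
        j, drop = best_split([rows[i] for i in idx], [ys[i] for i in idx])
        if j is not None:
            totals[j] += drop
    return [total / n_trees for total in totals]

# Made-up data: feature 0 determines y, feature 1 is noise.
data_rng = random.Random(2)
rows = [(data_rng.random(), data_rng.random()) for _ in range(30)]
ys = [10.0 * r[0] + data_rng.gauss(0, 0.5) for r in rows]
importance = bagged_importance(rows, ys)
print(importance)
```

The first entry should come out much larger than the second, matching the idea that a large averaged drop marks an important predictor.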

