Imagine an advanced fighter aircraft patrolling a hostile conflict area when a bogie suddenly appears on radar, accelerating aggressively toward it. The pilot, with the assistance of an Artificial Intelligence co-pilot, has a fraction of a second to decide what action to take – ignore, avoid, flee, bluff, or attack. The costs associated with a False Positive or a False Negative are substantial – a wrong decision could provoke a war or lead to the death of the pilot. What is one to do…and why?

No less than the Defense Advanced Research Projects Agency (DARPA) and the Department of Defense (DoD) are interested not only in applying AI to decide what to do in hostile, unstable and rapidly devolving environments, but also in understanding why an AI model recommended a particular action.

Welcome to the world of Explainable Artificial Intelligence (XAI).

A McKinsey Quarterly article “What AI Can And Can’t Do (Yet) For Your Business” highlights the importance of XAI in achieving AI mass adoption:

“Explainability is not a new issue for AI systems. But it has grown along with the success and adoption of deep learning, which has given rise both to more diverse and advanced applications and to more opaqueness. Larger and more complex models make it hard to explain, in human terms, why a certain decision was reached (and even harder when it was reached in real time). This is one reason that adoption of some AI tools remains low in application areas where explainability is useful or indeed required. Furthermore, as the application of AI expands, regulatory requirements could also drive the need for more explainable AI models.”

Being able to quantify the predictive contribution of a particular variable to the effectiveness of an analytic model is critically important for the following reasons:

- XAI is a legal mandate in regulated verticals such as banking, insurance, telecommunications and others. XAI is critical in ensuring that there are no age, gender, race, disability or sexual orientation biases in decisions governed by regulations such as the European General Data Protection Regulation (GDPR) and the Fair Credit Reporting Act (FCRA).

- XAI is critical for physicians, engineers, technicians, physicists, chemists, scientists and other specialists whose work is governed by the exactness of the model’s results, and who simply must understand and trust the models and modeling results.

- For AI to take hold in healthcare, it has to be explainable. This black-box paradox is particularly problematic in healthcare, where the method used to reach a conclusion is vitally important. Responsible for making life and death decisions, physicians are unwilling to entrust those decisions to a black box.

Let’s drill into what DARPA is trying to achieve with their XAI initiative.

DARPA XAI Requirements

DARPA is an agency of the United States Department of Defense responsible for the development of emerging technologies for use by the military. DARPA is soliciting proposals to help address the XAI problem. Information about the research proposals and timeline can be found at “Explainable Artificial Intelligence (XAI)”.

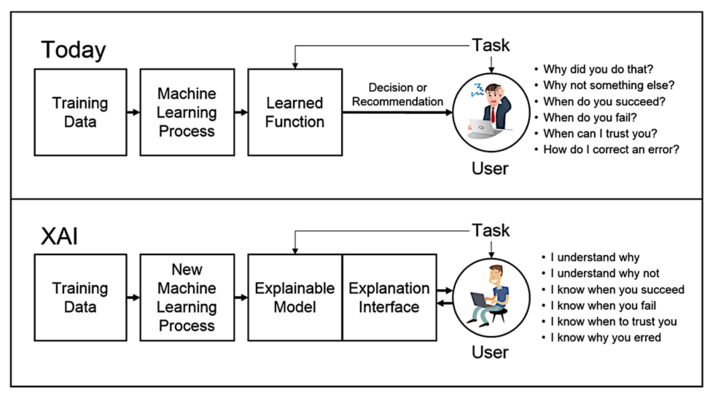

The target persona of XAI is an end user who depends on decisions, recommendations, or actions produced by an AI system, and therefore needs to understand the rationale for the system’s decisions. To accomplish this, DARPA is pushing the concepts of an “Explainable AI Model” and an “Explanation Interface” (see Figure 1).

Figure 1: Explainable Artificial Intelligence (XAI)

Figure 1 presents three XAI research and development challenges:

- How to produce more explainable models? For this, DARPA envisions developing a range of new or modified machine learning and deep learning algorithms to produce more explainable models versus today’s black box deep learning algorithms.

- How to design the explanation interface? DARPA anticipates integrating state-of-the-art human-computer interaction (HCI) techniques (e.g., visualization, language understanding, language generation, and dialog management) with new principles, strategies, and techniques to generate more effective and human understandable explanations.

- How to understand the psychological requirements for effective explanations? DARPA plans to summarize, extend, and apply current psychological theories of explanation to assist both the XAI developers and evaluators.

These are bold but critically-important developments if we hope to foster public confidence in the use of AI in our daily lives (e.g., autonomous vehicles, personalized medicine, robotic machines, intelligent devices, precision agriculture, smart buildings and cities).

How Deep Learning Works

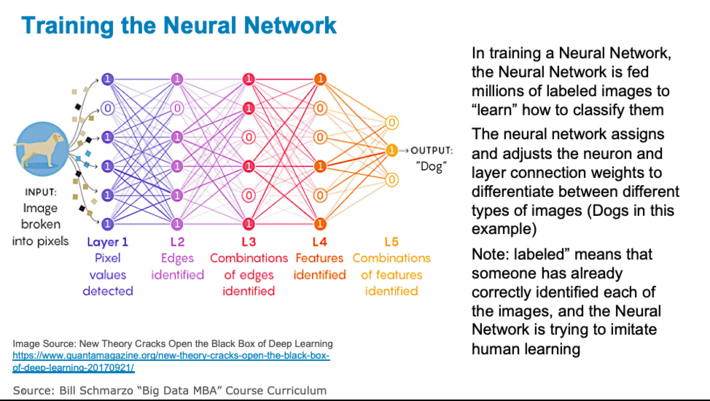

Deep learning systems are “trained” by analyzing massive amounts of labeled data to learn how to classify that data. The resulting models are then used to predict the probability of matches in new data sets (cats, dogs, handwriting, pedestrians, tumors, cancer, plant diseases). Deep Learning goes through three stages to create its classification models.

Step 1: Training. A Neural Network is trained by feeding thousands, if not millions, of labeled images into the Neural Network in order to create the weights and biases across the different neurons and connections between layers of neurons that are used to classify the images (see Figure 2).

Figure 2: Training the Neural Network

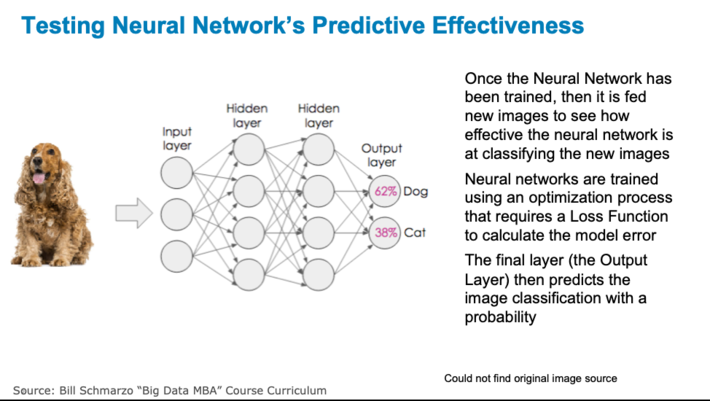

Step 2: Testing. The Neural Network’s predictive effectiveness is tested by feeding new images into the Neural Network to see how effective it is at classifying the new images (see Figure 3).

Figure 3: Testing Neural Network

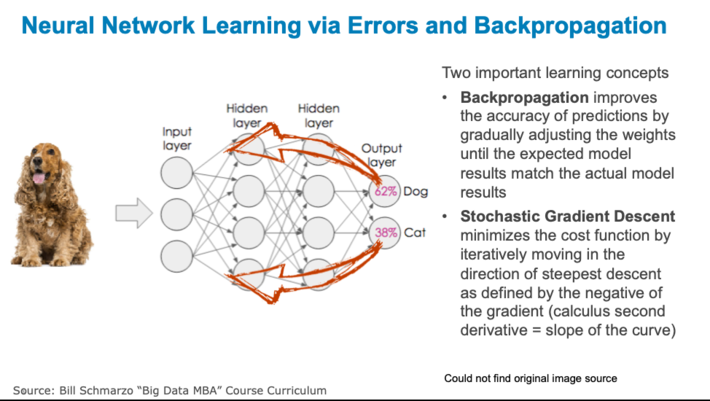

Step 3: Learning. Learning or refining of the Neural Network model is driven by rippling learnings from the model errors in order to minimize the square of errors between expected versus actual results.

Figure 4: Learning and Refining the Model

As I covered in the blog “Neural Networks: Is Meta-learning the New Black?,” a neural network “learns” from the square of its errors using two important concepts:

- Backpropagation is a mathematically-based tool for improving the accuracy of predictions of neural networks by gradually adjusting the weights until the expected model results match the actual model results. Backpropagation solves the problem of finding the best weights to deliver the best expected results.

- Stochastic Gradient Descent is a mathematically-based optimization algorithm (think first derivative in calculus) used to minimize some cost function by iteratively moving in the direction of steepest descent as defined by the negative of the gradient (slope). Gradient descent guides the updates being made to the weights of our neural network model by pushing the errors from the model’s results back into the weights.
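To make the "moving in the direction of steepest descent" idea concrete, here is a minimal sketch of plain gradient descent on a single weight. The cost function, the function names (`gradient_descent`, `cost_gradient`) and the learning rate are all assumptions chosen for illustration. The stochastic variant differs only in that the gradient is estimated from a random sample of the training data at each step rather than computed exactly.

```python
def gradient_descent(grad, w0, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient (first derivative) of a cost function."""
    w = w0
    for _ in range(steps):
        # Move in the direction of steepest descent: the negative of the gradient.
        w = w - learning_rate * grad(w)
    return w


# Hypothetical cost function C(w) = (w - 3)^2, which is smallest at w = 3.
# Its gradient (slope) is dC/dw = 2 * (w - 3).
def cost_gradient(w):
    return 2.0 * (w - 3.0)


w_final = gradient_descent(cost_gradient, w0=0.0)
print(round(w_final, 4))
```

Each step shrinks the distance to the minimum by a constant factor here, so the weight settles very close to 3. In a real network the same update is applied to every weight and bias at once.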

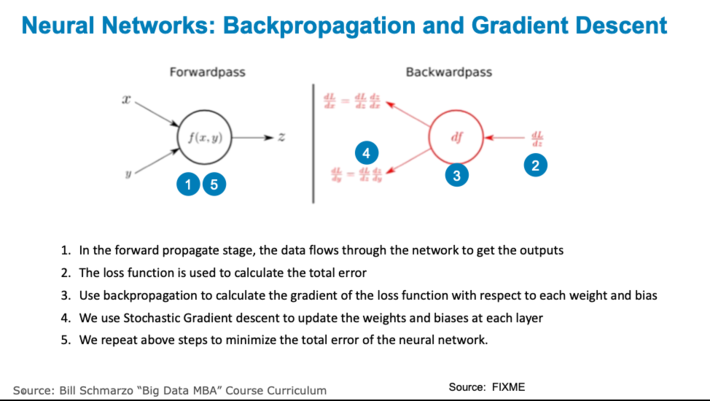

In summary, we are propagating the total error backward through the connections in the network layer by layer, calculating the contribution (gradient) of each weight and bias to the total error in every layer, and then using the gradient descent algorithm to adjust the weights and biases so that the total error of the neural network is eventually minimized (see Figure 5).

Figure 5: Neural Networks: Backpropagation and Gradient Descent
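The train, test and learn loop described above can be sketched in a few lines of NumPy. This is an illustrative toy, not a production recipe: the synthetic two-feature data set, the network size, the learning rate and the helper names (`forward`, `train`) are all assumptions, and for simplicity it uses full-batch gradient descent rather than the stochastic variant.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def forward(X, params):
    """Forward pass: one tanh hidden layer followed by a sigmoid output."""
    W1, b1, W2, b2 = params
    h = np.tanh(X @ W1 + b1)
    p = sigmoid(h @ W2 + b2)
    return h, p


def train(X, y, hidden=4, learning_rate=1.0, epochs=1000, seed=0):
    rng = np.random.default_rng(seed)
    W1 = rng.normal(scale=0.5, size=(X.shape[1], hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(scale=0.5, size=(hidden, 1))
    b2 = np.zeros(1)
    n = X.shape[0]
    losses = []
    for _ in range(epochs):
        h, p = forward(X, (W1, b1, W2, b2))
        losses.append(float(np.mean((p - y) ** 2)))  # mean squared error

        # Backpropagation: push the error backward, layer by layer.
        d_p = 2.0 * (p - y) / n           # gradient of the loss w.r.t. the output
        d_z2 = d_p * p * (1.0 - p)        # through the sigmoid
        d_W2 = h.T @ d_z2
        d_b2 = d_z2.sum(axis=0)
        d_h = d_z2 @ W2.T                 # error passed back to the hidden layer
        d_z1 = d_h * (1.0 - h ** 2)       # through the tanh
        d_W1 = X.T @ d_z1
        d_b1 = d_z1.sum(axis=0)

        # Gradient descent: step each weight and bias against its gradient.
        W1 -= learning_rate * d_W1
        b1 -= learning_rate * d_b1
        W2 -= learning_rate * d_W2
        b2 -= learning_rate * d_b2
    return (W1, b1, W2, b2), losses


# Synthetic labeled data (an assumption): label is 1 when x0 + x1 > 0.
rng = np.random.default_rng(42)
X = rng.normal(size=(200, 2))
y = (X[:, 0] + X[:, 1] > 0).astype(float).reshape(-1, 1)
X_train, y_train = X[:150], y[:150]   # Step 1: training data
X_test, y_test = X[150:], y[150:]     # Step 2: held-out data for testing

params, losses = train(X_train, y_train)
_, p_test = forward(X_test, params)
test_accuracy = float(np.mean((p_test > 0.5) == (y_test == 1.0)))

print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
print(f"test accuracy: {test_accuracy:.2f}")
```

Notice that every weight in `params` can be printed and inspected after training, yet none of them says in plain language why a given point was classified the way it was. That gap is what XAI is trying to close.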

As powerful as Neural Networks are in analyzing and classifying data sets, the problem with a Neural Network in a world of XAI is that its many learned weights, although known, do not map to human-understandable variables, so it is very difficult to explain why and how a deep learning model came up with its predictions.

As noted in the opening scenario, there are many situations where the costs of model failure – False Positives and False Negatives – can be significant, maybe even disastrous. Consequently, organizations must invest the time and effort to thoroughly detail the consequences of False Positives and False Negatives. See the blog “Using Confusion Matrices to Quantify the Cost of Being Wrong” for more details on understanding and quantifying the costs of False Positives and False Negatives.
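As a rough illustration of what "quantifying the cost of being wrong" can look like, the sketch below weights the False Positive and False Negative counts from a confusion matrix by a cost per error. All of the counts and dollar costs are made-up numbers, and `cost_per_decision` is a hypothetical helper, not something taken from the referenced blog.

```python
def cost_per_decision(tp, fp, fn, tn, cost_fp, cost_fn):
    """Average cost of errors per decision, given confusion-matrix counts."""
    total = tp + fp + fn + tn
    return (fp * cost_fp + fn * cost_fn) / total


def accuracy(tp, fp, fn, tn):
    return (tp + tn) / (tp + fp + fn + tn)


# Hypothetical costs: a False Negative is ten times as expensive as a False Positive.
COST_FP = 100.0
COST_FN = 1000.0

# Two hypothetical models scored on the same 1,000 cases.
model_a = dict(tp=80, fp=10, fn=5, tn=905)
model_b = dict(tp=83, fp=30, fn=2, tn=885)

cost_a = cost_per_decision(**model_a, cost_fp=COST_FP, cost_fn=COST_FN)
cost_b = cost_per_decision(**model_b, cost_fp=COST_FP, cost_fn=COST_FN)

print(accuracy(**model_a), cost_a)  # cost_a is 6.0
print(accuracy(**model_b), cost_b)  # cost_b is 5.0
```

In this made-up case Model B is less accurate than Model A but cheaper to be wrong with, because it trades a few extra False Positives for fewer of the far more expensive False Negatives.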

USF Data Attribution Research Project

Building upon our industry-leading research at the University of San Francisco on the Economic Value of Data, we are exploring a mathematical formula that can calculate how much of an analytic model’s predictive results can be attributed to any one individual variable. This research not only helps to further advance the XAI thinking, but also provides invaluable guidance to organizations that are trying to:

- Determine the economic or financial value of a particular data set, which can help prioritize IT and business investments in data management efforts (e.g., governance, quality, accuracy, completeness, granularity, metadata) and data acquisition strategies.

- Determine how the way an organization accounts for the value of its data impacts its balance sheet and market capitalization.
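To give a feel for what attributing predictive results to an individual variable can mean, here is a minimal sketch of one widely used, generic technique: permutation importance. To be clear, this is not the formula being explored in the USF research. It is a stand-in for illustration, and the synthetic data, the simple linear model and the helper names are all assumptions.

```python
import numpy as np


def fit_linear(X, y):
    """Least-squares linear model with an intercept (last coefficient)."""
    A = np.column_stack([X, np.ones(len(X))])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef


def predict(coef, X):
    return X @ coef[:-1] + coef[-1]


def permutation_importance(coef, X, y, repeats=10, seed=0):
    """Average increase in mean squared error when one column is shuffled."""
    rng = np.random.default_rng(seed)
    baseline = np.mean((predict(coef, X) - y) ** 2)
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        increases = []
        for _ in range(repeats):
            X_shuffled = X.copy()
            # Shuffling breaks the link between variable j and the outcome.
            X_shuffled[:, j] = rng.permutation(X[:, j])
            shuffled_error = np.mean((predict(coef, X_shuffled) - y) ** 2)
            increases.append(shuffled_error - baseline)
        importances[j] = np.mean(increases)
    return importances


# Synthetic data (an assumption): the outcome depends strongly on variable 0,
# weakly on variable 1, and not at all on variable 2.
rng = np.random.default_rng(1)
X = rng.normal(size=(500, 3))
y = 3.0 * X[:, 0] + 0.5 * X[:, 1] + rng.normal(scale=0.1, size=500)

coef = fit_linear(X, y)
importances = permutation_importance(coef, X, y)
print(np.round(importances, 3))
```

The more the model's error grows when a variable is scrambled, the more the model was relying on that variable. The same measurement can be applied to any model that makes predictions, including a neural network, because it never looks inside the model.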

Watch this space for more details on this research project.

I’m also overjoyed by the positive feedback that I have gotten on the release of my third book: “The Art of Thinking Like A Data Scientist”. This workbook is jammed with templates, worksheets, examples and hands-on exercises to help organizations become more effective at leveraging data and analytics to power their business models. I hope that you enjoy it as well.