The list below is a (non-comprehensive) selection of what I believe should be taught first in data science classes, based on 30 years of business experience. This is a follow-up to my article “Why logistic regression should be taught last.”

I am not sure whether the topics below are even discussed in data camps or college classes. One of the issues is the way teachers are recruited. The recruitment process favors individuals famous for their academic achievements or for their “star” status, and they tend to teach the same thing over and over, for decades. Successful professionals have little interest in becoming teachers (as the saying goes: if you can’t do it, you write about it; if you can’t write about it, you teach it).

It does not have to be that way. Plenty of qualified professionals, even though they are not stars, would be perfect teachers and are not necessarily motivated by money. They bring tremendous experience gained in the trenches and could be fantastic teachers, helping students deal with real data. And they do not need to be data scientists: many engineers are entirely capable of (and qualified for) providing great data science training.

Topics that should be taught very early on in a data science curriculum

I suggest the following:

- An overview of how algorithms work

- Different types of data and data issues (missing data, duplicated data, errors in data), together with exploring real-life sample data sets and constructively criticizing them

- How to identify useful metrics

- Lifecycle of data science projects

- Introduction to programming languages and fundamental command-line instructions (Unix commands such as grep, sort, uniq, and head; Unix pipes; and so on)

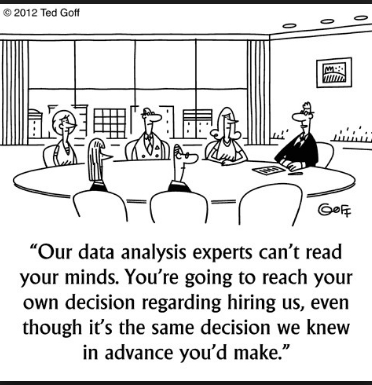

- Communicating results to non-experts and understanding requests from decision makers (translating requests into action items for the data scientist)

- Overview of popular techniques with pluses and minuses, and when to use them

- Case studies

- Identifying flawed studies
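To make the data-issues item above concrete, here is a minimal Python sketch of the kind of checks a first class could start with: counting missing values, spotting exact duplicate rows, and flagging impossible values. The records, field names, and the 0 to 120 age range are made up for illustration; on real data you would load a file and choose checks and thresholds that fit it.

```python
from collections import Counter

# Toy records, invented purely for illustration (not real data).
RECORDS = [
    {"id": 1, "age": 34, "city": "Seattle"},
    {"id": 2, "age": None, "city": "Boston"},
    {"id": 3, "age": -5, "city": "Boston"},    # impossible value: data error
    {"id": 2, "age": None, "city": "Boston"},  # exact duplicate of the second row
    {"id": 4, "age": 51, "city": ""},          # empty string: missing city
]


def missing_counts(records):
    """Count missing values (None or empty string) per field."""
    counts = Counter()
    for row in records:
        for field, value in row.items():
            if value is None or value == "":
                counts[field] += 1
    return dict(counts)


def duplicate_rows(records):
    """Return rows that appear more than once (compared on all fields)."""
    seen = Counter(tuple(sorted(row.items())) for row in records)
    return [dict(key) for key, n in seen.items() if n > 1]


def out_of_range(records, field, low, high):
    """Return ids of rows whose numeric field falls outside [low, high]."""
    return [
        row["id"]
        for row in records
        if isinstance(row.get(field), (int, float))
        and not (low <= row[field] <= high)
    ]


print(missing_counts(RECORDS))
print(duplicate_rows(RECORDS))
print(out_of_range(RECORDS, "age", 0, 120))
```

Each check is deliberately simple so students can discuss what it misses, for instance near-duplicates that differ only in spelling.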
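For the command-line item above, a classic exercise is a pipeline such as `grep -F ERROR app.log | sort | uniq -c | sort -rn | head -n 3`, which lists the three most frequent lines containing ERROR (app.log is a hypothetical file name). The Python sketch below mirrors each stage of that pipeline on a few invented log lines, which can help students see what each command contributes. It is an approximation, not a drop-in replacement: tie-breaking and output formatting differ from the real tools.

```python
from collections import Counter

# Hypothetical log lines standing in for the contents of a file such as app.log.
LOG_LINES = [
    "ERROR disk full",
    "INFO started",
    "ERROR timeout",
    "ERROR disk full",
    "WARN slow query",
    "ERROR timeout",
    "ERROR disk full",
]


def top_matches(lines, pattern, n):
    """Rough analogue of: grep -F PATTERN | sort | uniq -c | sort -rn | head -n N."""
    matching = [line for line in lines if pattern in line]  # grep -F: fixed-string match
    counts = Counter(matching)  # sort | uniq -c: count identical lines
    # sort -rn: most frequent first (ties broken alphabetically in this sketch)
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return ranked[:n]  # head -n N: keep the first N results


for line, count in top_matches(LOG_LINES, "ERROR", 3):
    print(f"{count:7d} {line}")
```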

By contrast, here is a typical list of topics discussed first, in traditional data science classes:

- Probability theory, random variables, maximum likelihood estimation

- Linear regression, logistic regression, analysis of variance, general linear model

- k-NN (k-nearest neighbors), hierarchical clustering

- Hypothesis testing, non-parametric statistics, Markov chains, time series

- NLP, especially word clouds (applied to small samples of Twitter data)

- Collaborative filtering algorithms

- Neural networks, decision trees, linear discriminant analysis, naive Bayes

There is nothing fundamentally wrong with these techniques (except the last two), but you are unlikely to use them in your career (not the rudimentary versions presented in the classroom, anyway) unless you are in a team of like-minded people all using the same old-fashioned black-box tools. They should indeed be taught, but maybe not at the beginning.

Topics that should also be included in a data science curriculum

The topics listed below should not be taught at the very beginning, but they are very useful and rarely included in standard curricula:

- Model selection, tool (product) selection, algorithm selection

- Rules of thumb

- Best practices

- Turning unstructured data into structured data (creating taxonomies, cataloging algorithms and automated tagging)

- Blending multiple techniques to get the best of each of them, as described here

- Measuring model performance (R-squared is the worst metric, but usually the only one taught in the classroom)

- Data augmentation (finding external data sets and features to get better predictive power, and blending them with internal data)

- Building your own home-made models and algorithms

- The curse of big data (different from the curse of dimensionality) and how to discriminate between correlation and causation

- How frequently data science implementations (for instance, look-up tables) should be updated

- From designing a prototype to deployment in production mode: caveats

- Monte Carlo simulations (a simple way to compute confidence intervals and test statistical hypotheses without even knowing what a random variable is)
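Regarding model performance, the sketch below computes R-squared next to a few other common metrics on a small invented example in which one prediction misses badly. R-squared still comes out close to 0.9, while the mean absolute error, root mean squared error, and worst-case error express the misses in the units of the target. The choice of metrics here is illustrative; which one matters depends on the cost of errors in the application.

```python
import math


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


def mae(y_true, y_pred):
    """Mean absolute error, in the units of the target."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error, also in the units of the target."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def max_abs_error(y_true, y_pred):
    """Largest single miss, relevant when one big error is costly."""
    return max(abs(t - p) for t, p in zip(y_true, y_pred))


# Invented example: four small misses and one miss of 100 units on the last point.
Y_TRUE = [100, 200, 300, 400, 500]
Y_PRED = [110, 190, 310, 390, 400]

for name, metric in [("R-squared", r_squared), ("MAE", mae),
                     ("RMSE", rmse), ("Max error", max_abs_error)]:
    print(f"{name:>9}: {metric(Y_TRUE, Y_PRED):.3f}")
```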
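A quick simulation illustrates one side of the curse of big data: when you scan many variables, some pairs will look strongly correlated purely by chance. The sketch below generates independent series of Gaussian noise, so any correlation between them is spurious by construction, and reports the largest absolute Pearson correlation among all pairs. The number of series, the series length of 20, and the seed are arbitrary assumptions; this shows the general multiple-comparisons effect, not a formal definition of the curse of big data.

```python
import itertools
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def max_spurious_correlation(num_series, length=20, seed=0):
    """Largest |r| over all pairs of independent Gaussian noise series."""
    rng = random.Random(seed)
    series = [[rng.gauss(0, 1) for _ in range(length)] for _ in range(num_series)]
    return max(abs(pearson(a, b)) for a, b in itertools.combinations(series, 2))


# The more variables you scan, the stronger the best chance correlation tends to be.
for k in (5, 50, 200):
    print(f"{k:>3} noise series: max |r| = {max_spurious_correlation(k):.2f}")
```

None of these correlations reflects a real relationship, which is why a strong correlation found by scanning many variables says little about causation on its own.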
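The Monte Carlo item can be illustrated with two standard resampling methods: a percentile bootstrap confidence interval (resample the data with replacement and take percentiles of the recomputed statistic) and a permutation test (shuffle group labels to see how often a difference as large as the observed one arises by chance). Neither requires a formula for a sampling distribution. This is a minimal sketch: the measurements are invented, and the 10,000 resamples and fixed seed are arbitrary choices.

```python
import random


def mean(xs):
    """Arithmetic mean of a non-empty sequence."""
    return sum(xs) / len(xs)


def bootstrap_ci(sample, stat=mean, num_resamples=10_000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for stat(sample)."""
    rng = random.Random(seed)
    n = len(sample)
    # Recompute the statistic on many resamples drawn with replacement.
    estimates = sorted(
        stat([rng.choice(sample) for _ in range(n)]) for _ in range(num_resamples)
    )
    # Number of estimates to trim from each tail.
    k = round((1 - level) / 2 * num_resamples)
    return estimates[k], estimates[num_resamples - 1 - k]


def permutation_test(a, b, num_permutations=10_000, seed=0):
    """Two-sided Monte Carlo p-value for a difference in means between a and b."""
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(num_permutations):
        rng.shuffle(pooled)  # random relabeling under "no difference"
        diff = abs(mean(pooled[: len(a)]) - mean(pooled[len(a):]))
        if diff >= observed - 1e-12:  # small tolerance for floating-point rounding
            hits += 1
    # Add-one correction keeps the estimated p-value strictly positive.
    return (hits + 1) / (num_permutations + 1)


# Made-up measurements, for illustration only.
group_a = [12.1, 11.8, 13.4, 12.9, 12.2, 13.0, 11.5, 12.7]
group_b = [13.2, 13.9, 12.8, 14.1, 13.5, 13.7, 14.4, 13.0]

print("95% bootstrap CI for the mean of A:", bootstrap_ci(group_a))
print("Permutation p-value, A vs B:", permutation_test(group_a, group_b))
```

The same two functions work for other statistics (a median, a ratio) by passing a different `stat`, which is much of the appeal of the approach for beginners.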

To find out more about these techniques, search for literature on the topic in question.

For related articles from the same author, visit www.VincentGranville.com. Follow me on LinkedIn.
