If you’re relatively new to the NLP and Text Analysis world, you’ve more than likely come across some pretty technical terms and acronyms that are challenging to get your head around, especially if you’re relying on scientific definitions for a plain and simple explanation.

We decided to put together a list of 10 common terms in Natural Language Processing, which we’ve broken down in layman’s terms to make them easier to understand. So if you don’t know your “Bag of Words” from your LDA, we’ve got you covered.

We chose these terms because we often find ourselves explaining them to users and customers on a day-to-day basis.

Natural Language Processing (NLP) – A field of Computer Science connected to Artificial Intelligence and Computational Linguistics. It focuses on interactions between computers and human language, and on a machine’s ability to understand, or mimic the understanding of, human language. Examples of NLP applications include Siri and Google Now.

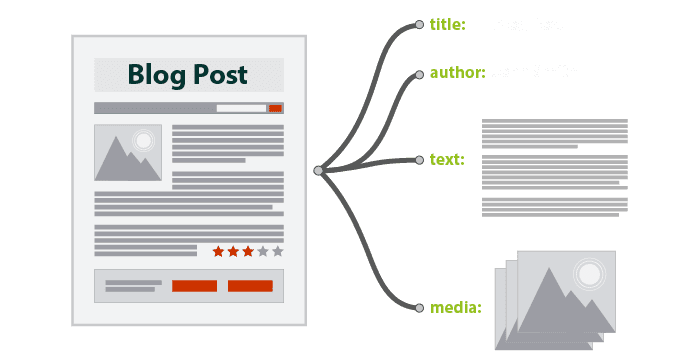

Information Extraction – The process of automatically extracting structured information from unstructured and/or semi-structured sources, such as text documents or web pages.

Named Entity Recognition (NER) – The process of locating and classifying elements in text into predefined categories such as the names of people, organizations, places, monetary values, percentages, etc.
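To make the idea of "locating and classifying" concrete, here is a minimal rule-based sketch in Python. It is only an illustration: the `GAZETTEER` lookup table, the regular expressions and the `find_entities` function are all made up for this example, and real NER systems are usually statistical models trained on annotated text rather than hand-written rules.

```python
import re

# Hypothetical lookup table of known names (an assumption for this sketch).
# A real system would learn to recognise names it has never seen before.
GAZETTEER = {
    "Tim Cook": "PERSON",
    "Apple": "ORGANIZATION",
    "Dublin": "PLACE",
}

# Simple patterns for two of the numeric categories mentioned above.
PATTERNS = [
    ("MONEY", re.compile(r"[$€£]\d[\d,]*(?:\.\d+)?")),
    ("PERCENT", re.compile(r"\d+(?:\.\d+)?%")),
]


def find_entities(text):
    """Return (start, entity_text, label) tuples sorted by position in the text."""
    found = []
    for name, label in GAZETTEER.items():
        for match in re.finditer(re.escape(name), text):
            found.append((match.start(), match.group(), label))
    for label, pattern in PATTERNS:
        for match in pattern.finditer(text):
            found.append((match.start(), match.group(), label))
    return sorted(found)


entities = find_entities(
    "Tim Cook said Apple will invest $1,000,000 in Dublin, up 12.5% on last year."
)
for start, entity, label in entities:
    print(f"{entity} -> {label}")
```

Each entity is both located (its start offset) and classified (its label), which are the two halves of the NER task.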

Corpus (plural: Corpora) – A usually large collection of documents that can be used to infer and validate linguistic rules, as well as to carry out statistical analysis and hypothesis testing.

Sentiment Analysis – The use of Natural Language Processing techniques to extract subjective information from a piece of text, e.g. whether an author is being subjective or objective, or even positive or negative. It is also referred to as Opinion Mining.
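One very simple way to approach the positive-or-negative question is to count words from a sentiment lexicon. The sketch below assumes a tiny hand-made word list (`POSITIVE` and `NEGATIVE` are invented for this example) and a hypothetical `polarity` function. It is a toy, not how production sentiment analysis is typically done.

```python
import re

# Hypothetical mini-lexicon (an assumption for this sketch).
# Real lexicons contain thousands of scored words.
POSITIVE = {"good", "great", "love", "excellent", "happy"}
NEGATIVE = {"bad", "terrible", "hate", "poor", "slow"}


def polarity(text):
    """Label text by comparing counts of positive and negative lexicon words."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(polarity("I love this phone, the camera is great"))
print(polarity("Terrible battery and slow screen"))
```

A word-counting approach like this ignores negation ("not good") and sarcasm, which is one reason practical systems tend to rely on trained classifiers instead.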

Word Sense Disambiguation – The ability to identify the meaning of words in context in a computational manner. A third-party corpus or knowledge base, such as WordNet or Wikipedia, is often used to cross-reference entities as part of this process. A simple example is an algorithm determining whether a reference to “apple” in a piece of text refers to the company or the fruit.
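The "apple" example can be sketched with a simplified version of the Lesk approach: pick the sense whose description shares the most words with the surrounding text. The `SENSES` descriptions below are written by hand for this illustration and stand in for a real knowledge base such as WordNet or Wikipedia; the `disambiguate` function is likewise hypothetical.

```python
import re

# Hypothetical sense descriptions (an assumption for this sketch).
# In practice these would come from a knowledge base.
SENSES = {
    "apple (company)": "technology company that designs the iphone mac computers and software",
    "apple (fruit)": "round edible fruit that grows on a tree and is eaten fresh or baked in a pie",
}

STOPWORDS = {"a", "an", "and", "the", "that", "is", "in", "on", "or", "of", "to", "i"}


def tokenize(text):
    """Lowercase the text and return its set of non-stopword tokens."""
    return {t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS}


def disambiguate(context):
    """Return the sense whose description overlaps most with the context words."""
    context_words = tokenize(context)
    return max(SENSES, key=lambda sense: len(context_words & tokenize(SENSES[sense])))


print(disambiguate("Apple unveiled a new iPhone and updated its Mac software"))
print(disambiguate("I baked an apple pie with fruit from the tree"))
```

When no sense overlaps with the context, this sketch simply falls back to the first sense listed, so a real implementation would need a better tie-breaking rule.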

Bag of Words (BOW) – A commonly used model in Text Classification methods. In the BOW model, a piece of text (a sentence or a document) is represented as a bag, or multiset, of words, disregarding grammar and even word order. The frequency or occurrence of each word is then used as a feature for training a classifier.
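As a rough illustration, Python's built-in `Counter` behaves like a multiset, so it can stand in for the "bag". The function names `bag_of_words` and `to_feature_vector`, the tokenization rule and the two example sentences are assumptions made for this sketch.

```python
import re
from collections import Counter


def bag_of_words(text):
    """Count how often each word occurs, ignoring order and grammar."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def to_feature_vector(bag, vocabulary):
    """Turn a bag into a list of counts, one per vocabulary word."""
    return [bag[word] for word in vocabulary]


docs = ["The cat sat on the mat", "The dog chased the cat"]
bags = [bag_of_words(d) for d in docs]

# The vocabulary is every distinct word seen across all documents.
vocabulary = sorted(set().union(*bags))
vectors = [to_feature_vector(b, vocabulary) for b in bags]

print(vocabulary)
for vector in vectors:
    print(vector)
```

Because only counts are kept, "the cat sat" and "sat the cat" produce exactly the same bag. The resulting count vectors are the kind of features a classifier could be trained on.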

Explicit Semantic Analysis (ESA) – Used in Information Retrieval, Document Classification and Semantic Relatedness calculation (i.e. how similar in meaning two words or pieces of text are to each other), ESA is the process of understanding the meaning of a piece of text as a combination of the concepts found in that text.

Latent Semantic Analysis (LSA) – The process of analyzing relationships between a set of documents and the terms they contain, accomplished by producing a set of concepts related to the documents and terms. LSA assumes that words that are close in meaning will occur in similar pieces of text.

Latent Dirichlet Allocation (LDA) – A common topic modeling technique, LDA is based on the premise that each document or piece of text is a mixture of a small number of topics and that each word in a document is attributable to one of the topics.
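The premise behind LDA can be sketched by running it "forwards", i.e. generating a document from topics rather than discovering topics in a document. The example below is only that generative story, not the inference step that real LDA implementations perform. The two hand-written `TOPICS`, the `alpha` value and the function names are assumptions for illustration; in the full model the word probabilities within each topic are also learned rather than fixed by hand.

```python
import random

# Hypothetical topics with hand-picked word probabilities
# (an assumption for this sketch).
TOPICS = {
    "sports": {"match": 0.4, "team": 0.4, "goal": 0.2},
    "finance": {"market": 0.5, "stock": 0.3, "bank": 0.2},
}


def sample_dirichlet(alpha, size, rng):
    """Draw a symmetric Dirichlet sample by normalising gamma draws."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(size)]
    total = sum(draws)
    return [d / total for d in draws]


def generate_document(num_words, alpha=0.5, seed=0):
    """Generate (word, topic) pairs from a per-document topic mixture."""
    rng = random.Random(seed)
    names = list(TOPICS)
    # Each document gets its own mixture of topics.
    mixture = sample_dirichlet(alpha, len(names), rng)
    words = []
    for _ in range(num_words):
        # Each word is attributed to exactly one topic...
        topic = rng.choices(names, weights=mixture)[0]
        vocab = TOPICS[topic]
        # ...and is then drawn from that topic's word distribution.
        word = rng.choices(list(vocab), weights=list(vocab.values()))[0]
        words.append((word, topic))
    return dict(zip(names, mixture)), words


mixture, words = generate_document(num_words=10)
print(mixture)
print(words)
```

Topic modeling with LDA works in the opposite direction: given only the words, it estimates the topic mixture for each document and the words that make up each topic.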